Data quality assurance guide for modern data teams

Learn how data quality assurance improves accuracy, consistency, and trust across your data ecosystem. Explore best practices and real-world strategies.

What is data quality assurance?

Data quality assurance is how an organization proactively maintains its data and all related systems to ensure that data is accurate, complete, and reliable. Without data quality assurance, data can become stale, go missing, or arrive so late that it is worse than useless to your organization: inaccurate data can be downright dangerous.

Data quality assurance (DQA) differs from data quality control (DQC) in that proactive approach. Whereas quality control corrects errors once they have been identified, DQA is the process in which a team continually checks its data against documented measures to ensure the trustworthiness of the data at all times.

Why data quality assurance matters

Data quality assurance helps any organization build a collaborative environment where quality data is prioritized across all departments. Reliable data means that decision-makers can act on the information in front of them, knowing they can trust it. By improving overall data quality, companies position themselves to act when they need to, instead of needing to fix errors or validate reports before they can decide on and execute a strategy.

DQA also assists organizations with their compliance and reporting. By continuously cleansing data and making sure it is complete and accurate, regular data validation keeps compliance reports in top shape all year long. AI-based data quality tools like Ataccama ONE help execute the proactive work of data quality assurance.

Key dimensions of data quality assurance

The dimensions of data quality assurance change based on the use case, but they are generally standardized around eight or nine criteria that evaluate whether data can be used in a way that benefits the organization.

According to Gartner, the dimensions of data quality assurance include:

- Accessibility. Data is retrievable and ready to use.

- Accuracy. Data correctly reflects the real-world entity or event it describes.

- Completeness. Data is not missing required fields or records.

- Consistency. Data is structured the same across the whole organization.

- Precision. Data is recorded at the level of detail its use requires, from end to end.

- Relevancy. Data is used by one or more business units.

- Timeliness. Data is current enough to positively impact decision-making.

- Uniqueness. Data is not duplicated across the enterprise.

- Validity. Data is verifiable and matches business requirements.

An organization will choose its data quality tools based on which of these dimensions matter most for its data.
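Several of these dimensions can be expressed as simple ratios. The following is a minimal sketch, not a definitive implementation, of how completeness, uniqueness, and validity might be scored. The sample records, the field names, and the simplified email pattern are all assumptions made for illustration.

```python
import re

# Hypothetical sample records; field names are assumptions for illustration.
records = [
    {"id": 1, "email": "ana@example.com", "country": "CZ"},
    {"id": 2, "email": None, "country": "US"},
    {"id": 2, "email": "bob@example", "country": "US"},
    {"id": 3, "email": "eve@example.com", "country": ""},
]

# Deliberately simple pattern: something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def uniqueness(rows, field):
    """Ratio of distinct values to total rows for the field."""
    values = [r[field] for r in rows]
    return len(set(values)) / len(values)


def validity(rows, field, pattern):
    """Share of non-empty values that match the expected pattern."""
    values = [r[field] for r in rows if r.get(field)]
    return sum(1 for v in values if pattern.match(v)) / len(values)


scores = {
    "email completeness": completeness(records, "email"),
    "id uniqueness": uniqueness(records, "id"),
    "email validity": validity(records, "email", EMAIL_PATTERN),
}
for name, score in scores.items():
    print(f"{name}: {score:.0%}")
```

Scoring each dimension as a ratio between 0 and 1 makes it easier to compare dimensions and to track them over time. Which dimensions to score, and what counts as a valid value, depends on the organization's own priorities.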

Core components of a data quality assurance framework

One of the first steps in implementing data quality assurance is having a data quality framework. A data quality framework is the set of tools and protocols an organization uses to improve its overall data quality.

Managing data in an ad-hoc manner is a sure way to spend a lot of time and money on miserably poor results. An organization that is interested in making data quality a priority should be willing to implement a company-wide data quality framework in order to meet business goals and achieve success in a global market.

A data quality framework defines the priorities your organization has regarding its data and the ways it plans to address quality assurance over time.

This data quality framework may contain:

- Data profiling, where data is categorized and assessed.

- Tools to assess the nine data quality dimensions listed above.

- Data quality rules for how data should be handled and by whom.

- Data governance, or the processes that exist within your data landscape and the roles responsible for them.

- Data cleansing to clean up what might be considered “dirty” data and improve the assets that are there.

- Continuous monitoring that automatically checks all data for issues.

When comprehensive data observability tools are in use, all of these different aspects of controlling data quality work together to ensure end-to-end accuracy for the entire data pipeline.

The data quality assurance process

Implementing a process that assures data quality is a multi-step endeavor.

Step 1 – Data profiling and assessment

First, assess the data that you do have. Is it accurate? What’s missing? What do you have? What do you wish that you had?

Once you’ve profiled the data that is there, you can see what the structure of the data is, how it’s organized, and what gaps exist. This gives you an idea of where you are starting from, and paves the way to improve on your current data set and data quality processes moving forward.
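As a rough illustration of this step, the sketch below profiles a small set of rows by counting missing values, distinct values, and the most common value in each column. The column names and sample values are hypothetical, and a real profiling tool would typically report far more.

```python
from collections import Counter

# Hypothetical rows as they might arrive from a CSV export; names are assumptions.
rows = [
    {"customer_id": "101", "signup_date": "2024-01-15", "plan": "pro"},
    {"customer_id": "102", "signup_date": "", "plan": "free"},
    {"customer_id": "103", "signup_date": "2024-02-02", "plan": "Pro"},
    {"customer_id": "104", "signup_date": "2024-03-09", "plan": ""},
]


def profile(rows):
    """Summarize each column: missing count, distinct count, most common value."""
    summary = {}
    for col in rows[0].keys():
        values = [r[col] for r in rows]
        present = [v for v in values if v != ""]
        most_common = Counter(present).most_common(1)
        summary[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "top_value": most_common[0][0] if most_common else None,
        }
    return summary


report = profile(rows)
for col, stats in report.items():
    print(col, stats)
```

Even this small summary surfaces gaps (a missing sign-up date and a missing plan) and a likely inconsistency: "pro" and "Pro" are counted as two distinct plans.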

Step 2 – Data cleansing and validation

Data cleansing identifies and processes errors. These errors can include inconsistencies, inaccuracies, duplicates, and more. By addressing errors and validating the data that is there, you improve data quality and consistency. Through data cleansing, inaccurate or missing data can be replaced with consistent data that can be harnessed for business use cases.

Data cleansing often involves the following tasks:

- Modifying or deleting corrupt data.

- Correcting typos and other data entry mistakes.

- Eliminating redundancies.

- Filling in missing information when possible.

- Standardizing data to prepare it for AI uses.

- Decluttering outdated or unnecessary data sets.

Data cleansing improves the accuracy of your data, enhances the consistency, and boosts the reliability of the data that you have. In addition, it makes sure that data doesn’t “rot” away in unseen spaces, taking up valuable storage space.
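A few of the cleansing tasks above can be sketched in a handful of lines. The example below is one possible approach under stated assumptions: the `cleanse` function, its parameters, and the field names are hypothetical, and filling a missing plan with "free" is an illustrative policy choice rather than a recommendation.

```python
def cleanse(rows, key, defaults, lower_fields=(), upper_fields=()):
    """Trim text, fill known defaults, standardize casing, drop duplicate keys."""
    seen = set()
    cleaned = []
    for row in rows:
        fixed = {}
        for field, value in row.items():
            if isinstance(value, str):
                value = value.strip()  # remove stray whitespace
            if value in (None, ""):
                value = defaults.get(field)  # fill only where a default exists
            if isinstance(value, str) and field in lower_fields:
                value = value.lower()
            if isinstance(value, str) and field in upper_fields:
                value = value.upper()
            fixed[field] = value
        if fixed[key] in seen:
            continue  # keep the first occurrence, skip later duplicates
        seen.add(fixed[key])
        cleaned.append(fixed)
    return cleaned


raw = [
    {"customer_id": "101", "plan": " Pro ", "country": "cz"},
    {"customer_id": "102", "plan": "", "country": "US"},
    {"customer_id": "101", "plan": "pro", "country": "CZ"},
]
clean = cleanse(
    raw,
    key="customer_id",
    defaults={"plan": "free"},
    lower_fields=("plan",),
    upper_fields=("country",),
)
for row in clean:
    print(row)
```

Keeping the first occurrence of a duplicate is the simplest rule. In practice, teams often choose the most recent or most complete record instead.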

Step 3 – Monitoring and reporting

Once profiling, assessment, cleansing, and validation are complete, you can begin using data monitoring to continuously track and evaluate your data for reliability and accuracy, improving quality with each cleared error.

As your data quality improves, data reporting will help you transform this higher-quality data into the structured reports and dashboards that executives rely on to make decisions for the business.

Data monitoring and data reporting work together: the monitoring creates trustworthy data, and the reporting makes the data usable.
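In its simplest form, monitoring means evaluating a set of checks against each new batch of data and raising an alert when a score drops below an agreed threshold. The sketch below assumes a hypothetical `Check` structure, example thresholds, and an `orders` batch. Commercial monitoring tools do considerably more, but the core loop looks similar.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    name: str
    metric: Callable[[list], float]  # returns a score between 0 and 1
    threshold: float                 # minimum acceptable score


def null_free_rate(field):
    """Build a metric: share of rows with a non-empty value for the field."""
    def metric(rows):
        return sum(1 for r in rows if r.get(field) not in (None, "")) / len(rows)
    return metric


def run_checks(rows, checks):
    """Evaluate every check and return the failures as alert messages."""
    alerts = []
    for check in checks:
        score = check.metric(rows)
        if score < check.threshold:
            alerts.append(f"{check.name}: {score:.0%} is below {check.threshold:.0%}")
    return alerts


# Hypothetical batch of orders; one amount is missing.
batch = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": 15.5},
    {"order_id": 4, "amount": 9.9},
]
checks = [
    Check("order_id completeness", null_free_rate("order_id"), 1.0),
    Check("amount completeness", null_free_rate("amount"), 0.9),
]
alerts = run_checks(batch, checks)
for alert in alerts:
    print(alert)
```

The same alert list can feed a report or dashboard, which is where monitoring and reporting meet.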

Step 4 – Continuous improvement and governance

The final step in a data quality assurance process is implementing continuous improvement and company-wide data governance. Data governance is the set of policies, roles, and standards that allow you to roll out your data prioritization plan across your organization so that all teams can be on the same page in creating and maintaining usable, high-quality data. Implementing these data governance policies can help democratize data across your organization and ensure that data-driven decision-making is happening wherever and whenever possible.

Best practices to elevate data quality assurance

There are a few best practices to help elevate data quality assurance processes.

Start where you are

One of the best ways to elevate your data quality is to start from where you are. Evaluate your current data quality status:

- How does your organization handle data now?

- What kinds of processes do you have in place?

- What is working well?

- Where does your organization encounter speedbumps surrounding data?

- What goals do you have surrounding data?

Once you’ve got a handle on what your data capabilities are currently, you can assess your needs moving forward.

Foster a data-centric culture

Along with the data quality assurance process steps above, fostering a data-centric or data-driven culture will communicate to everyone across all teams and departments that quality data is a company-wide responsibility. It is everyone’s job to do what they can to maintain the accuracy and integrity of the company’s data, including past, present, and future data.

This will entail training employees at all levels on what data quality looks like, helping them to understand what the data does and why it is important. In providing data quality training, companies can help each employee at every level understand their role in maintaining the health of your data, and therefore, the health of the organization and its future.

Have a data governance strategy

We mentioned this above, but it bears repeating: having a data governance strategy can make or break an organization’s ability to get a handle on their data and make it usable. Without clear data ownership, no one can take responsibility for data that falls short of quality standards. And without clear policies, there isn’t a way to ensure that each person does their part to keep your data in tip-top shape.

Invest in data quality tools

Using a data monitoring tool or software that is built for end-to-end data management is essential in the data-driven world that we live in. AI-assisted models that automate the time-intensive processes of data quality management can help cleanse and validate your data in a fraction of the time it would take the best engineers.

Create feedback loops and adapt as needed

There’s no finish line for data integrity. As soon as your data is clean, validated, and ready to report on… new data washes onto shore. We’re allowed to mix our metaphors here — the data landscape is changing so rapidly that a single one just doesn’t keep up.

So create feedback loops inside your teams. Periodically assess if the information available to different teams is enough (or too much), if they have the training they need to analyze that data, and if there is other data that would be more useful to track down instead.

A great data quality assurance process is structured enough to stand up to scrutiny, and nimble enough to handle the waves of data washing toward it.

Common challenges and how to overcome them

There are many common challenges that companies face when establishing a data quality process. Data teams often cite data silos, lack of resources, and a company culture that doesn’t see data quality as a priority. Many of these challenges can be overcome with a thorough data governance plan in place, as well as fostering a company culture that values high-quality data. A data-centric culture helps to bring diverse teams together across the organization and set them to working in the same direction.

Here are some data quality challenges and their solutions:

Challenge: Data silos

Solution: Centralize all data in a cloud-based system and use data integration tools to connect different data sources. Promote a collaborative culture where all teams work together with standardized data formats and agreed-upon data governance plans.

Challenge: Lack of data-centric culture

Solution: Provide training at all levels of the company to help employees understand why high-quality data matters and what their role in maintaining the organization’s data quality looks like.

Challenge: Poor or nonexistent data governance rules

Solution: Assess current rules and implement standardized processes for dealing with data in every area of the business. Create ownership roles and empower all employees to impact the data and be impacted by the data.

Tools and technologies for data quality assurance

There are many tools and technologies for data quality assurance on the market today. These data tools perform tasks like data profiling and assessment, data cleansing and validation, data monitoring and reporting, and data governance and compliance. Some tools handle one aspect really well, and others specialize in a different niche.

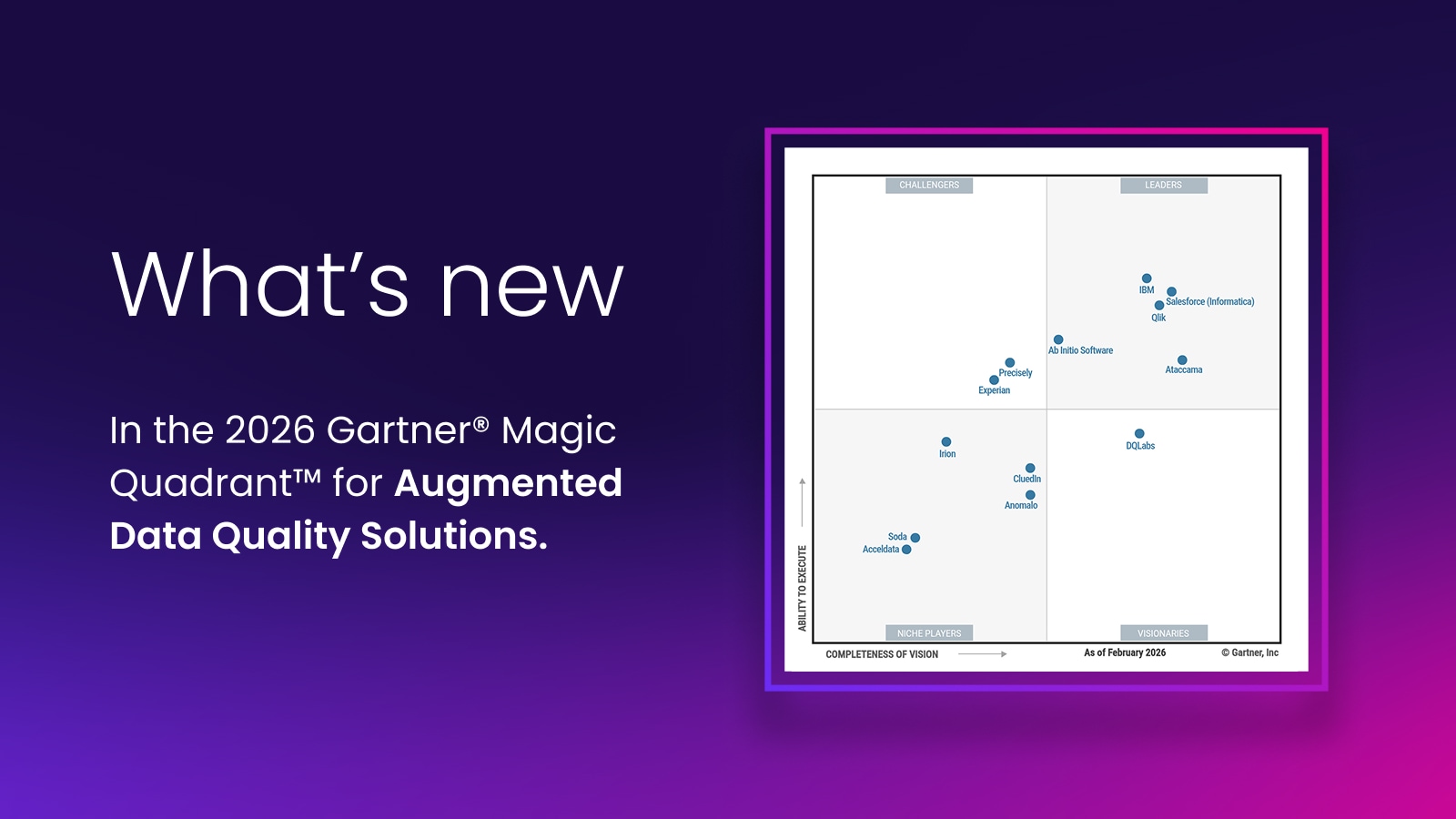

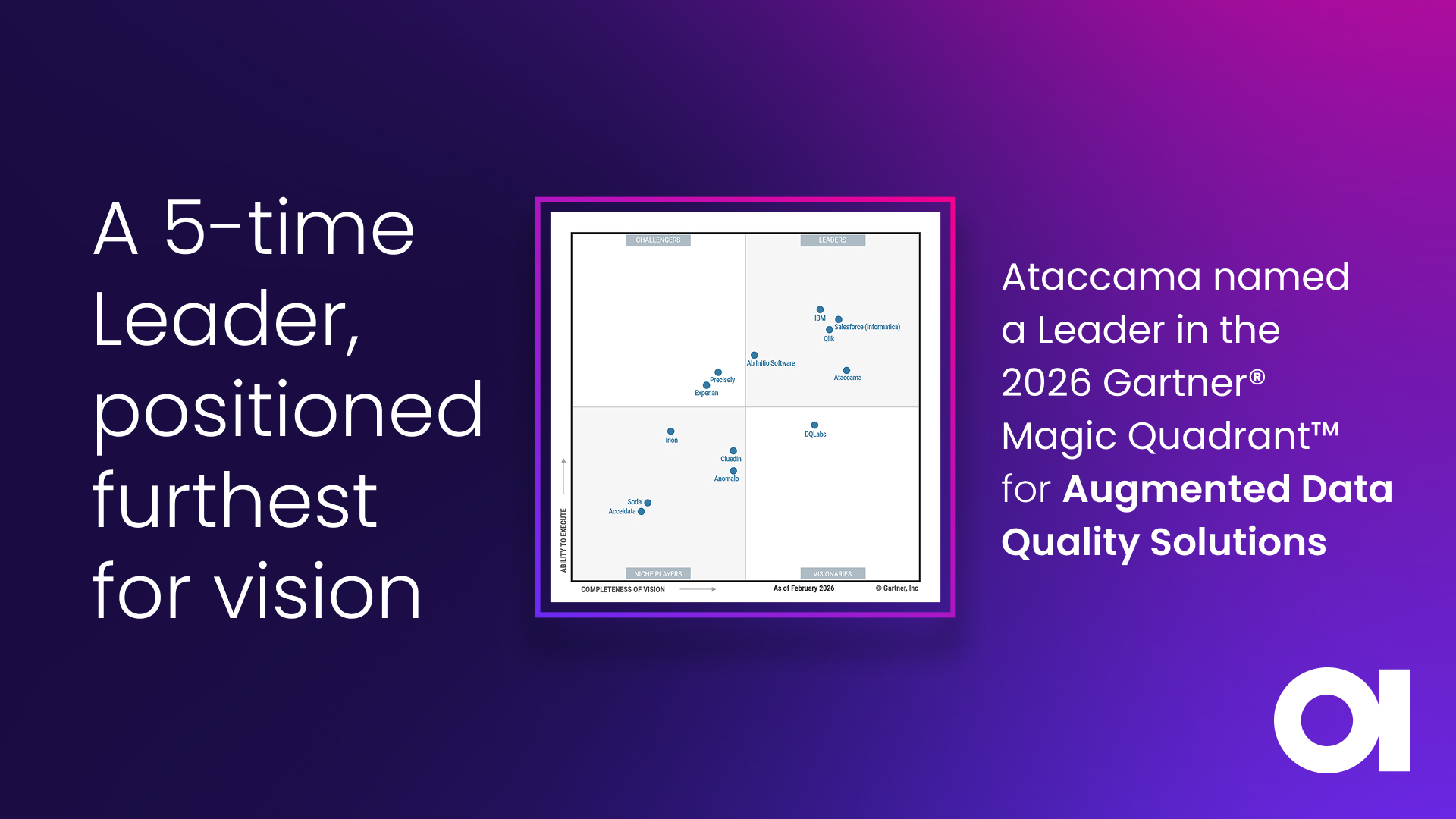

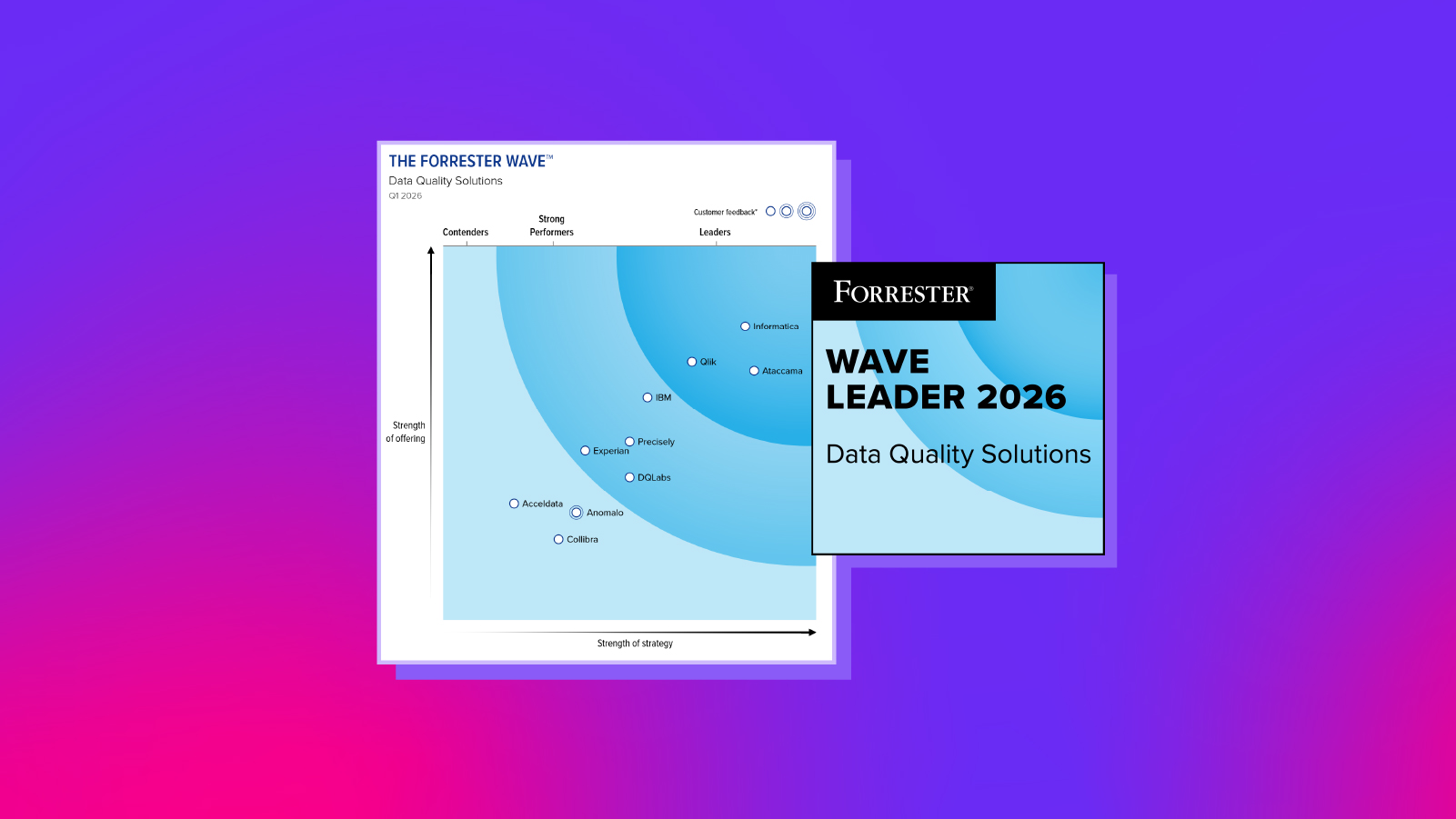

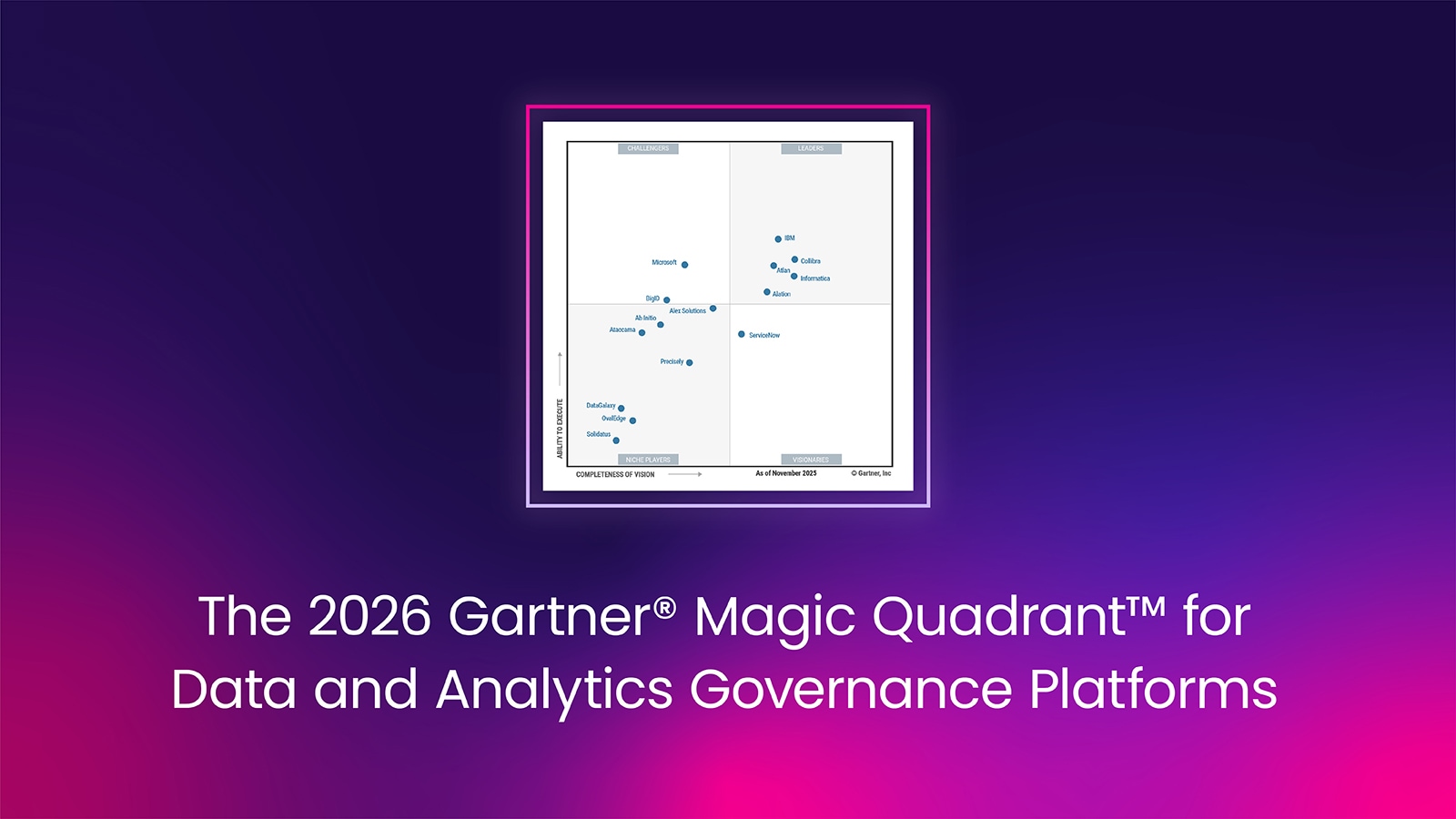

Tools like Ataccama ONE use AI-powered analysis to perform end-to-end data pipeline observability. Several tools on the market, like Talend and Informatica, offer similar integrated solutions. Others, like Soda and Monte Carlo, offer specialized monitoring tools, and still others, like Great Expectations, are open-source libraries.

There are also data governance tools available on the market, and they are a must-have for keeping an organization’s data operation running smoothly. These tools enforce your policies and track data throughout its lifecycle, focusing on increased accessibility and compliance.

Measuring success: Key metrics for data quality assurance

How do you measure success in data quality assurance? With a data quality tool like Ataccama, automated reports can be set to monitor things like accuracy, data completeness, lack of conflicts across systems, adherence to rules, and timeliness, and can be formatted for custom KPIs.

Tracking metrics across your data helps to ensure that your data continues to be trustworthy, and trustworthy data is a must for accurate decision-making.
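Two of the metrics mentioned above, lack of conflicts across systems and timeliness, can be approximated as shown in this sketch. It is illustrative only: the `crm` and `billing` systems, the field names, and the 24-hour freshness window are assumptions, and the reference time is fixed so the example is repeatable.

```python
from datetime import datetime, timedelta, timezone


def consistency_rate(system_a, system_b, field):
    """Share of shared keys whose field value agrees in both systems."""
    shared = system_a.keys() & system_b.keys()
    if not shared:
        return 1.0  # nothing to compare, so nothing conflicts
    agree = sum(1 for k in shared if system_a[k][field] == system_b[k][field])
    return agree / len(shared)


def freshness_rate(rows, now, max_age):
    """Share of rows updated within the allowed age window."""
    fresh = sum(1 for r in rows if now - r["updated_at"] <= max_age)
    return fresh / len(rows)


# Fixed reference time so the example gives the same result on every run.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)

# Hypothetical records for the same customers held in two systems.
crm = {
    "c1": {"email": "ana@example.com", "updated_at": now - timedelta(hours=2)},
    "c2": {"email": "bob@example.com", "updated_at": now - timedelta(days=3)},
}
billing = {
    "c1": {"email": "ana@example.com"},
    "c2": {"email": "robert@example.com"},
}

kpis = {
    "email consistency": consistency_rate(crm, billing, "email"),
    "crm freshness (24h)": freshness_rate(list(crm.values()), now, timedelta(hours=24)),
}
for name, value in kpis.items():
    print(f"{name}: {value:.0%}")
```

Tracked over time, ratios like these show whether data quality is improving or degrading.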

Conclusion

Data quality assurance is essential to maintaining clean, usable, and trustworthy data. In training your entire organization to pay attention to data quality guidelines and implement thorough data governance policies, you’re ensuring that your company’s data will remain accurate and reliable when it matters most — which is every time you need to make a decision.

FAQs about data quality assurance

- What is data quality assurance?

- Data quality assurance is the proactive management of data across the data pipeline, from end to end.

- Why is data quality assurance important for organizations?

- Data quality assurance becomes more and more important for organizations as the volume of data increases. Ensuring data quality means businesses have accurate, reliable, and consistent data with which they can make timely business decisions.

- What are the main steps in the data quality assurance process?

- The main steps in the data quality assurance process are data profiling and assessment, data cleansing and validation, data monitoring and reporting, and continuous improvement and data governance.

- How does data quality assurance differ from a data quality audit?

- Data quality assurance differs from a data quality audit because data quality assurance is a proactive approach to continually upgrading your data so that it remains usable and useful at all times. A data quality audit is a single capture of your data’s quality at a moment in time, without a process to enhance or upgrade that data.

- What are the key dimensions of data quality?

- The key dimensions of data quality are: accessibility, accuracy, completeness, consistency, precision, relevance, timeliness, uniqueness, and validity.

- What tools or software support data quality assurance?

- Commercial software solutions like Ataccama, Informatica, and Oracle EDQ (among others) help companies support their data quality assurance program. Using AI-driven models, Ataccama gives companies the tools they need to manage their data quality assurance across their enterprise.

- Who is responsible for maintaining data quality assurance?

- Data quality assurance is everyone’s responsibility. From front-line employees entering data into the system, to data engineers and data analysts managing and assessing the data, to the Chief Data Officer using the data to drive the business forward, everyone in an organization can and should work together to maintain data quality.

- How do you measure success in data quality assurance?

- Measuring success in data quality assurance depends upon identifying your organization’s KPIs, enacting and enforcing data governance policies, assigning data-specific roles responsible for data quality management, and adopting a company-wide attitude toward data quality and data stewardship.

- What challenges do teams face when implementing data quality assurance?

- Data quality teams face many challenges, such as data silos, resource constraints, and the complexity of data sets that keep growing and evolving. In addition to issues with the data itself, data quality teams often face resistance to cultural change in how the company handles data, and poor data governance or a lack of data ownership can cause frustration among data quality assurance teams.

- How can automation improve data quality assurance?

- Automation can improve data quality assurance by continuously monitoring data as it flows from input to report, flagging errors and inconsistencies, and providing root-cause analysis and data lineage reports to ensure errors are corrected at the source, not just patched up down the line.

David Lazar

David is the Head of Digital Marketing at Ataccama, bringing eight years of experience in the data industry, including his time at Instarea, a data monetization company within the Adastra Group. He holds an MSc. from the University of Glasgow and is passionate about technology and helping businesses unlock the full potential of their data.