Ataccama ONE Gen2: Your Questions Answered

In November, we announced Ataccama ONE Gen2, our self-driving data management & governance platform.

We have been getting a lot of questions since then: about new features, differentiators, AI, partnerships & training, and many other topics. In this blog post, we answer the most frequently asked ones, grouped into categories.

If you have missed the launch keynote where Ataccama ONE Gen2 was announced, you can watch it here.

General Questions

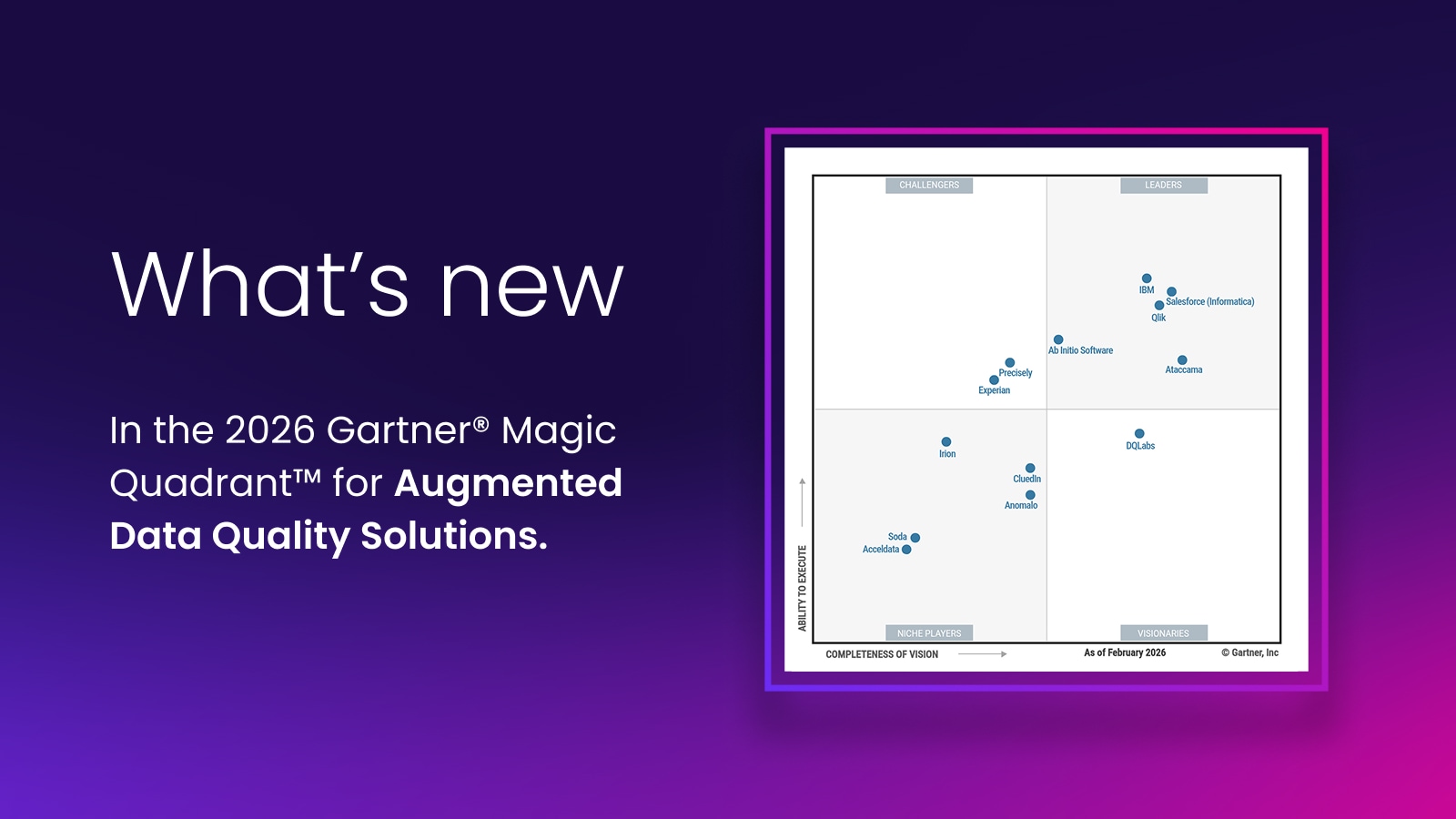

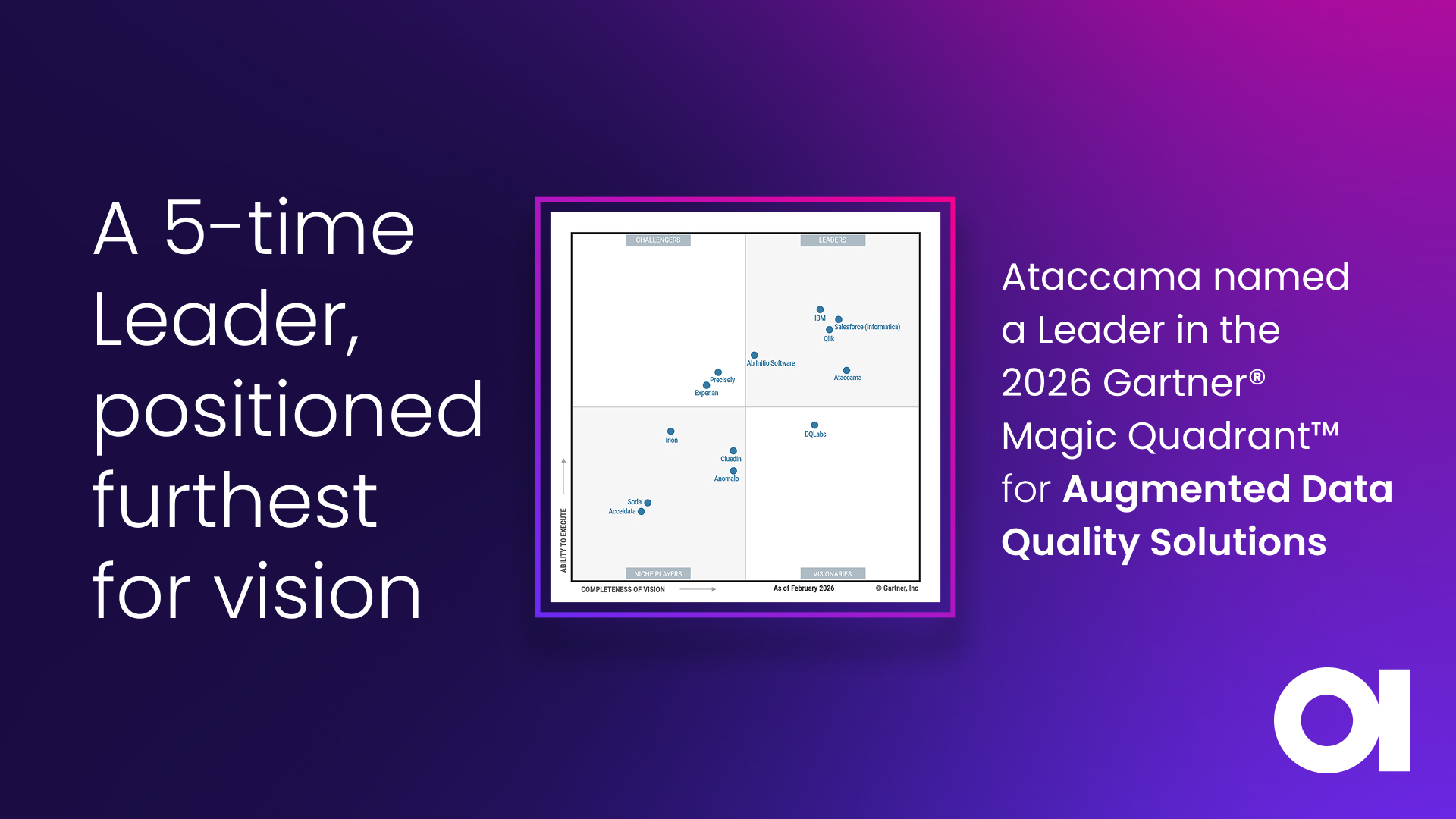

How does Ataccama differentiate itself from other data and metadata management platforms?

Ataccama ONE combines metadata management and data processing. Unlike most pure metadata management solutions, Ataccama usually starts with the actual data. We analyze not only structures but actual content. This enables us to find hidden relationships, patterns, and anomalies in the dataset that would not be captured in traditional metadata management platforms.

Learn more about the Ataccama ONE platform.

How do you make the concept of MDM land with business users?

The technical aspects of MDM are alien to many business users, but the core idea is something most of them expect: a single, trustworthy version of the truth, with all the relevant information available in one place. We try to provide this experience to end users without requiring them to dive into technical details or rely on a more technical colleague.

Even though we are automating a lot of the technical aspects of MDM, we’ve maintained the option for experienced technical users to configure low-level details of the MDM hub. Automation simplifies and speeds up configuration but does not limit the user.

Learn more about how we automate Master Data Management.

How has Ataccama moved towards becoming a more business-oriented tool as opposed to a development-oriented tool?

We believe that both approaches make sense and are complementary. Ataccama ONE Gen2 brings better integration between ONE Desktop and ONE Webapp. This means that ONE metadata is available and shared across both options. We try to bring more easy-to-use options to the web front end, but keep the doors open for power users with the desktop environment.

The desktop environment can now be used to create virtual catalog items (i.e., views derived from other catalog items), rules, exports, or custom integrations. These components can then be documented in the catalog and transparently used by business users.

Data governance is a combination of people, process, and technology. How did you take the human factor into account for Gen2?

This is a good point, and it is one of the reasons why we start documenting data by looking at the data itself. The automated process can discover hidden facts, spot anomalies, and find relations that would otherwise stay hidden. We can also notify users about unexpected changes that are often caused by human factors. The combination of automation and transparency (giving the end user as much information about the data as possible) helps both minimize the negative impact of the human factor and maximize its potential.

What are some collaboration and social governance features in the new platform?

At the moment, there are multiple ways the data can get labeled:

- Manually by the end user

- Automatically by a rule

- Automatically by AI based on similarity with other labeled items

- Automatically by profiling logic (found patterns, outliers,…)

- Automatically by DQ anomalies (data is different than it was before)

- Automatically by a DQ rule based on other labels. For example, AI might find data that is similar to something else that was labeled before. This will automatically show the data quality of a catalog item

This means that even a small amount of user input (labeling some datasets) can lead to a vast amount of information available to the end users (via AI automation).
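To illustrate the idea of labeling by similarity, here is a minimal sketch that assumes each catalog item is represented by a set of sampled values and compares items by Jaccard similarity. The function names, the threshold, and the choice of Jaccard similarity are assumptions made for illustration; this is not the algorithm Ataccama ONE uses.

```python
def jaccard(a: set, b: set) -> float:
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_labels(labeled: dict, unlabeled: dict, threshold: float = 0.5) -> dict:
    """Suggest a label for each unlabeled item from its most similar labeled item.

    labeled:   {item_name: (set_of_sample_values, label)}
    unlabeled: {item_name: set_of_sample_values}
    Items whose best similarity is below `threshold` get no suggestion.
    """
    suggestions = {}
    for name, values in unlabeled.items():
        best_label, best_score = None, 0.0
        for ref_values, label in labeled.values():
            score = jaccard(values, ref_values)
            if score > best_score:
                best_label, best_score = label, score
        if best_label is not None and best_score >= threshold:
            suggestions[name] = best_label
    return suggestions


# Hypothetical catalog items: one labeled by a user, two not yet labeled.
labeled = {"customers.country": ({"US", "DE", "FR"}, "country_code")}
unlabeled = {
    "orders.ship_country": {"US", "DE", "FR", "CZ"},
    "orders.amount": {"10", "20"},
}
print(suggest_labels(labeled, unlabeled))
```

A single manually labeled item is enough here to produce a suggestion for a similar, unlabeled one, which is the effect described above.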

Additional social governance features like user ratings and feedback are on the roadmap.

Partnerships, Training, and Implementation

Do you plan to start Ataccama University?

Ataccama already offers a variety of training options, from self-paced tutorials to instructor-led labs. The training options cover all relevant platform users, from business data stewards to technical data stewards to DevOps, and provide learning paths from novice to expert users.

What does partner enablement look like?

Partnerships are of critical importance for Ataccama. We build long-term, prosperous partnerships with consultancies, system integrators, and technology vendors. For each partnership level, we offer enablement training, documentation, and artifacts, as well as technical, marketing, and go-to-market support. Please contact us at partners@ataccama.com if you would like to become a partner of Ataccama.

What is your implementation model? Do you have local system integrators or do we directly deal with Ataccama?

Our customers may choose to engage Ataccama professional services for the solution implementation, or cooperate with one of Ataccama’s certified partners in the Americas, EMEA, and APAC. The list of our partners can be found at www.ataccama.com/partners.

Data Catalog

Is the data catalog just a reference to metadata? Does the AI platform define these schemas?

Our data catalog collects metadata from multiple sources. It captures metadata from data sources directly, imports it from other metadata tools, and generates some metadata using statistical analysis and AI (including relations, domains, etc). It also offers a collaborative interface to curate metadata manually if needed.

Data Lineage

Aggregation of disparate data: when we receive the data will we know where it came from, how it’s been modified, or whether it’s fit for the purpose intended?

In general, yes, but the completeness of this information may depend on the particular source. If the data source is, for example, a file on an FTP server, we automatically know just that. Through our partnership with Manta, we can also parse a more complete data lineage from existing scripts, ETL tools, etc. For example, if we let Manta analyze some ETL jobs, we may discover that the file was created via an export from a certain database and then use this information within Ataccama ONE.

As for whether it is fit for purpose, Ataccama ONE tries to display as much information about data as possible. Apart from technical metadata and data lineage, we will also automatically evaluate any relevant data quality rules and look for anomalies and outliers in the dataset. This gives the user additional information to decide whether the dataset is fit for the purpose intended.

How does Ataccama ONE internal lineage integrate with Manta lineage?

Ataccama ONE lineage includes information either gathered by our internal processes or imported via APIs. Manta parses external ETL tools, reporting tools, database scripts, etc. to add a broader picture automatically.

Policies Module

Do policies in the data catalog integrate with the depersonalization & anonymization logic in MDM?

Yes, one of the roadmap goals for our policy module is to allow fully automated application of depersonalization and anonymization logic for data consumption. This is not limited to our MDM; it applies to any data access through Ataccama interfaces.

Is the new policy management functionality included in the data catalog license or is it a separate module?

Policy Management is a new, additional module in the Ataccama ONE offering.

Does the privacy module address Australian Privacy Requirements (which could be different to GDPR)?

The privacy module is generic and has been successfully used not only for GDPR but also for other data privacy regulations around the world.

Data Quality and Reporting

Can Ataccama ONE produce a report to show compliance with business policies?

All of this information is available via APIs and can be exported in a desired format to any target database. Typically, we would configure a custom report in any standard reporting tool and just feed the data from Ataccama ONE there.

Can Ataccama ONE measure how effectively all data assets are monetized?

It is not a typical use case but yes, if you can define a set of rules that help us evaluate this metric, it can then be measured automatically as a custom data quality dimension in the tool and displayed in reports.

Does Ataccama ONE integrate in any way with an MLOps architecture, for example, to validate DQ before a model makes predictions based on new data?

Yes, the tool is well suited to MLOps use cases. It can be used either to just validate DQ and look for anomalies before the data is consumed (as part of some external process) or it can even orchestrate the whole data preparation pipeline, including data transformation, standardization, and enrichment.
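As a rough illustration of validating DQ before data reaches a model, the sketch below evaluates a set of rules on incoming records and only lets the batch through if every rule meets a minimum pass rate. The `Rule` class, the `validate` function, and the threshold are hypothetical names chosen for this example; they are not part of any Ataccama API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """A named data quality check applied to a single record."""
    name: str
    check: Callable[[dict], bool]


def validate(records: list, rules: list, min_pass_rate: float = 0.95):
    """Return (ok, pass_rates), where ok is True only if every rule
    passes on at least `min_pass_rate` of the records."""
    pass_rates = {}
    for rule in rules:
        passed = sum(1 for record in records if rule.check(record))
        pass_rates[rule.name] = passed / len(records) if records else 0.0
    ok = all(rate >= min_pass_rate for rate in pass_rates.values())
    return ok, pass_rates


# Hypothetical rules and data for a model that expects a valid age.
rules = [
    Rule("age_present", lambda r: r.get("age") is not None),
    Rule("age_in_range", lambda r: r.get("age") is not None and 0 <= r["age"] <= 120),
]
records = [{"age": 30}, {"age": 200}, {"age": None}, {"age": 45}]

ok, rates = validate(records, rules, min_pass_rate=0.7)
if not ok:
    print("Batch rejected before prediction:", rates)
```

In an external pipeline, a gate like this would sit between data ingestion and the prediction step, so that a failing batch never reaches the model.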

AI and Automation

Can an internal data model be created from multiple sources (with differing structures) using AI? Is the data between source and internal model mapped using AI?

The metadata management part of our offering documents existing models. It can import the model from multiple sources, and it uses AI to find additional information, including similarities, mappings, etc.

In the Master Data Management part of our offering, the actual need for modeling arises. The internal data model can be created from multiple sources with differing structures. The AI helps with the creation of the model by providing the relevant extra information. Automated data mapping between sources and the internal model using AI is a work in progress and will be added in the future.

Is the ML or AI shared?

The algorithms are pre-trained to work out of the box to some extent, and then they keep improving on your particular datasets based on your particular usage patterns. We are currently working on ways to share certain knowledge between individual deployments. This will be available for on-premises as well as for the cloud, but would depend on a special contractual agreement. We will require your consent to take anonymized usage data from your environments and patch your models with other customers’ usage data to improve the performance of AI models.

Can the Anomaly Detection feature be used concurrently with PII features?

Yes, it can. The Ataccama ONE Policy management module is transparent for other use cases. If I set up a policy that the user is not allowed to see certain PII information, and later on the user is notified about an anomaly, the user will only see the details that are relevant but limited according to the policy.

Does the automated detection of outliers work in real time?

Out of the box, outlier detection works automatically for batch processing. It can also be configured to work with small batches and be evaluated, for example, every 5 minutes. Full support for streaming data in anomaly detection is on our roadmap.
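The small-batch approach can be sketched as follows. This example assumes numeric values, a rolling window of recently seen values, and a modified z-score based on the median and the median absolute deviation (MAD); the class name, window size, and threshold are illustrative assumptions and do not describe the detection method used inside Ataccama ONE. Scheduling (for example, every 5 minutes) is left to an external scheduler.

```python
from collections import deque
from statistics import median


class MicroBatchOutlierDetector:
    """Flags outliers in each small batch against a rolling window of history."""

    def __init__(self, window: int = 1000, threshold: float = 3.5):
        self.history = deque(maxlen=window)  # recent non-outlier values
        self.threshold = threshold           # modified z-score cut-off

    def process(self, batch: list) -> list:
        """Return the values in `batch` that look like outliers."""
        outliers = []
        if len(self.history) >= 5:  # need some history before judging
            med = median(self.history)
            mad = median(abs(x - med) for x in self.history)
            if mad > 0:
                outliers = [
                    x for x in batch
                    if 0.6745 * abs(x - med) / mad > self.threshold
                ]
        # Only values considered normal extend the history.
        self.history.extend(x for x in batch if x not in outliers)
        return outliers


detector = MicroBatchOutlierDetector()
detector.process([10, 11, 9, 10, 12, 10, 11])  # first batch only builds history
print(detector.process([10, 11, 95]))          # second batch is checked against it
```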

Is automatic rule discovery on your roadmap?

Yes, automatic rule discovery is on the roadmap. At the moment, Ataccama ONE does automated rule mapping based on available metadata. We also have the ability to automatically detect outliers, patterns, and anomalies. In the future, we plan to use this information to suggest rules as well to improve the results and give more transparency. To give an example, if we find a certain common pattern for a particular attribute and suddenly there is an outlier, we can suggest a validation rule, or even a remediation rule based on this discovery.
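The example in the answer above (a common pattern plus an outlier leading to a suggested validation rule) could look roughly like this. The masking scheme (digits become `N`, letters become `A`), the function names, and the 90% threshold are assumptions made for this sketch, not a description of the planned feature.

```python
from collections import Counter


def mask(value: str) -> str:
    """Turn a value into a pattern: digits -> 'N', letters -> 'A', rest unchanged."""
    return "".join(
        "N" if c.isdigit() else "A" if c.isalpha() else c for c in value
    )


def suggest_rule(values: list, min_share: float = 0.9):
    """Suggest a validation rule if one pattern covers at least `min_share` of values.

    Returns None when no pattern is dominant enough; otherwise a dict with the
    dominant pattern, its share, and the values that violate it.
    """
    if not values:
        return None
    counts = Counter(mask(v) for v in values)
    pattern, hits = counts.most_common(1)[0]
    share = hits / len(values)
    if share < min_share:
        return None
    violations = [v for v in values if mask(v) != pattern]
    return {"pattern": pattern, "share": share, "violations": violations}


# Hypothetical attribute: nine well-formed identifiers and one outlier.
values = ["123-45-6789"] * 9 + ["12345678X"]
print(suggest_rule(values))
```

The returned pattern can be read as a candidate validation rule ("value must match `NNN-NN-NNNN`"), and the list of violations shows which records a remediation rule would need to address.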

Are self-learning algorithms for data quality available for both on premises and cloud deployment?

Yes, for both the on-premises and PaaS versions. The algorithms are pre-trained to work out of the box to some extent, and then they keep improving on your particular datasets based on your usage patterns.

Deployment

Do you recommend having multiple environments for workflow & rule development, testing, and moving them into production?

We do recommend having multiple environments for testing configuration changes. However, we also recommend using just a single environment for content creation. There is a concept of logical environments in the application that allows you to catalog both production and non-production data with all their relations and separate them only by permissions and policies.

The rule can be tested on non-production data and then promoted to a production logical environment with a single click, as long as it’s done by someone with a sufficient level of permissions.

There are also separate data processing engine instances that can be dedicated to a given environment, so data does not need to cross environment boundaries.

This shared metadata platform that has access to both production and non-production metadata allows you to work more efficiently. Production users may see that there is a change planned but not finished yet. Development users will be informed of any metadata changes in production (usage, anomalies, profiles, etc.), if they have the right permissions.

Can you deploy Ataccama ONE on Microsoft Azure?

Yes, Microsoft Azure is one of our most common cloud deployment platforms.

Does Ataccama work on AWS GovCloud or Azure Government?

Yes, Ataccama ONE is not restricted to any specific services or their configuration. It requires standard virtual machines and a database server, which are available in both services.

Is Ataccama ONE compatible with a company’s existing infrastructure?

Yes, Ataccama ONE is not restricted to a specific infrastructure.

Architecture and Integration

How is data integrated between on-prem and cloud environments?

Ataccama ONE supports hybrid deployment. The core platform and metadata repository are installed in a single location, and for this discussion, we can say it’s in a cloud environment. Data processing runs on a component called the Data Processing Engine, which can be installed in multiple instances that may be in different zones.

Each processing job is then directed at one of the engines that actually has access to the data. If it is possible to get access to data directly from the cloud, then a central pool of processing engines can be used. If this is not possible because of security or performance concerns, one of the engines can be installed closer to the data: on premises or in another cloud.
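The routing idea can be sketched in a few lines. This example assumes each engine advertises the set of data sources it can reach and that, among eligible engines, the least loaded one is chosen; the `Engine` class, its fields, and the load-based tie-break are assumptions for illustration rather than the actual scheduling logic of the Data Processing Engine.

```python
from dataclasses import dataclass, field


@dataclass
class Engine:
    """A processing engine instance deployed in some zone."""
    name: str
    zone: str
    reachable_sources: set = field(default_factory=set)
    running_jobs: int = 0


def pick_engine(engines: list, source: str) -> Engine:
    """Pick the least-loaded engine that can actually reach `source`."""
    candidates = [e for e in engines if source in e.reachable_sources]
    if not candidates:
        raise LookupError(f"no engine can reach {source!r}")
    return min(candidates, key=lambda e: e.running_jobs)


# Hypothetical deployment: a central cloud pool plus one on-premises engine.
engines = [
    Engine("cloud-1", "cloud", {"s3://lake"}, running_jobs=2),
    Engine("cloud-2", "cloud", {"s3://lake"}, running_jobs=0),
    Engine("onprem-1", "datacenter", {"oracle-hr"}, running_jobs=5),
]

print(pick_engine(engines, "s3://lake").name)   # served by the cloud pool
print(pick_engine(engines, "oracle-hr").name)   # only the on-premises engine has access
```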

Does Ataccama ONE work with financial messaging standards like FIX, FpML, SWIFT MT, or ISO 20022?

Ataccama ONE can be configured to work with virtually any data source through the Ataccama ONE Desktop interface.

Can you import industry reference models such as TMForum or BIAN?

We can import existing models from standard formats, including XMI, a standard for the exchange of metadata. If the data is in an incompatible format, we can also transform it in our Ataccama ONE Desktop first to comply with our internal APIs.

That said, we prefer to derive metadata from the data itself.

How many connectors do you currently provide? Is it possible to bring or develop our own?

Ataccama ONE supports many different data connectors:

- File systems (local, S3, ADLS, HDFS), including all sorts of standard file formats (CSV, fixed width, JSON, XML, Parquet, Avro, Excel, and more)

- All common databases with available JDBC drivers

- Any big data sources available through a Spark cluster

- Proprietary connectors through our partnership with Information Builders

- Custom connectors through our generic API integration option

- Custom connectors through Java development

For some sources, we are not able to package the relevant driver directly with the tool because of licensing conflicts—in this case, you need to provide the drivers on your own. In the same way, you can switch to a different version of the driver if needed.

Can Ataccama ONE Gen2 synchronize business rules and metadata with legacy tools?

Everything we can do in Ataccama ONE is available via APIs. There is also a powerful desktop client for more sophisticated integration needs. This means we can use the Desktop environment to read metadata from legacy tools and use the APIs to populate Ataccama ONE structures.

Does Ataccama ONE automate the connection of data from external sources?

Ataccama ONE requires the definition of an external data source. This means someone needs to type in the connection details, such as the location of the database or file server, the credentials to be used to connect to it, etc. The process can be automated via APIs if there is a large number of sources that are already documented in a machine-readable format.
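As an illustration of the automated route, the sketch below turns a machine-readable inventory (here, a CSV) into one payload per data source, ready to be sent to an API. The column names, the payload fields, and the idea of referencing credentials by name are all hypothetical; the actual Ataccama ONE API and its request format are not shown here and should be taken from the product documentation.

```python
import csv
import io
import json


def build_source_payloads(inventory_csv: str) -> list:
    """Convert a CSV inventory of data sources into a list of payload dicts.

    Expected columns (hypothetical): name, type, url, credential_ref.
    """
    reader = csv.DictReader(io.StringIO(inventory_csv))
    payloads = []
    for row in reader:
        payloads.append({
            "name": row["name"],
            "type": row["type"],
            "url": row["url"],
            # Reference to a stored secret rather than a plain-text password.
            "credentialRef": row["credential_ref"],
        })
    return payloads


# Hypothetical inventory exported from an existing documentation system.
inventory = """name,type,url,credential_ref
crm_db,jdbc,jdbc:postgresql://crm.example.internal:5432/crm,crm-reader
landing_files,sftp,sftp://files.example.internal/landing,sftp-ingest
"""

for payload in build_source_payloads(inventory):
    # In a real setup, each payload would be sent to the API at this point.
    print(json.dumps(payload))
```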

Can you explain how to use your platform when the majority of data is unstructured?

Ataccama ONE works best with structured data. There is also some support for unstructured text data, including, for example, finding named entities, classification, and parsing—ways to get some structured information from the unstructured text. We can also measure basic metrics, document the unstructured data, and find similarities in it.

As for other data formats, Ataccama ONE is not able to handle audio-visual or, in general, binary data formats. Proprietary formats that are text-based can be parsed via our Generic Data Reader (suitable, for example, for legacy files with COBOL/copybook-like grammar). It is also relatively easy to add a custom integration in this case.

Can Ataccama ONE connect to Salesforce and analyze data from objects? Can I reconcile that with data from Microsoft SQL Server?

Yes, Ataccama ONE can connect to multiple source systems, including Salesforce and Microsoft SQL Server, and can reconcile data between them.

What are the Ataccama DQM platform plans for integrating with specific data validation services (e.g., Experian’s email validation service)?

Integration with third-party validation services is possible in Ataccama ONE DQM. Out of the box, we have prepared components for validation against Loqate validation services. Other providers can be added through generic web service integration options.

How does Ataccama ONE handle transactional/telematic/IoT data with millions of records every day?

Ataccama ONE can handle transactional/telematic data. With high-volume transactional data, we recommend working only with incremental data, such as the last daily partition. Full profiling may not be necessary for such data, or it can be run only occasionally to limit the compute cost. None of this prevents us from running all core functionalities, from discovery to regular rule evaluation and data quality monitoring.

Ataccama ONE also supports pushdown to Spark, which can be used to scale up processing if needed.

Performance/Non-functional/Upgrade

Does automated cataloging use a lot of resources?

It depends on the configuration. Automated discovery first loads the existing technical metadata, then it can start sample profiling, reading just a fraction of the dataset. For big data sources, we can also read just the last partition of the data, and we can push down the profiling logic to a Spark cluster if one is available. This keeps resource usage at a manageable level even when cataloging the largest data sources.

To get the maximum value, you may want to do full profiling on certain parts of your data catalog. You may also want to apply more sophisticated logic, regular monitoring projects, and automated anomaly detection. This will of course require more resources to work properly. All of this is fully scalable, and you can decide on the split yourself: which sources will just be cataloged, and which you want to scan more thoroughly and/or monitor regularly.

Is it possible to upgrade our existing deployment of Ataccama ONE to Gen2? How difficult will the process be?

Yes, you can upgrade your existing deployment to Gen2. As Gen2 brings a substantial platform redesign, each upgrade will involve both automated migration scripts for the default parts of the platform and upgrade guides for the situations where the customer created custom processes.

We recommend infrastructure review as part of the upgrade process, though we do not anticipate the need for infrastructure changes in the majority of cases. Ataccama also offers professional services to help with more complex migration needs.

We are currently engaged in contract finalization to bring Ataccama into our organization for the first time. How will this upgrade impact those negotiations?

Your process is not impacted by the announcement.

When data owners interact with Ataccama ONE via API, is encryption guaranteed end-to-end?

Yes, data in motion is always encrypted. Keys/certificates can be provided by the customer.