7 essential data quality management capabilities

At the most rudimentary level, a data quality program is just evaluating the quality of your data based on some rules or parameters you’ve set in place. You can do this with one person perusing an Excel sheet, writing SQL code to find undesirable data, and then changing it manually. However, in an enterprise setting, it’s usually better to set a system in place that can automatically improve and maintain data.

Data quality management has evolved since it was the domain of IT, DBAs, and “data geeks.” It doesn’t have to take ages to do or require high-level technicians to maintain.

That’s why when we talk about the “essential” capabilities of data quality, we consider complete systems that streamline these processes by making them automated and user-friendly. The list below covers the capabilities that have become commonplace in mature data programs worldwide.

Data profiling

While data profiling is not traditionally part of data quality management, it can automate other essential data quality capabilities. Let’s start with defining what data profiling is. Data profiling is a process that examines your source data and extracts metadata and valuable information from it. It will tell you what formats your data points are in, identify duplicate entries, determine if records are complete, etc. On top of that, data discovery will also:

- Provide basic data quality information

- Add tables to your data catalog

- Detect data structure changes automatically

- Assign terms and categories from the business glossary

- Detect and evaluate data anomalies
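As a rough illustration of the kind of metadata profiling extracts, here is a minimal sketch in Python. It is not how any particular product implements profiling; the function names (`value_pattern`, `profile_column`) and the sample records are hypothetical and invented for this example.

```python
import re
from collections import Counter

def value_pattern(value):
    """Reduce a value to a format mask: digits become 9, letters become A."""
    text = re.sub(r"[0-9]", "9", str(value))
    return re.sub(r"[A-Za-z]", "A", text)

def profile_column(rows, column):
    """Return basic profile metadata for one column of a list of dict records."""
    values = [row.get(column) for row in rows]
    present = [v for v in values if v not in (None, "")]
    counts = Counter(present)
    return {
        # Share of records where the column is filled in.
        "completeness": len(present) / len(values) if values else 0.0,
        "distinct": len(counts),
        # Values that occur more than once.
        "duplicates": sorted(v for v, n in counts.items() if n > 1),
        # How often each format mask occurs, e.g. 99999 for a 5-digit zip.
        "patterns": Counter(value_pattern(v) for v in present),
    }

# Hypothetical sample records, invented for illustration.
customers = [
    {"id": 1, "zip": "02139", "email": "ann@example.com"},
    {"id": 2, "zip": "2139", "email": "bob@example.com"},
    {"id": 3, "zip": "", "email": "ann@example.com"},
    {"id": 4, "zip": "94105", "email": None},
]

zip_profile = profile_column(customers, "zip")
email_profile = profile_column(customers, "email")
```

In this sample, the `zip` profile shows one empty value and two competing formats (`99999` and `9999`), and the `email` profile surfaces one duplicated address. Findings like these are what later feed data quality rules.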

You can start with data profiling by using it to evaluate whichever source data you’d like to understand better. For example, your app dev team would like to use a legacy data set for their project but is unsure of its content and quality.

However, data profiling can run automatically for all data sources of interest. This way, your catalog will always have up-to-date information about critical data.

If not already in place, data profiling should become a priority for you if you want to automate data quality processes because it will let you do the following:

- Catch data anomalies early

- Give business users an always up-to-date view of the data sets available in the data catalog

- Provide input for creating data quality rules

Data profiling will set the stage for the rest of your DQ processes. It will give you the information to evaluate data sources and sets, determining which are reliable and which need data remediation.

Data remediation

Source data is rarely ready for use by end-users or downstream applications. To make this data fit for purpose, you need to make some corrections. This process is called data remediation and can be broken down into the following activities:

- Cleansing: Detecting, correcting, and sometimes removing undesirable data records.

  - E.g., finding a record that fails data validation and removing it from an analysis project.

- Parsing: Breaking data elements down into smaller components.

  - E.g., a parsing algorithm could separate a single customer data record into several elements like address, zip code, first name, and last name.

- Transformation: Putting data from one format into another.

  - E.g., personal data such as addresses, names, and phone numbers very often needs its formats standardized or its misspellings corrected.

- Enrichment: Using external and internal sources to improve and complete records from incomplete sources.

  - E.g., you have customer names in your CRM. However, you need more information about them to provide better customer service and marketing, such as headquarters, addresses, and information on founders. Procuring external reference data from someone like Dun & Bradstreet and enriching your CRM data with theirs is how you would do that.
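The four activities above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the semicolon-separated input layout, the 10-digit phone format, and every function and field name here are hypothetical, and the reference data is invented rather than taken from any real provider.

```python
import re

def parse_customer(raw):
    """Parsing: split one 'First Last; street; zip' string into named fields."""
    name, street, zip_code = [part.strip() for part in raw.split(";")]
    first_name, _, last_name = name.partition(" ")
    return {"first_name": first_name, "last_name": last_name,
            "street": street, "zip": zip_code}

def standardize_phone(phone):
    """Transformation: keep digits only and format 10-digit numbers as XXX-XXX-XXXX."""
    digits = re.sub(r"\D", "", phone)
    if len(digits) != 10:
        return None  # cannot be standardized under this assumed format
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"

def cleanse(records):
    """Cleansing: drop records whose phone could not be standardized."""
    return [r for r in records if r["phone"] is not None]

def enrich(record, reference):
    """Enrichment: add reference fields without overwriting existing values."""
    extra = reference.get(record["company"], {})
    return {**extra, **record}

# Hypothetical inputs, invented for illustration.
parsed = parse_customer("Ann Lee; 12 Main St; 02139")

records = [
    {"company": "Acme", "phone": standardize_phone("(617) 555-0100")},
    {"company": "Globex", "phone": standardize_phone("555-0100")},
]
clean = cleanse(records)

reference = {"Acme": {"headquarters": "Boston", "phone": "000-000-0000"}}
enriched = [enrich(r, reference) for r in clean]
```

Note the design choice in `enrich`: values already present in the record win over reference values, so enrichment only fills gaps. Whether the external source should instead override internal data depends on which one you trust more.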

IT, BI, and data management teams typically configure data remediation as an automated process. They integrate data sets to load into the data warehouse, database, and data lake. However, data analysts and scientists often prepare data manually for their projects and tasks.

Data remediation ensures that consuming processes receive data in the format and quality that fit their purpose. Once it’s performed, you can better rely on the projects built on that data, as you’ll know all of it met the specific requirements and parameters vital to your business.

Mastering and deduplication

Another potential data quality issue is duplicate data. These records contain information about the same entity with potentially conflicting data. Once you discover duplicate data (match new data against existing data), it can be merged (combining duplicate records into one) or discarded, depending on the use case.

Data matching and merging are usually done on your stored data (e.g., customer records) through rules, algorithms, metadata, and machine learning to help determine which records should be kept, combined, or deleted. Some scenarios where you will need to match and merge data include:

- Data Migration: Migrating duplicate data will only transfer the same problems from the previous set into the new one. In fact, the target system might reject this data automatically.

- Fraud Detection and AML: Detecting suspicious activity like two people with the same social security number or checking for matches in an external blacklist of financial criminals.

Beyond the examples above, duplicate data can lead to inaccurate reporting, skew your metrics, and make it difficult to decide which record is the most reliable. It can also lead to errors in machine learning and AI. Once you have advanced matching and merging capabilities, you can use a mastering solution: a hub where you store your matched and merged data, or “golden” (master) records, so you always know your most important information is valid and complete. This is called a master data management solution.
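To make matching and merging concrete, here is a minimal sketch that groups records by a simple rule and merges each group into one golden record. The matching rule (same email, case-insensitive) and the survivorship rule (prefer the most recently updated non-empty value) are assumptions chosen for illustration; real mastering solutions typically combine several rules with fuzzy matching. All names and sample data are hypothetical.

```python
from collections import defaultdict

def match_key(record):
    """Matching rule (assumed): same email, ignoring case and surrounding spaces."""
    return record["email"].strip().lower()

def merge(records):
    """Merge duplicates: for each field keep the first non-empty value,
    preferring the most recently updated record."""
    ordered = sorted(records, key=lambda r: r["updated"], reverse=True)
    golden = {}
    for record in ordered:
        for field, value in record.items():
            if golden.get(field) in (None, "") and value not in (None, ""):
                golden[field] = value
    return golden

def build_golden_records(records):
    """Group records by match key, then merge each group into one record."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)
    return [merge(group) for group in groups.values()]

# Hypothetical CRM rows, invented for illustration (ISO dates sort as text).
crm = [
    {"email": "Ann@Example.com", "name": "Ann Lee", "phone": "", "updated": "2023-01-10"},
    {"email": "ann@example.com", "name": "A. Lee", "phone": "617-555-0100", "updated": "2024-03-02"},
    {"email": "bob@example.com", "name": "Bob Ray", "phone": "", "updated": "2022-07-19"},
]

golden = build_golden_records(crm)
```

Here the two records for Ann collapse into one golden record that takes its values from the newer row, while Bob's record passes through unchanged apart from its empty phone field being dropped.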

Data validation

Data validation ensures the validity of your data against certain criteria. It checks your data against any business rules or industry standards you need. This can come in many shapes and sizes. You might need to verify email addresses to see if they’re real and can receive mail or verify geographical addresses with a solution provider like Loqate.

Like most of these processes, data validation can be done manually on sets you already have stored or automatically on all incoming data. Automatic data validation flags outliers and other extreme or unwanted values as they arrive.

For example, suppose you are a medical company conducting clinical trials and know that all daily dose values will be between 0 and 3. In that case, you can integrate a web service that checks these values when a medical worker enters them, which prevents data outside these parameters from getting into the system. You can also validate data sets on demand when you aren’t sure of their accuracy, such as data from third-party vendors.
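The check behind the daily dose example could look something like the sketch below. It assumes the range 0 to 3 is inclusive on both ends, which the example does not state, and the function name `validate_daily_dose` is hypothetical. A real web service would wrap a check like this behind an endpoint.

```python
def validate_daily_dose(value, low=0.0, high=3.0):
    """Return (is_valid, reason) for a daily dose entered by a medical worker."""
    try:
        dose = float(value)
    except (TypeError, ValueError):
        return False, "not a number"
    # Assumption: both bounds are inclusive. NaN fails this comparison too.
    if not low <= dose <= high:
        return False, f"outside allowed range {low}-{high}"
    return True, "ok"

# Hypothetical entries, invented for illustration.
entries = ["1.5", 3, "30", "abc", None]
results = [validate_daily_dose(entry)[0] for entry in entries]
```

With these entries, only `"1.5"` and `3` pass; `"30"` is out of range, and `"abc"` and `None` are rejected as non-numeric.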

Rule implementation and data quality firewall

Data quality rules achieve a big chunk of data quality monitoring and enforcement. They define what your company sees as good and bad data from here on out and ensure that all the data you intend to use falls into the category of “good.”

Every data quality solution needs a user-friendly way to manage and implement rules for validation, cleansing, and transformation purposes. Ideally, this is a collaborative environment where business and technical users can define and implement these rules. If you’re unsure how to develop DQ rules, you can learn more about it in our How to get started with data quality article.

Your data quality rules will be stored in your central rule library. From there, they can be applied automatically or on demand. Your central rule library will also be where data experts can implement new rules, change or remove old ones, and approve or decline these changes.

Once these rules are in place, they will become your data quality firewall, a barrier between dirty data and your downstream systems. It will check all incoming data against your rule library and verify it automatically, flagging inadequate entries. After it’s fully functional, you will have real-time data validation and real-time data transformation, so anything that gets past the firewall will be high quality and fully trustworthy.
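Conceptually, a rule library plus firewall can be pictured as a set of named checks applied to every incoming record. The sketch below is a simplified illustration, not a description of any product: the rule names, the loose email pattern, and the list of known countries are all assumptions made up for this example.

```python
import re

# A tiny "central rule library": rule name -> check applied to one record.
RULE_LIBRARY = {
    "email_has_valid_shape": lambda r: bool(
        re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", r.get("email") or "")
    ),
    "country_is_known": lambda r: r.get("country") in {"US", "CA", "GB"},
}

def firewall(records, rules=RULE_LIBRARY):
    """Split incoming records into accepted ones and flagged ones.

    Each flagged entry carries the names of the rules it failed, so a
    data steward can see why it was stopped.
    """
    accepted, flagged = [], []
    for record in records:
        failed = [name for name, check in rules.items() if not check(record)]
        if failed:
            flagged.append((record, failed))
        else:
            accepted.append(record)
    return accepted, flagged

# Hypothetical incoming records, invented for illustration.
incoming = [
    {"email": "ann@example.com", "country": "US"},
    {"email": "not-an-email", "country": "US"},
    {"email": "bob@example.com", "country": "ZZ"},
]

accepted, flagged = firewall(incoming)
```

Keeping rules as named entries in one place is what lets the same rule be reused for on-demand checks and for the automated firewall, and it makes adding or retiring a rule a change to the library rather than to every pipeline.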

Issue resolution workflow

While systems like data validation, data preparation, and matching and merging can work automatically, you need a way to manage exceptions. The system you can use for this is called an issue resolution workflow. You can see an example of one below.

This tool helps data stewards and users identify, quarantine, assign, escalate, resolve, and monitor data quality issues as soon as they occur. For example, customers might call you and say they aren’t getting their monthly newsletter. Whoever receives that complaint can pass the information to a data steward, who can log and handle it.
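One way to picture such a workflow is as an issue record that moves through a fixed set of statuses. The sketch below is a hypothetical model only: the status names are borrowed from the verbs above, while the allowed transitions, the `Issue` class, and the assignee name are assumptions invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

# Allowed status transitions (assumed), loosely following the steps named above.
TRANSITIONS = {
    "identified": {"quarantined", "assigned"},
    "quarantined": {"assigned"},
    "assigned": {"escalated", "resolved"},
    "escalated": {"resolved"},
    "resolved": set(),
}

@dataclass
class Issue:
    description: str
    status: str = "identified"
    assignee: Optional[str] = None
    history: list = field(default_factory=list)

    def move_to(self, new_status, assignee=None):
        """Advance the issue, rejecting transitions the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status} to {new_status}")
        self.history.append(self.status)  # keep an audit trail of past statuses
        self.status = new_status
        if assignee is not None:
            self.assignee = assignee

# The newsletter complaint from the example, logged and handled by a steward.
issue = Issue("Customer is not receiving the monthly newsletter")
issue.move_to("assigned", assignee="data_steward")
issue.move_to("resolved")
```

The `history` list is what makes the "monitor" step possible: it records every status the issue passed through on its way to resolution.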

Data quality monitoring

After all your data quality systems are in place, the last step is maintaining data quality indefinitely. You’ll need to use the criteria set by your business rules and make an overall and consistent assessment of the quality of your data.

This is called data quality monitoring: a capability that crawls data sets looking specifically for quality issues. Once finished, it gives you a data quality report describing how complete the data is, whether the desired formats are present and uniform, whether all the data is valid, and so on. You can also expect a data quality score that puts a numeric value on the quality.
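There is no single standard formula for a data quality score. As one possible sketch, the Python below reports completeness per required field and validity per rule, then averages all of those ratios into a score out of 100. The equal weighting, the function name `quality_report`, and the sample data are assumptions made for illustration.

```python
def quality_report(records, required_fields, rules):
    """Summarize completeness and validity, plus one overall score (0-100)."""
    total = len(records)
    if total == 0:
        return {"completeness": {}, "validity": {}, "score": None}
    # Share of records in which each required field is filled in.
    completeness = {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / total
        for f in required_fields
    }
    # Share of records that pass each rule.
    validity = {
        name: sum(1 for r in records if check(r)) / total
        for name, check in rules.items()
    }
    metrics = list(completeness.values()) + list(validity.values())
    # Assumption: every metric carries equal weight in the overall score.
    score = round(100 * sum(metrics) / len(metrics), 1) if metrics else None
    return {"completeness": completeness, "validity": validity, "score": score}

# Hypothetical records and rule, invented for illustration.
records = [
    {"email": "ann@example.com", "dose": 1.5},
    {"email": "", "dose": 2.0},
    {"email": "bob@example.com", "dose": 7.0},
    {"email": "cy@example.com", "dose": 0.5},
]
rules = {"dose_in_range": lambda r: 0 <= r["dose"] <= 3}

report = quality_report(records, ["email", "dose"], rules)
```

For this sample, email completeness is 0.75, dose completeness is 1.0, and dose validity is 0.75, giving an overall score of 83.3. Tracking that number over time is what reveals a sudden drop.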

Data quality monitoring should happen constantly on all incoming data. It should also be performed regularly on your stored data to maintain its integrity (how regularly is up to you). If you suddenly see a drop in data quality, you can begin an investigation to find where the problem is coming from and stop it.

Losing track of your company’s data quality can negate all the processes you worked so hard to implement. Without consistent monitoring, you would be surprised how quickly your data quality can degrade. It’s better to maintain than to rebuild from scratch.

Data observability

Once you set up data quality monitoring, you will need a way to automate it and detect data issues you didn’t predict or weren’t aware of. You can achieve this via data observability, a comprehensive and fully automated view of your company’s data.

Data observability can help with issue prevention via machine learning and pattern recognition processes like anomaly detection. It will actively scan your datasets, identify inconsistencies and outliers, and notify data owners and stakeholders. With data observability, data quality becomes proactive instead of reactive.
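Anomaly detection can be as sophisticated as a trained model or as simple as a statistical threshold. The sketch below uses the simplest option, a z-score on daily row counts, purely to illustrate the idea; it stands in for the machine learning approaches mentioned above and is not one of them. The threshold of 3 standard deviations, the function name, and the sample counts are assumptions.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate normal behavior
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a constant history is unusual
    return abs(latest - mu) / sigma > threshold

# Hypothetical daily row counts for one table, invented for illustration.
daily_row_counts = [1000, 1020, 990, 1010, 1005, 995, 1015]

sudden_drop = is_anomalous(daily_row_counts, 400)
normal_day = is_anomalous(daily_row_counts, 1012)
```

A load of 400 rows against a history hovering around 1,005 is flagged, while 1,012 is not. No one had to write a rule saying "expect about 1,000 rows per day"; the expectation is learned from the data, which is what makes this approach useful for issues you didn't predict.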

Conclusion

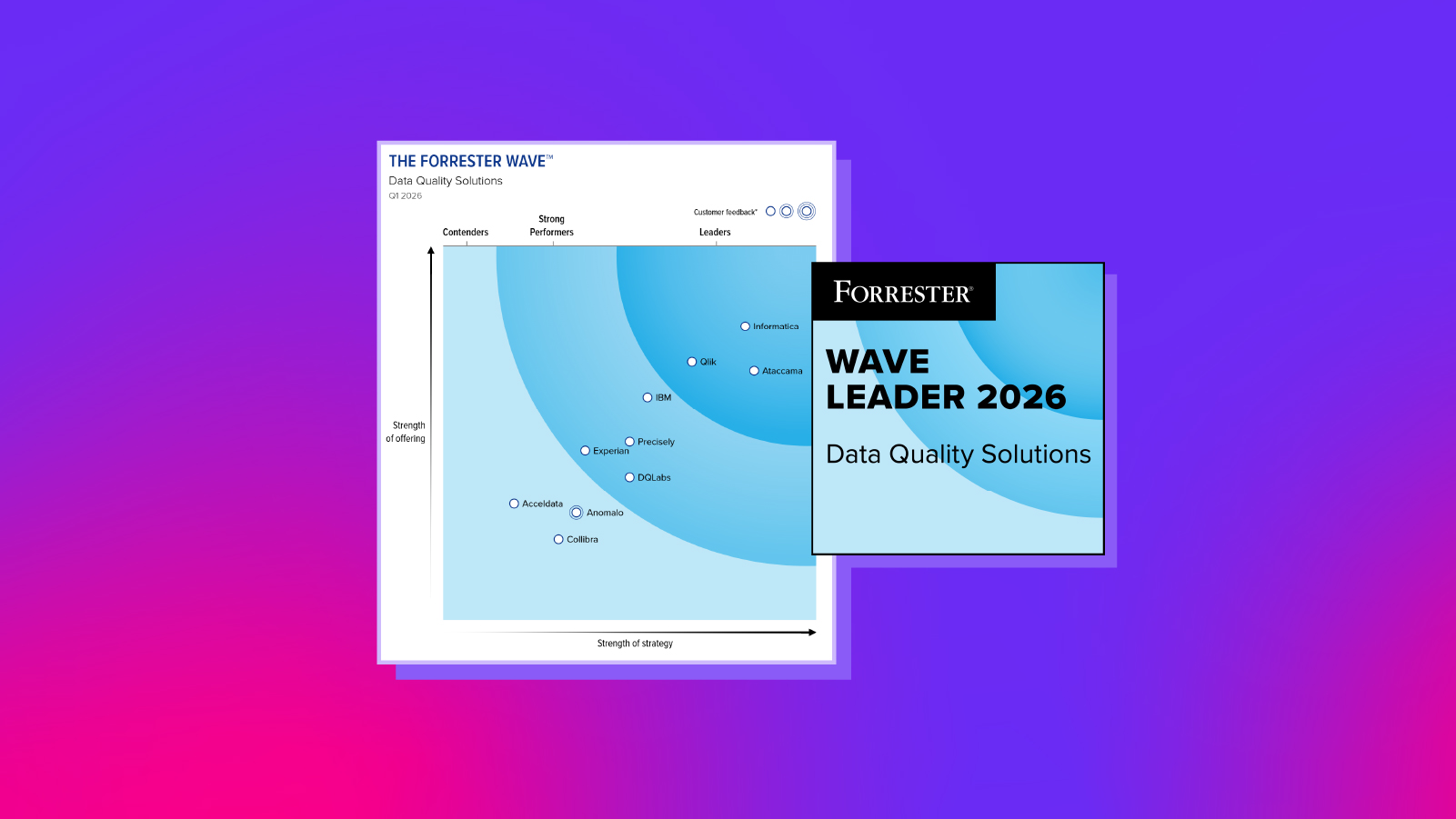

Look no further if you’re looking for all these capabilities in one solution. Ataccama ONE has everything you need from a data management tool. Check out our platform page!