How to get started with data quality

If you’re reading this, your company might be experiencing some of the common symptoms of a poor data quality framework. Maybe it takes an excessive amount of time to prepare data, customers and business users are complaining about inefficiencies or gaps in information, or staff members don’t trust the data they’re working with. Whatever the case, you’ve come to the same conclusion: it’s time to start with data quality management.

In our experience, these are the three things you should do first:

- Determine your current goals and scope

- Profile data

- Start a data quality program: Improve data and monitor data quality

Taking these first steps will give you a chance to provide value fast, get more stakeholders behind you, and secure funding in the future.

1. Determine your current goals and scope

Before beginning a data quality (DQ) initiative, the first question you need to ask yourself is, “What am I trying to achieve right now?” The reasoning behind your initiative will directly impact the scope and scale of the processes you set in place.

To stay focused, follow this reasoning:

- Determine what business process you are trying to fix.

- Understand what data sources contribute to that business process.

- Identify critical data elements (CDEs) within those data sources and specific data sets.

Set the scope of your initiative

There are two main types of data quality initiative: tactical and strategic.

If you’re starting with DQ for tactical reasons, you probably have a specific issue that you’re trying to fix. For example, maybe the marketing department has reported that 50% of newsletter emails aren’t reaching clients, or that campaign performance is declining. The reason could be that some process has affected customer data.

On the other hand, a strategic initiative will focus on building systems to achieve enterprise-wide higher quality data. In addition to fixing urgent data quality problems, it will result in a data quality framework for preventing the deterioration of data quality.

Focus your effort on specific data

Whether your focus is tactical or strategic, you will need to determine which data you want to focus on initially. While it’s possible to begin an enterprise-wide initiative for all of your data, it might be easier and more efficient to pick the most valuable and essential data at your company and start there. These pieces of data are typically referred to as critical data elements (CDEs).

In the case of our marketing department’s troubles, the CDEs would probably be customers’ first and last names, email addresses, postal addresses, demographic attributes, and purchase data.

However, data landscapes are so diverse from company to company that it’s hard to make generalizations about universal CDEs. It depends on the data consumer, the type of users, and who will have access to the data. For example, a data scientist working for the marketing team at a bank can have entirely different data priorities from someone on the product development team.

How to identify critical data elements

If you want help determining your company’s CDEs, try the following ideas:

- Determine if CDEs have already been identified. You might already have data elements labeled as CDEs. If your company has a central data catalog, then finding them should be relatively easy. If not, you might be in for a lot of legwork gathering information about data sources, models, specifications, building reports, etc., to find your CDEs. Having readily available data lineage would help here too.

- Identify key business/reporting requirements. Another great way to find your CDEs is by determining what regular reports your organization generates. You can talk to business users and middle management to find out what these are. For example, maybe your business needs regular updates on the number of new accounts per region. The attributes of the source data used to determine this number could be considered CDEs.

2. Profile data

Now that you’ve found your company’s CDEs, it is time to take a look at what data is actually stored in them. You could inspect the sources containing CDEs with the naked eye, but it would take you ages to dig through the source databases, files, and data lakes directly. By using a data profiling tool, you can spare yourself time and effort. Many data profilers are also available for free.

Data profiling is a process that recognizes:

- Data domains (customer data, etc.)

- Data format and patterns

- Data value distributions and abnormalities

- Completeness of the data

- Common data errors such as duplicate and empty values
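
To make the list above more concrete, here is a minimal sketch of what a profiler computes for a single column, using only the Python standard library. The function names `to_pattern` and `profile_column`, the sample values, and the pattern notation (letters become `A`, digits become `9`) are assumptions made for this illustration and do not represent the output of any particular profiling tool.

```python
import re
from collections import Counter


def to_pattern(value: str) -> str:
    """Reduce a value to a coarse format pattern: letters -> A, digits -> 9."""
    pattern = re.sub(r"[A-Za-z]", "A", value)
    return re.sub(r"[0-9]", "9", pattern)


def profile_column(values):
    """Return basic profiling statistics for a list of raw column values."""
    total = len(values)
    # Treat None and whitespace-only strings as empty values.
    filled = [v.strip() for v in values if v is not None and v.strip()]
    counts = Counter(filled)
    # Every occurrence beyond the first one counts as a duplicate.
    duplicates = sum(c - 1 for c in counts.values() if c > 1)
    return {
        "total": total,
        "empty": total - len(filled),
        "completeness": len(filled) / total if total else 0.0,
        "distinct": len(counts),
        "duplicates": duplicates,
        "patterns": Counter(to_pattern(v) for v in filled),
    }


# Hypothetical sample of a postal-code column.
postal_codes = ["10115", "10115", "", None, "1011A", "80331"]
report = profile_column(postal_codes)
print(report)
```

In this sample, the rare pattern `9999A` next to the dominant `99999` is the kind of abnormality a profiler would surface for review.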

Here is an excerpt of data profiling results:

After receiving this information you’ll have basic data quality insights into your CDEs. Let’s say your marketing department is curious why their email campaign isn’t reaching customers. After profiling several data extracts, you might find many email addresses in invalid formats. You might also find out that 30% of your customer records don’t have an associated email address.

These findings suggest that:

- Source email data needs to be validated before it enters your business systems and cleansed before it reaches the marketing department.

- Data source owners need to investigate why 30% of email addresses are missing.

Data profiling can also help you determine the strength of your data sources. After profiling several data sets from one source, if errors keep showing up, you can take proactive measures to prevent them in the future.

Data profiling: the bottom line

At this point, without any monetary investment, you have already identified some sources of data quality problems for a specific business process or your organization in general. You have also identified ways to improve data quality and shown why it’s important. This is a great way to show value early and establish trust in what you’re doing to get buy-in.

3. Start a data quality program: Improve data and monitor data quality

Now that you have prioritized your critical data and understood the main issues with it, you need to do three things:

- Fix the most urgent issues as soon as possible

- Come up with metrics and methods for measuring data quality

- Monitor data quality problems

Fix what can be fixed now

At this stage, you can begin with some of the most common DQ actions on a data set, like cleansing, transforming, or standardizing the data. A quick and easy solution could be using SQL, Excel, or Python to cleanse a target data set or even some source data. However, if you want to scale and reuse your work, you need a proper data quality solution.
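
As an example of such a quick fix, the following sketch cleanses a list of email addresses in plain Python. The function name `cleanse_emails` and the sample data are hypothetical. Lowercasing the whole address is also an assumption: it suits most mail systems in practice, but the part before the `@` can technically be case-sensitive.

```python
def cleanse_emails(raw_emails):
    """Trim whitespace, lowercase, drop empty values, and remove duplicates.

    The order of first occurrences is preserved.
    """
    seen = set()
    cleaned = []
    for raw in raw_emails:
        if raw is None:
            continue
        # Assumption: lowercasing the full address is acceptable here.
        email = raw.strip().lower()
        if email and email not in seen:
            seen.add(email)
            cleaned.append(email)
    return cleaned


# Hypothetical extract with padding, mixed case, and empty entries.
raw = ["  Anna@Example.com", "anna@example.com ", None, "", "bob@example.org"]
cleaned = cleanse_emails(raw)
print(cleaned)  # ['anna@example.com', 'bob@example.org']
```

A one-off script like this works for a single extract, but it has to be rerun and maintained by hand for every new data set.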

Modern data quality software can automate a lot of work for you: from data profiling and finding issues with data to monitoring data quality and preventing bad data from entering your systems in the first place.

Create metrics and data quality rules

To maximize the effort you’ve put in, you need to think long-term and make data quality management a permanent part of business processes in your organization. One thing you can’t do without in this endeavor is data quality rules.

What are data quality rules? These are conditions that you want your data to satisfy. For example, in the case of email addresses, you might want to create several rules:

- Rule 1 will check that the address is not empty and that its format and structure are valid

- Rule 2 will check the domain against an existing list of allowed domains
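
The two rules above could be sketched as plain Python functions. The regular expression is deliberately simplified, and the allowed-domain list and function names are hypothetical. Real email syntax is more permissive than this pattern, so treat it as an illustration and not as a complete validator.

```python
import re

# Simplified pattern: something@something.tld (an assumption for this sketch).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical list of allowed domains.
ALLOWED_DOMAINS = {"example.com", "example.org"}


def rule_format(email):
    """Rule 1: the value is not empty and looks like an email address."""
    return bool(email) and EMAIL_PATTERN.match(email.strip()) is not None


def rule_allowed_domain(email):
    """Rule 2: the domain part is on the list of allowed domains."""
    if not rule_format(email):
        return False
    domain = email.strip().rsplit("@", 1)[1].lower()
    return domain in ALLOWED_DOMAINS


for candidate in ["anna@example.com", "anna@example", "", "bob@other.net"]:
    print(repr(candidate), rule_format(candidate), rule_allowed_domain(candidate))
```

Keeping each rule as a small function that returns true or false makes it easy to reuse the same rule across several data sources.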

Modern DQ tools can also find problems in your data without rules by detecting anomalies and outliers. They can also suggest rules. However, if you don’t have this kind of solution, you will need to develop rules yourself. There are two great sources for DQ rule creation: your insights from data profiling and data consumers.

Use your findings from data profiling

You can translate what you learn from profiling into DQ rules. If profiling reveals problematic sources within the data pipeline that lead to DQ issues, you can create rules that will help prevent those issues in the future. If your external data sources are providing data in too many different formats, you can standardize entry options so that incorrect formats are impossible.

You can also use profiling to verify rules you’ve created elsewhere. Going back to our email campaign example, profiling could reveal how many of your source systems currently format email addresses correctly, which could lead you to create a rule or format that standardizes all entries.
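
The standardization idea in this section can be sketched for a date field that arrives in several formats. The list of accepted formats and the function name `standardize_date` are assumptions for this example. In practice, the list would come from the patterns your own profiling reveals, and ambiguous formats (day-first versus month-first) need a decision per source.

```python
from datetime import datetime

# Hypothetical formats observed during profiling, tried in this order.
KNOWN_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%m/%d/%Y")


def standardize_date(raw):
    """Return the date in ISO 8601 form (YYYY-MM-DD), or None if unrecognized."""
    text = raw.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            # This format did not match; try the next one.
            continue
    return None


for value in ["2023-03-07", "07.03.2023", "03/07/2023", "March 7"]:
    print(value, "->", standardize_date(value))
```

Values that come back as `None` are candidates for a new rule or for a conversation with the owner of the source system.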

Talk to data consumers and data experts

While data profiling is a very useful exercise, it will not reveal the requirements that the users of the data have. They know exactly how the data should be prepared for them to work with it. Additionally, IT engineers, data architects, and data engineers will tell you about data requirements from a system integration perspective. You will learn which formats are required by which APIs, along with other technical requirements.

Use rules to monitor data quality

Now that you have created data quality rules, you can apply them to various data sources to monitor their data quality on a continuous basis. This way, you will always have an overview of the state of your data and be able to track the progress of your data quality efforts.
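
A minimal sketch of rule-based monitoring might compute the share of values that pass each rule per source and flag anything below a threshold. The rules, source names, sample values, and the 0.9 threshold are all hypothetical, and the sketch does not reflect how any specific DQ product implements monitoring.

```python
def not_empty(value):
    """Pass if the value is present and not just whitespace."""
    return value is not None and value.strip() != ""


def has_at_sign(value):
    """Pass if the value is present and contains an @ sign."""
    return not_empty(value) and "@" in value


# Hypothetical rule set applied to an email attribute.
RULES = {"email_not_empty": not_empty, "email_has_at_sign": has_at_sign}


def pass_rates(values, rules):
    """Return the share of values passing each rule (0.0 to 1.0)."""
    total = len(values)
    return {
        name: (sum(1 for v in values if rule(v)) / total if total else 0.0)
        for name, rule in rules.items()
    }


def monitor(sources, rules, threshold):
    """Return (source, rule, rate) for every rule scoring below the threshold."""
    alerts = []
    for source_name, values in sources.items():
        for rule_name, rate in pass_rates(values, rules).items():
            if rate < threshold:
                alerts.append((source_name, rule_name, rate))
    return alerts


# Hypothetical email extracts from two source systems.
sources = {
    "crm": ["anna@example.com", "bob@example.org", "carol@example.com", "dan@example.org"],
    "webshop": ["eve@example.com", "", "frank.example.com", None],
}
alerts = monitor(sources, RULES, threshold=0.9)
print(alerts)
```

Running the same check on a schedule and storing the rates over time is what turns a one-off measurement into a trend you can report on.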

Here is how mapping data quality rules works in Ataccama ONE:

After you are done mapping data quality rules to your CDEs and other data attributes of choice, you can start monitoring data quality and getting regular reports.

The future: scaling data quality management

You have taken the first important steps toward data quality and hopefully shown value to internal stakeholders. Use these next steps to expand the reach of your initiative and make data quality management pervasive in your organization.

Expand the project to other CDEs and source systems

The way you handle the quality of your CDEs will set the stage for an enterprise-wide DQ initiative covering all of your data. You can reuse a lot of what you implemented for the CDEs, such as processes, stewards, some of the data rules, the configuration of monitoring projects, and data transformations, so you don’t have to start from zero with new or remaining data sets.

Expanding your solution over time is worth it because you can discover valuable data sets that went unnoticed because their data quality was so poor. Shifting your focus from tactical to strategic will reveal benefits you might not have been aware of. It will also enrich the outputs of your data quality initiative beyond the system or process you originally targeted.

Create automated data processes

Set up the following data quality processes:

- Data validation: Check incoming data against business rules and prevent bad data from spreading to downstream systems.

- Data cleansing and transformation: Perform all the necessary transformations to ensure the data is fit for purpose. This will involve standardizing formats when joining data sets, deleting duplicate entries, etc.

- Data monitoring and reporting: Measure and track DQ issues and report them to the appropriate parties.

- Issue remediation: A process for dealing with bad data once you discover it (e.g., transforming, cleansing, or correcting it). You will also need to decide who is responsible for solving these issues, what should be done in each use case, what can be automated and what needs to be handled by a data steward, who can change data and who can’t, etc.

It’s important to automate these processes because then you can keep up with DQ in real time instead of working retroactively after an error is flagged. This will save your users time in preparing data and also lead to faster and more reliable data analysis.
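
As a rough sketch of the validation step, an automated gate could split incoming records into accepted ones and quarantined ones, attaching the reasons so that a data steward can remediate them. The field names `email` and `last_name`, the checks, and the function names are assumptions chosen for this example.

```python
def validate_record(record):
    """Return a list of rule violations for one record (empty list = valid)."""
    problems = []
    email = (record.get("email") or "").strip()
    if not email:
        problems.append("email is missing")
    elif "@" not in email:
        problems.append("email has no @ sign")
    if not (record.get("last_name") or "").strip():
        problems.append("last_name is missing")
    return problems


def validation_gate(records):
    """Split records into accepted ones and quarantined ones with reasons."""
    accepted, quarantined = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            # Bad records are held back instead of flowing downstream.
            quarantined.append({"record": record, "problems": problems})
        else:
            accepted.append(record)
    return accepted, quarantined


# Hypothetical incoming batch.
incoming = [
    {"email": "anna@example.com", "last_name": "Novak"},
    {"email": "", "last_name": "Smith"},
    {"email": "bob.example.org", "last_name": None},
]
accepted, quarantined = validation_gate(incoming)
print(len(accepted), "accepted,", len(quarantined), "quarantined")
```

Only accepted records continue downstream, while the quarantine list feeds the issue remediation process.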

Data Quality Management with Ataccama

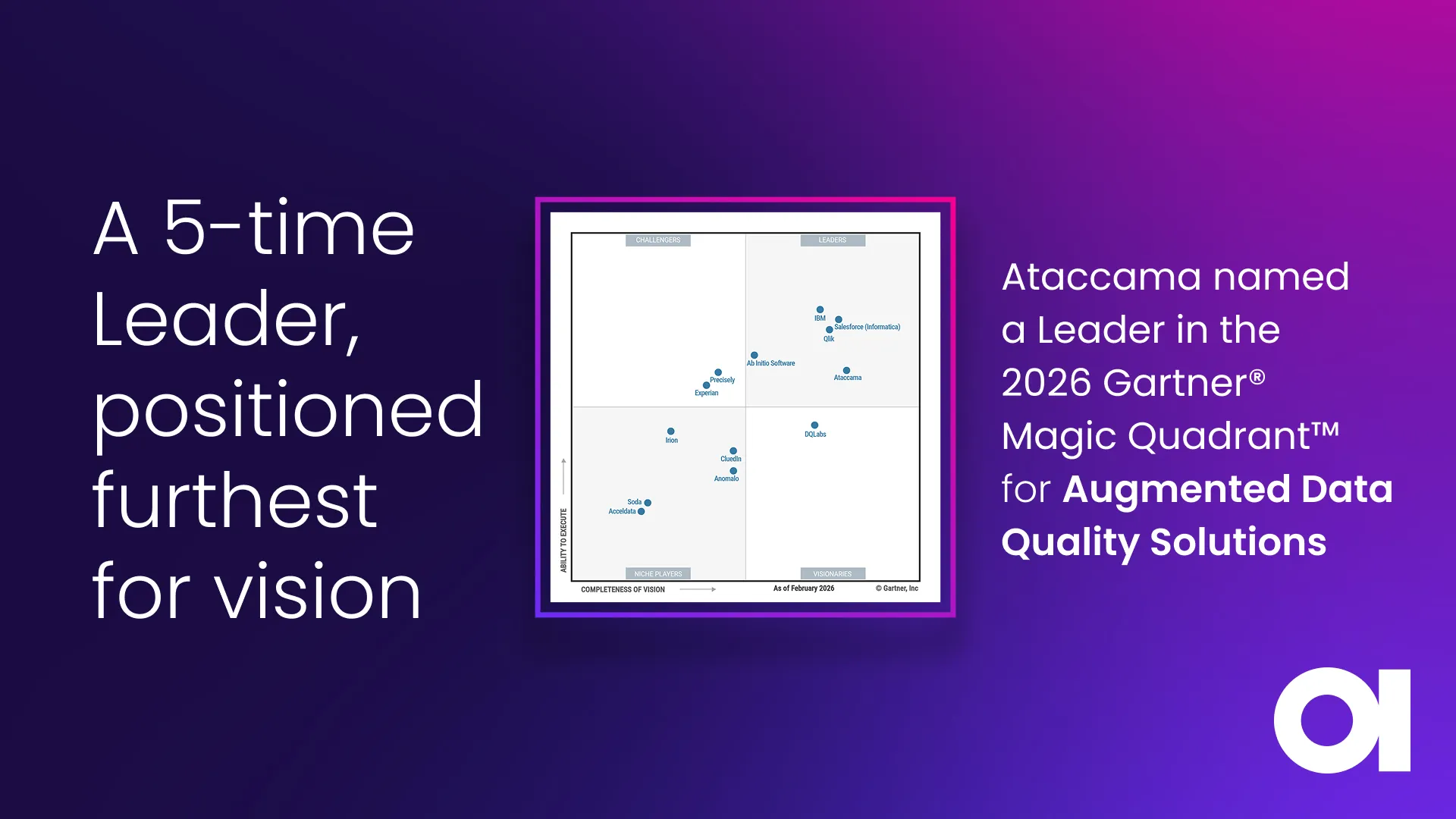

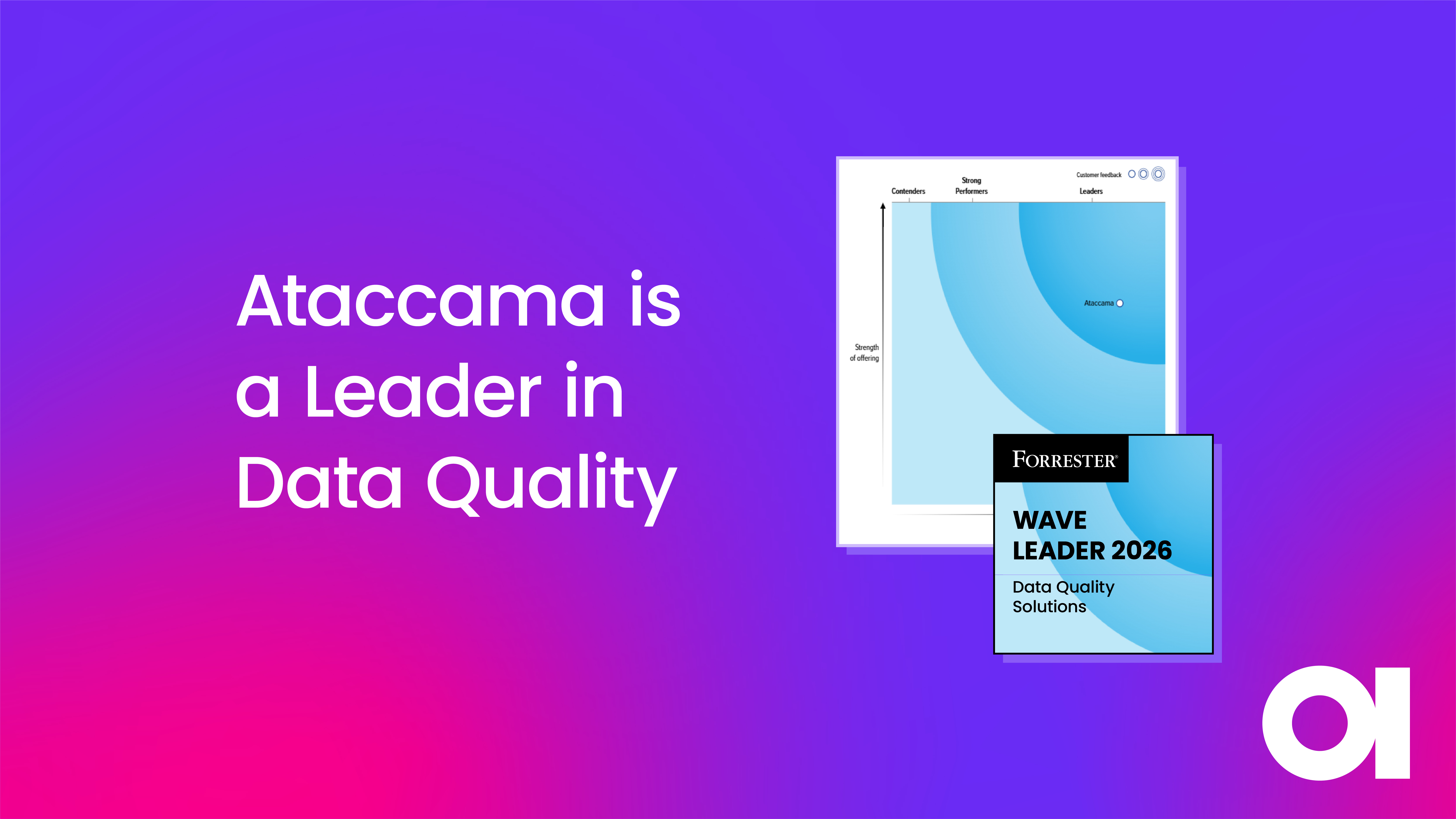

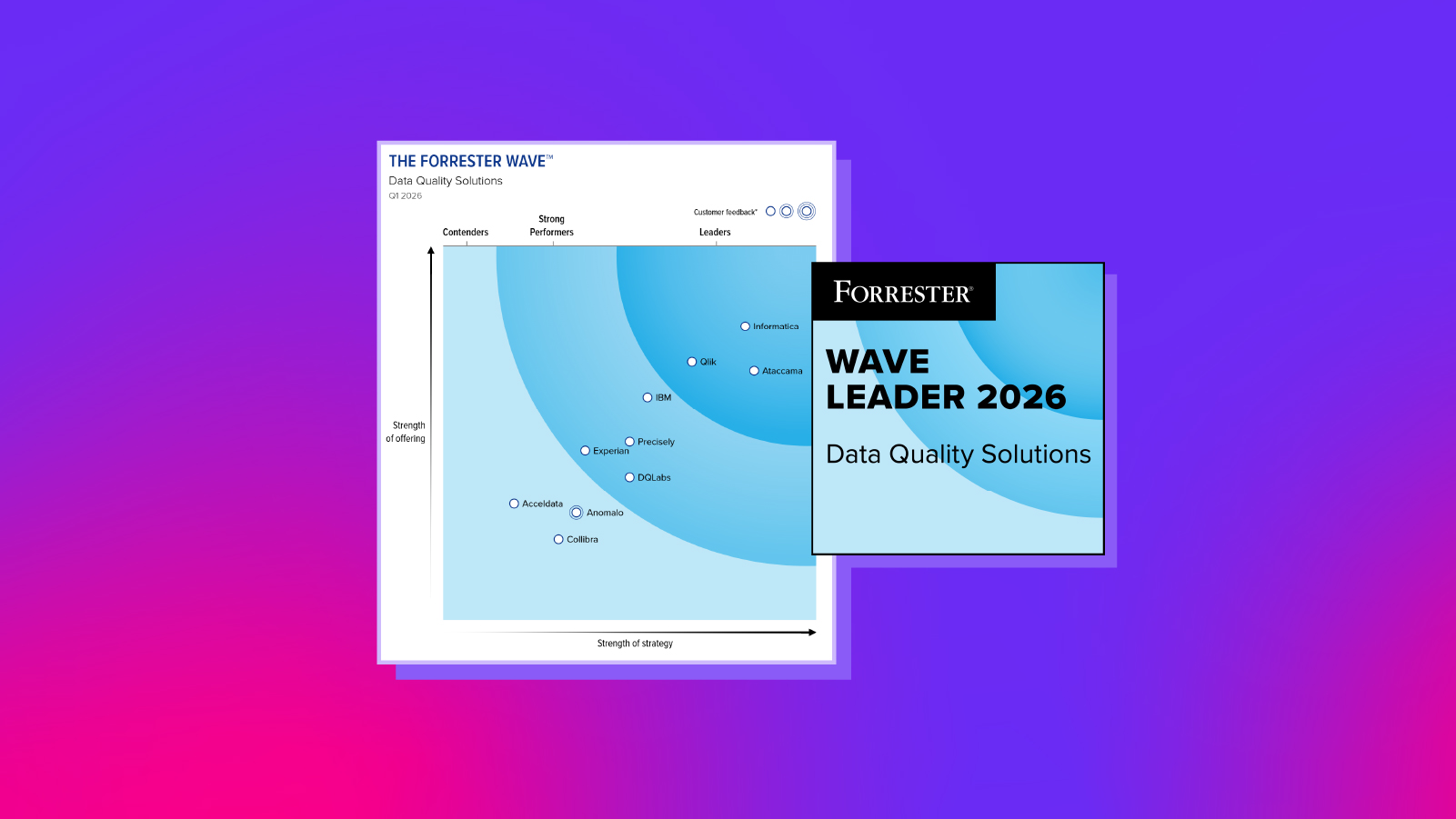

If you are looking for a flexible and user-friendly platform to roll out and scale data quality management in your organization, consider Ataccama. Not only do we have a market-leading data quality solution recognized by Gartner, but we also have a dedicated and responsive team ready to guide and help you at every stage of your data quality journey.

Get started with data quality with Ataccama

Ataccama ONE powers enterprise data quality management solutions at T-Mobile, Varo Money, Daiichi Sankyo, Raiffeisen Bank, Fiserv, and many others.