E2E DQ: A Holistic Approach to Continuous DQ Management

Data quality monitoring (DQM) is the first step in the data quality lifecycle. It allows you to learn about the accuracy and reliability of your data. This level of trust and understanding helps avoid failed analytics projects, compliance issues, poor user experience, and other data-related issues. If you’d like to learn more about DQM, check out our previous blog post.

However, starting with DQM is just the first piece of the much larger data quality puzzle. After you’ve implemented a successful DQM process, there are still several tasks that you need to address before arriving at reliable, usable, high-quality data.

But how do you get there as a company? By implementing end-to-end data quality (E2E DQ). Read on for the most important steps to manage the quality of your data, end-to-end, in a streamlined and effective way.

Step 1: Document and Define

Before you can begin assessing and improving data quality, you need a comprehensive understanding of all the data stored in your systems. You can do this by documenting all your available assets and defining the DQ metrics you want to focus on. These often include aspects like:

- Accuracy (the percentage of data values that match the expected or reference values)

- Completeness (the percentage of records that have all the required fields populated)

- Consistency (the percentage of data assets that meet the predefined rules)

- Freshness (how frequently data is updated and whether it is current enough for consumption)
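To make these definitions concrete, here is a minimal Python sketch of how the four metrics could be computed over a handful of records. The sample records, field names, reference values, and the 24-hour freshness window are all assumptions made for illustration; freshness is simplified to a check on the age of the last update, and a real DQ tool would typically derive its rules from your rule library instead.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical sample records; field names are illustrative only.
RECORDS = [
    {"id": 1, "email": "ann@example.com", "country": "CZ", "price": 10.0},
    {"id": 2, "email": None, "country": "CZ", "price": 12.5},
    {"id": 3, "email": "bob@example.com", "country": "Czechia", "price": 9.0},
    {"id": 4, "email": "eve@example.com", "country": "US", "price": -1.0},
]
# Hypothetical trusted reference values for the "country" field, keyed by id.
REFERENCE_COUNTRY = {1: "CZ", 2: "CZ", 3: "CZ", 4: "US"}
REQUIRED_FIELDS = ("id", "email", "country", "price")


def completeness(records, required):
    """Percentage of records that have every required field populated."""
    complete = sum(
        all(r.get(f) not in (None, "") for f in required) for r in records
    )
    return 100.0 * complete / len(records)


def accuracy(records, reference, field):
    """Percentage of values in `field` that match the reference value."""
    matches = sum(r[field] == reference[r["id"]] for r in records)
    return 100.0 * matches / len(records)


def consistency(records, rule):
    """Percentage of records that satisfy a predefined rule."""
    return 100.0 * sum(bool(rule(r)) for r in records) / len(records)


def is_fresh(last_updated, max_age, now=None):
    """True if the data was updated within the allowed time window."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= max_age


print(completeness(RECORDS, REQUIRED_FIELDS))               # 75.0
print(accuracy(RECORDS, REFERENCE_COUNTRY, "country"))      # 75.0
print(consistency(RECORDS, lambda r: r["price"] > 0))       # 75.0
print(is_fresh(
    datetime(2024, 1, 1, 12, tzinfo=timezone.utc),
    timedelta(hours=24),
    now=datetime(2024, 1, 2, tzinfo=timezone.utc),
))                                                          # True
```

Expressing each metric as a percentage keeps the results comparable across data sets of different sizes, which matters once you start tracking them over time.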

Clear and comprehensive documentation and definition outline the structure, meaning, and usage of data within an organization. This is best done by implementing a business glossary that contains all the metadata and standardizes the definition of each data element. Lineage, transformation rules, usage guidelines, and other DQ processes should be documented as well, because they are essential for maintaining DQ, consistency, and a shared understanding of data across the organization.

Documenting and defining data also means creating detailed records that describe various aspects of the data, e.g., source, structure, ETL processes, and usage. This information serves as a reference guide for data users, analysts, and other stakeholders and ensures everyone knows how to interpret and use data correctly.

Step 2: Assess

Once you have documented your data and defined the business rules and DQ metrics, you need a way of keeping a pulse on your data systems. Data quality monitoring will help you understand your data by consistently assessing its quality against your defined metrics. Once your monitoring tool uncovers an issue, you can begin taking steps to resolve it and improve the data. You can learn more about monitoring, why it’s essential, and how it works in our previous blog post.
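As a rough illustration of what "assessing quality based on your defined metrics" can look like, the sketch below compares measured metric values against minimum thresholds and reports every breach. The threshold values, metric names, and the `evaluate` function are hypothetical; a monitoring tool would normally run such checks on a schedule and route the results to the data owners.

```python
# Hypothetical thresholds: minimum acceptable percentage per metric.
THRESHOLDS = {"completeness": 95.0, "accuracy": 98.0, "consistency": 90.0}


def evaluate(measured, thresholds):
    """Return (metric, measured_value, minimum) for every breached metric.

    A metric that was not measured at all is reported as a breach too,
    since missing measurements usually mean the check did not run.
    """
    issues = []
    for metric, minimum in thresholds.items():
        value = measured.get(metric)
        if value is None or value < minimum:
            issues.append((metric, value, minimum))
    return issues


# Example run with made-up measurements.
for metric, value, minimum in evaluate(
    {"completeness": 97.0, "accuracy": 91.2}, THRESHOLDS
):
    print(f"{metric}: measured {value}, expected at least {minimum}")
```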

Step 3: Improve

Now that you’ve used DQM to uncover your data issues, it’s time to act. You can learn more about typical data quality issues and how to fix them in this blog. Unfortunately, this is where many DQ initiatives get stuck or encounter obstacles. Acting on the issues in a governed and auditable way at all times, ideally also in a single tool and single workflow, is not something that all tools are built for.

You can simplify this process by employing a tool that utilizes AI-driven capabilities. Once automation and AI assistance are implemented, you can avoid manual workarounds and fixes, such as:

- Manually combing through large data sets to fix duplicates.

- Manually fixing incomplete information or inconsistent formats.
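To show the kind of work that automation takes over, here is a minimal rule-based sketch (not an AI model) that normalizes inconsistent formats and then removes duplicates. The field names and the choice of the normalized email address as the duplicate key are assumptions for illustration; production matching logic is usually far more tolerant of variation.

```python
import re


def normalize_phone(raw):
    """Keep digits only, so differently formatted numbers compare equal."""
    return re.sub(r"\D", "", raw or "")


def normalize_record(record):
    """Return a copy of the record with consistent formatting."""
    return {
        # Collapse repeated whitespace and use title case for names.
        "name": " ".join((record.get("name") or "").split()).title(),
        "email": (record.get("email") or "").strip().lower(),
        "phone": normalize_phone(record.get("phone")),
    }


def deduplicate(records):
    """Normalize records and keep the first one per email address."""
    seen = set()
    unique = []
    for rec in map(normalize_record, records):
        key = rec["email"]
        if key and key in seen:
            continue  # duplicate of a record we already kept
        seen.add(key)
        unique.append(rec)
    return unique


# Hypothetical input with one duplicate hidden by formatting differences.
customers = [
    {"name": "ann  novak", "email": " Ann@Example.com ", "phone": "+420 777-123-456"},
    {"name": "Ann Novak", "email": "ann@example.com", "phone": "420777123456"},
    {"name": "Bob", "email": "bob@example.com", "phone": None},
]
print(deduplicate(customers))
```

Normalizing before comparing is the important design choice here: the two "Ann Novak" rows only become recognizable as duplicates once casing and whitespace are made consistent.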

The tool can even suggest which data can be improved and how, and include features like collaborative issue remediation. AI suggestions of this nature are possible thanks to the data quality rules you have already predefined. By having a tool that enables this, you give more power to business users (where appropriate, without creating compliance risks or more confusion), enable data democratization, and further promote a data-driven culture.

AI-assisted data improvement directly in a data catalog.

Additionally, having a tool that combines data quality and governance capabilities will save your engineers a lot of time. Data governance sets the stage for your DQ processes, determining how people should work with data, and providing a shared understanding of data so that everybody is aligned. By having tightly integrated governance and end-to-end data quality, you can better ensure that policies put into your governance protocols immediately apply to your data.

As your organization expands access to these capabilities and results begin to speak for themselves, you’ll also see greater trust in your data analytics projects.

Step 4: Prevent

To build trust in data, you must proactively prevent data issues, not just fight fires (fix issues after they’ve already happened and caused damage). Data engineers get fed up with constantly fixing broken data and developing workarounds instead of focusing on their core responsibilities. Some of the most prominent issues that can require a data steward’s or engineer’s attention are:

- incorrect data,

- broken ETL pipelines,

- invalid data.

A DQ firewall for issue prevention can be a great solution. It stops incorrect data from entering your company’s systems in the first place, so there is no need to fix broken pipelines over and over again. A DQ firewall uses predefined rules to determine what data can and cannot enter downstream systems.

Example of a DQ firewall: a company’s internal application uses a DQ firewall in the back end of its data-entry form. The firewall prevents product data outside a certain price range from entering the data catalog.
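The price-range example can be sketched in a few lines of Python. This is an illustrative outline under stated assumptions, not how any particular product implements a DQ firewall: the `Rule` class, the rule names, the `sku` field, and the 0.5 to 10,000 price range are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """A named, predefined check that a record must pass."""
    name: str
    check: Callable[[dict], bool]


# Hypothetical rules; the price range is an assumed example value.
RULES = [
    Rule(
        "price_in_range",
        lambda p: isinstance(p.get("price"), (int, float))
        and 0.5 <= p["price"] <= 10_000,
    ),
    Rule("sku_present", lambda p: bool(p.get("sku"))),
]


def firewall(record, rules):
    """Return the names of all failed rules; an empty list means accept."""
    return [rule.name for rule in rules if not rule.check(record)]


def ingest(records, rules):
    """Split incoming records into accepted ones and rejected ones."""
    accepted, rejected = [], []
    for rec in records:
        failures = firewall(rec, rules)
        if failures:
            rejected.append((rec, failures))  # keep the reasons for auditing
        else:
            accepted.append(rec)
    return accepted, rejected


# Made-up product submissions from the form.
products = [
    {"sku": "A-1", "price": 19.99},
    {"sku": "A-2", "price": 250_000},  # outside the allowed price range
    {"sku": "", "price": 5.0},         # missing SKU
]
accepted, rejected = ingest(products, RULES)
print(len(accepted), "accepted;", len(rejected), "rejected")
```

Returning the list of failed rule names, instead of a bare yes or no, lets the form tell the user exactly what to correct and gives data stewards an audit trail of what was blocked and why.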

Once you fully integrate the DQ firewall with your rules library, it will be able to prevent DQ issues specific to your use case, putting you in a good position to prevent problems from happening altogether.

Step 5: Extend and Scale

When building a data quality initiative, scalability is paramount. Systems are constantly evolving, and companies are always onboarding new ones. You need a plan that accommodates this volatile environment:

- A single, multi-purpose platform. You can employ a data management platform that offers a single workflow for all quality-related tasks. This approach increases efficiency (with less need to switch back and forth between apps) and streamlines maintenance efforts in the long term, becoming more cost-effective the longer you use it and the more systems you add.

If you want to see for yourself how a single, multi-purpose platform works, follow this link.

Let us help you go beyond data quality monitoring.

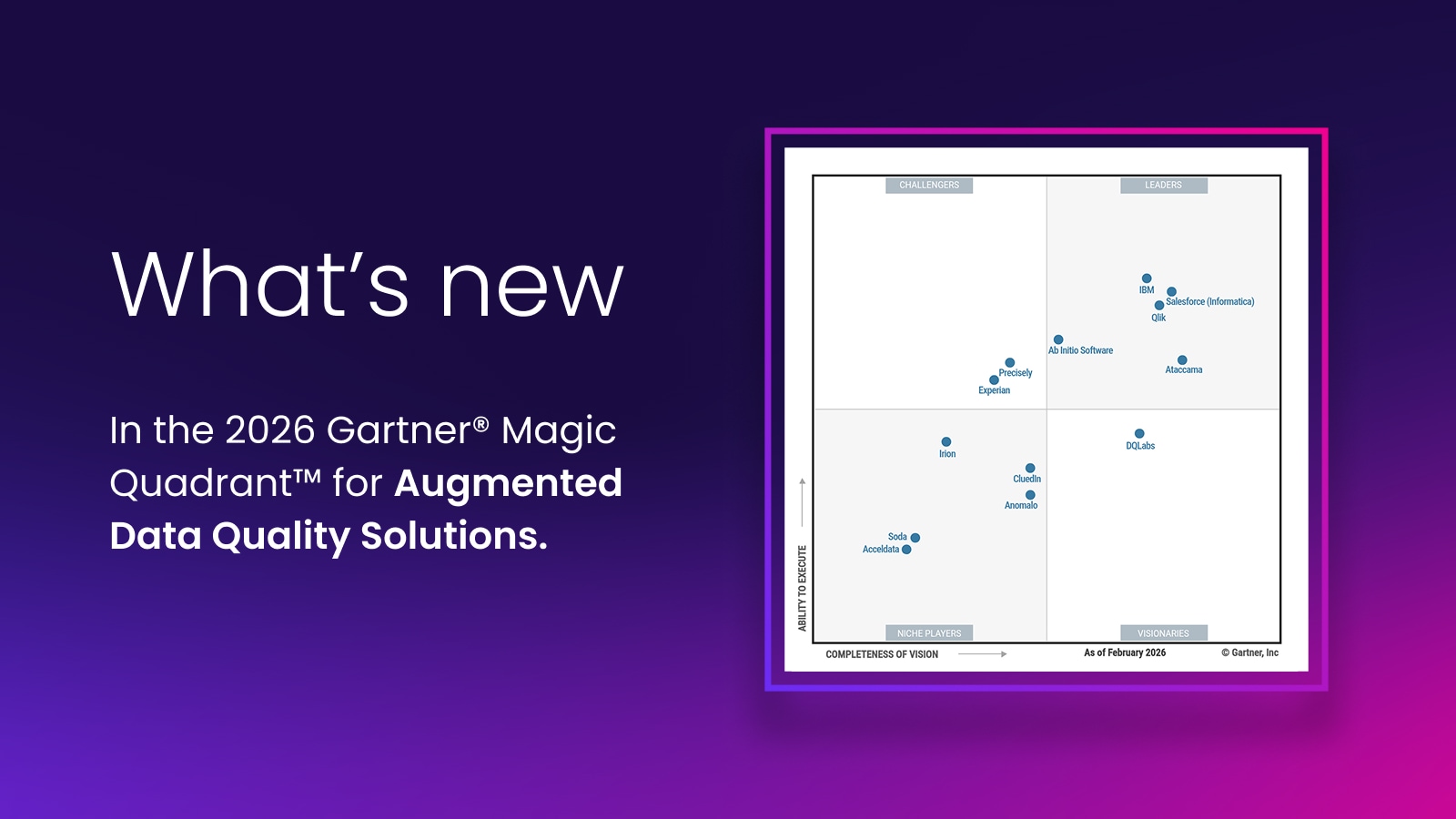

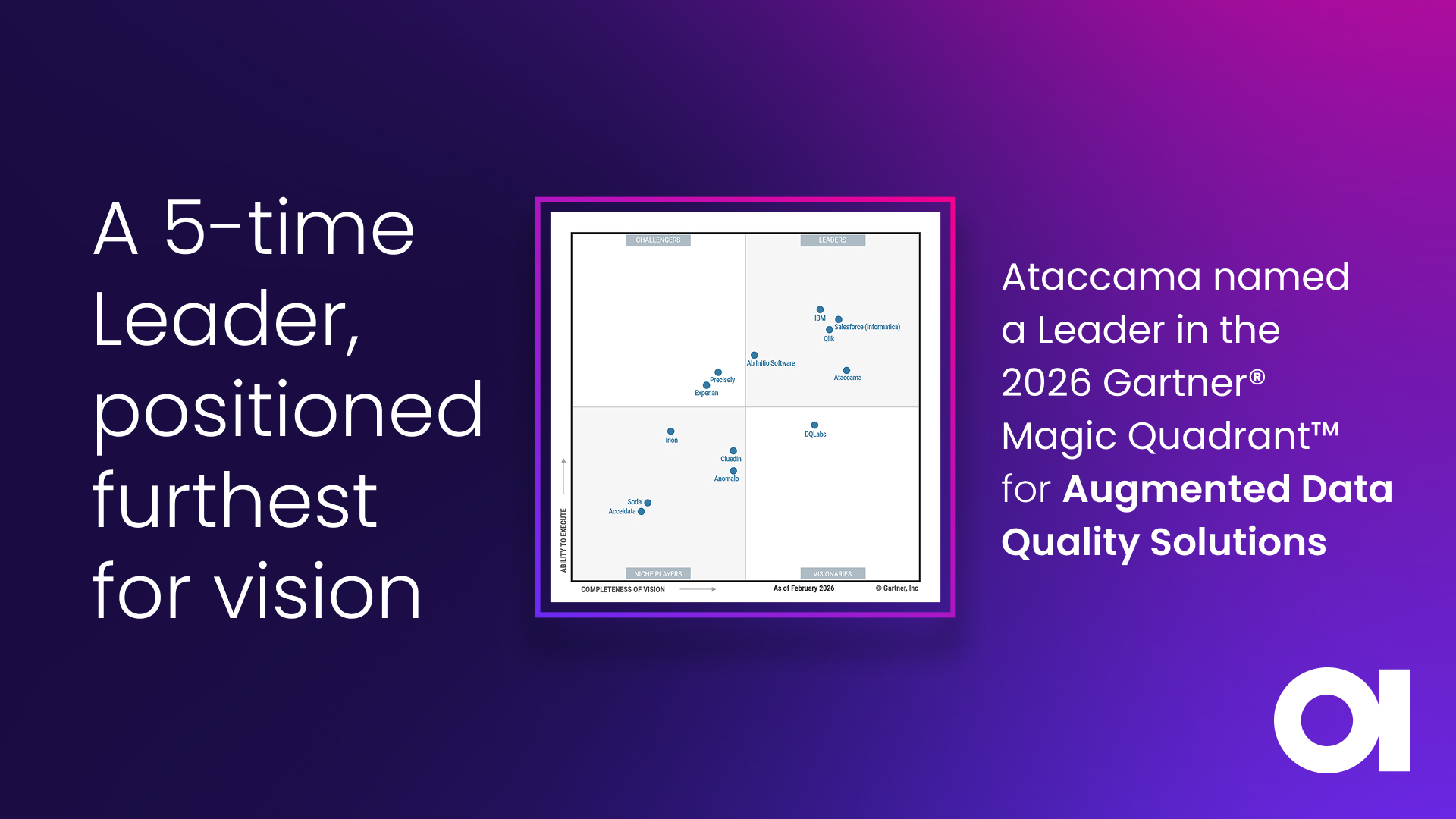

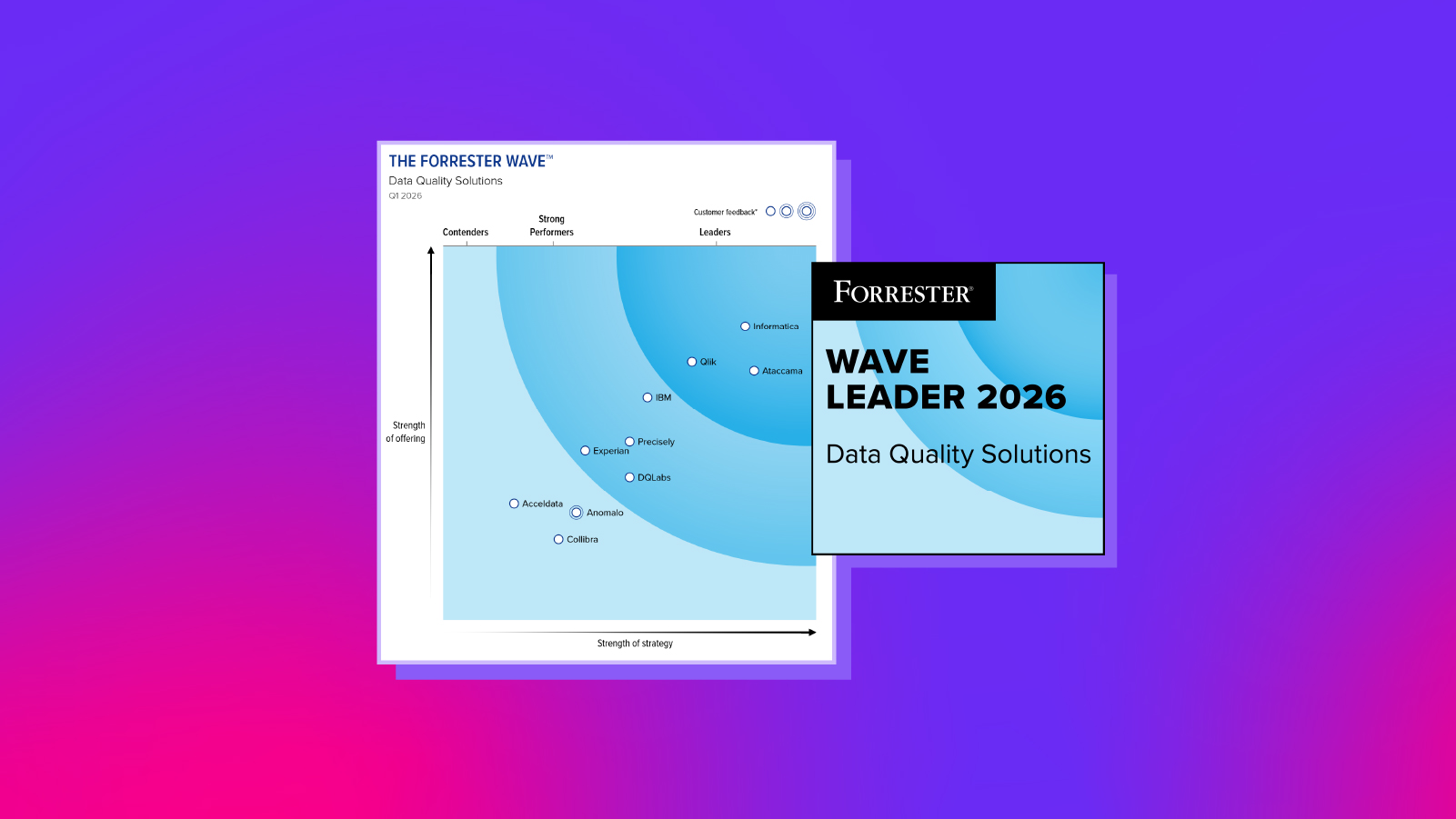

Ataccama has everything you need to set up a successful data quality initiative. Our platform helps facilitate collaboration among teams, reduces data misunderstandings, improves data accuracy and reliability, and supports compliance efforts.

We offer data quality monitoring capabilities packaged with all the features mentioned above in one self-service platform. Contact us today to find out more.