The importance of data quality for the census

From 2020 onward, the vast majority of censuses have been conducted online to address the challenges of increasingly large and diverse societies, save census bureaus millions, and increase response rates.

The United States Census is an excellent example of what digital censuses will look like in the future. The Census Bureau estimates that it will save roughly $5.2 billion by encouraging online submissions, digitizing paper submissions, and using administrative records as resources for household verification.

However, risks of poor data quality persist despite these efforts and cannot be eliminated entirely. So what steps do governments, census bureaus, and statistics offices need to take to ensure the quality of census data is as high as possible?

A bit of trivia: The word “census” is of Latin origin. During the Roman Republic, the census was a list recording all adult males fit for military service.

Why accurate census data is important

The census is used for planning, developing strategies, and making decisions. How many people of what age and educational background live in which regions of the country? The census provides these answers and more.

The results of a population census, a register census, and employment statistics present a numerical picture of the population structure, households, and families in the country, municipalities, and even smaller areas. These results form the basis for public administration measures, economic decisions, and scientific tasks. Census reports can reveal the need for commuter transport facilities and support sensible decisions about where companies settle, realistic zoning plans, and spatial planning measures.

“Our goal for every census is to count everyone once, only once, and in the right place.”

U.S. Census Bureau

With so much social policy shaped by the census, countries are doing their best to modernize, adopt data quality best practices, and assess data quality metrics even before the actual census takes place.

Modern methods of collecting the census data

Traditional methods of collecting census data are no longer practical or efficient. Why hire tens of thousands of enumerators and fill out mountains of paper if you can manage and process data digitally?

National statistical offices are increasingly switching from paper to digital forms for censuses. Digital forms decrease the chance of error because of built-in validations, format checkers, and dropdowns.

Many countries adopt a combined approach to make the transition smoother: a census that is half analog, half digital. The collected data is then combined with whatever information is stored in various national registers, such as social security or tax registers. Arguably, the more digitally advanced a country is (and the more closely it follows data quality best practices), the less data it needs to verify through the census questionnaire.

Some European countries, especially in Scandinavia, will base their next census on their registers because these are of higher quality. The Netherlands can even provide data via a “virtual” census. There, most registers are networked, and every citizen's data is linked via an anonymous personal code. When someone is born, moves, or dies, all registers are updated simultaneously, so errors can hardly creep in.

Why census data can be unreliable

Outdated siloed registers

Many existing paper-based registers are full of errors, and they are by no means able to answer all the questions that a full population inventory requires. No register reveals how many children people have or whether their parents immigrated from abroad. The registration authorities record arrivals and departures, births, and deaths, but only occasionally report them to the statistical offices. As a result, the data stored in registers might be outdated.

In addition, registers might contain conflicting information because not all countries are advanced enough to have a single (or distributed) shared data hub. Information is often duplicated across systems and then updated in one system but not the other.
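To illustrate how such conflicts could be surfaced, here is a minimal sketch that compares two registers record by record. The register names, the shared person identifier, and the field names are all made up for this example; real registers rarely share such a clean key.

```python
def find_conflicts(register_a, register_b, fields):
    """Return {person_id: {field: (value_a, value_b)}} for fields that disagree."""
    conflicts = {}
    # Only people present in both registers can be compared.
    for person_id in sorted(register_a.keys() & register_b.keys()):
        record_a, record_b = register_a[person_id], register_b[person_id]
        diffs = {
            field: (record_a.get(field), record_b.get(field))
            for field in fields
            if record_a.get(field) != record_b.get(field)
        }
        if diffs:
            conflicts[person_id] = diffs
    return conflicts


# Made-up sample data: the address was updated in one register only.
tax_register = {
    "P001": {"name": "Ana Novak", "address": "12 Mill Road"},
    "P002": {"name": "Ben Ortiz", "address": "4 High Street"},
}
social_register = {
    "P001": {"name": "Ana Novak", "address": "7 Lake Lane"},
    "P002": {"name": "Ben Ortiz", "address": "4 High Street"},
    "P003": {"name": "Cleo Park", "address": "9 Elm Court"},
}

conflicts = find_conflicts(tax_register, social_register, ["name", "address"])
print(conflicts)
```

A report like this does not say which register is right; it only shows where a follow-up check is needed.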

Census data collection method

Another reason for discrepancies in census data processing is the data collection method itself. There is a natural tendency for duplicates (the number of duplicates is one of the most common data quality metrics) and other inconsistencies to occur since the data comes from multiple sources, and respondents can fill out the questionnaire several times. Why does this happen?

For example, your partner might fill out the form without your knowledge when you have already done it yourself. Some people mistakenly think they have to complete both the online and the paper questionnaires.

Here are some other examples of the risks of poor data quality that are likely to occur:

- Non-standardized values from paper forms (names, employment industry, address, and others).

- Non-standardized values from online forms that allow custom entries in addition to lookup values. For example, a date of birth will always be in the correct format because you pick it from a calendar. A name, on the other hand, can be typed in freely even if it is not recorded in any standard name dictionary.

- Characters being misinterpreted or unreadable on paper forms (someone writes a ‘7’ that gets mistaken for a ‘1’).

- Inaccurate and made-up values (for example, someone puts their age as 200).
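Several of the issues listed above can be flagged with simple rule-based checks. The sketch below is only an illustration: the field names, the industry lookup, and the maximum plausible age are assumptions chosen for the example, not rules used by any census bureau.

```python
# Hypothetical lookup of accepted industry values.
KNOWN_INDUSTRIES = {"agriculture", "education", "healthcare", "manufacturing"}
# Assumed upper bound for a believable age.
MAX_PLAUSIBLE_AGE = 120


def validate_response(response):
    """Return a list of human-readable issues found in one questionnaire."""
    issues = []

    # Made-up or mistyped ages, such as 200, fall outside the plausible range.
    age = response.get("age")
    if not isinstance(age, int) or not (0 <= age <= MAX_PLAUSIBLE_AGE):
        issues.append(f"implausible age: {age!r}")

    # Free-text values are compared against the lookup after light cleanup.
    industry = (response.get("industry") or "").strip().lower()
    if industry not in KNOWN_INDUSTRIES:
        issues.append(f"non-standard industry: {response.get('industry')!r}")

    return issues


bad = validate_response({"age": 200, "industry": "Helthcare"})
good = validate_response({"age": 34, "industry": " Education "})
print(bad)
print(good)
```

Checks like these only flag suspicious values. Deciding whether to correct, impute, or discard them is a separate step.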

How to make census data more accurate

Raw data is not enough to produce reliable population statistics. Several important steps and data quality best practices are usually necessary to get data in better shape.

Census data digitization

We’ve already mentioned that some census data comes in paper form, and its share could be as high as 25%. It is the job of the census bureau to input these physical entries into their system and unite them with the rest of the data.

This process is called data digitization.

Census data processing

Once all of the data is available in digital form, data processing follows. Typically, this includes the following steps:

- Data profiling: Understanding the structure of the census data, the data quality metrics, and uncovering data quality issues, such as non-standard and invalid values, so actionable measures can be taken to prevent the risks of poor data quality.

- Data standardization: Creating lookups of specific data elements and standardizing values. For example, participants could write ZIP codes as “15000” or “15 000.” These entries need to be standardized so the system can recognize these values.

- Data enrichment: Connecting to other data sources, such as national data registers, to enrich and validate source data. For example, respondents entered their addresses, names, and dates of birth but forgot to include their SSN. This data can be pulled from a national register based on the values of other attributes.

- Data matching and consolidation: This involves grouping source records representing the same person and consolidating information into the so-called “golden records,” which would contain the most reliable information about the person. For example, someone accidentally fills in both the paper and digital applications, so you need to consolidate both records.

- Relationship discovery: Detecting members of the same household and other relationships.
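The standardization, matching, consolidation, and relationship discovery steps above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the field names, the exact-match key, the source priority (online before paper), and the "same address means same household" rule are all hypothetical. Production systems typically rely on fuzzy matching and far richer rules.

```python
import re
from collections import defaultdict

# Assumed priority when sources disagree: lower number wins.
SOURCE_PRIORITY = {"online": 0, "paper": 1}


def standardize(record):
    """Return a copy with normalized name, ZIP code, and address."""
    clean = dict(record)
    clean["name"] = " ".join(record["name"].split()).title()
    clean["zip"] = re.sub(r"\D", "", record["zip"])  # "15 000" -> "15000"
    clean["address"] = " ".join(record["address"].split()).lower()
    return clean


def match_key(record):
    # Naive exact-match key; an assumption made to keep the sketch short.
    return (record["name"], record["date_of_birth"], record["zip"])


def consolidate(group):
    """Merge duplicates into one golden record: first non-empty value wins."""
    ordered = sorted(group, key=lambda r: SOURCE_PRIORITY.get(r["source"], 99))
    golden = {}
    for record in ordered:
        for field, value in record.items():
            if field != "source" and value and not golden.get(field):
                golden[field] = value
    return golden


def build_golden_records(raw_records):
    groups = defaultdict(list)
    for record in map(standardize, raw_records):
        groups[match_key(record)].append(record)
    return [consolidate(group) for group in groups.values()]


def households(golden_records):
    """Group people who share a standardized address and ZIP code."""
    by_address = defaultdict(list)
    for record in golden_records:
        by_address[(record["address"], record["zip"])].append(record["name"])
    return dict(by_address)


# Made-up sample: Ana submitted both an online and a paper form.
raw = [
    {"source": "online", "name": "ana  novak", "date_of_birth": "1985-03-14",
     "zip": "15000", "address": "12 Mill Road", "phone": ""},
    {"source": "paper", "name": "Ana Novak", "date_of_birth": "1985-03-14",
     "zip": "15 000", "address": "12  mill road", "phone": "555-0101"},
    {"source": "online", "name": "Ben Novak", "date_of_birth": "2010-09-02",
     "zip": "15000", "address": "12 Mill Road", "phone": ""},
]

golden = build_golden_records(raw)
print(golden)
print(households(golden))
```

In this sample, the two submissions for Ana collapse into one record that keeps the phone number found only on the paper form, and both people end up in the same household.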

Data analysis

After data processing, census bureaus can be confident that their data is as accurate and valid as possible. At this point, the data is ready for analysis: with data quality issues resolved, census bureaus can produce population analyses and deliver statistics that others can rely on.

Conclusion

Wrong data costs citizens dearly. Social research can only work properly if it knows the size and characteristics of the population. Pension funds and health insurance companies put their existence at risk when they rely on insufficient data. Municipalities can end up with oversized infrastructure if planners work with unreliable data. Tens of billions in expenses could be saved.

Like any massive data collection project, censuses come with their challenges. With proper data quality processes, it’s easier for those in charge of census projects to ensure their data is accurate, representative, and complete. In the modern era of digital censuses, ensuring data quality is one of the most critical steps.

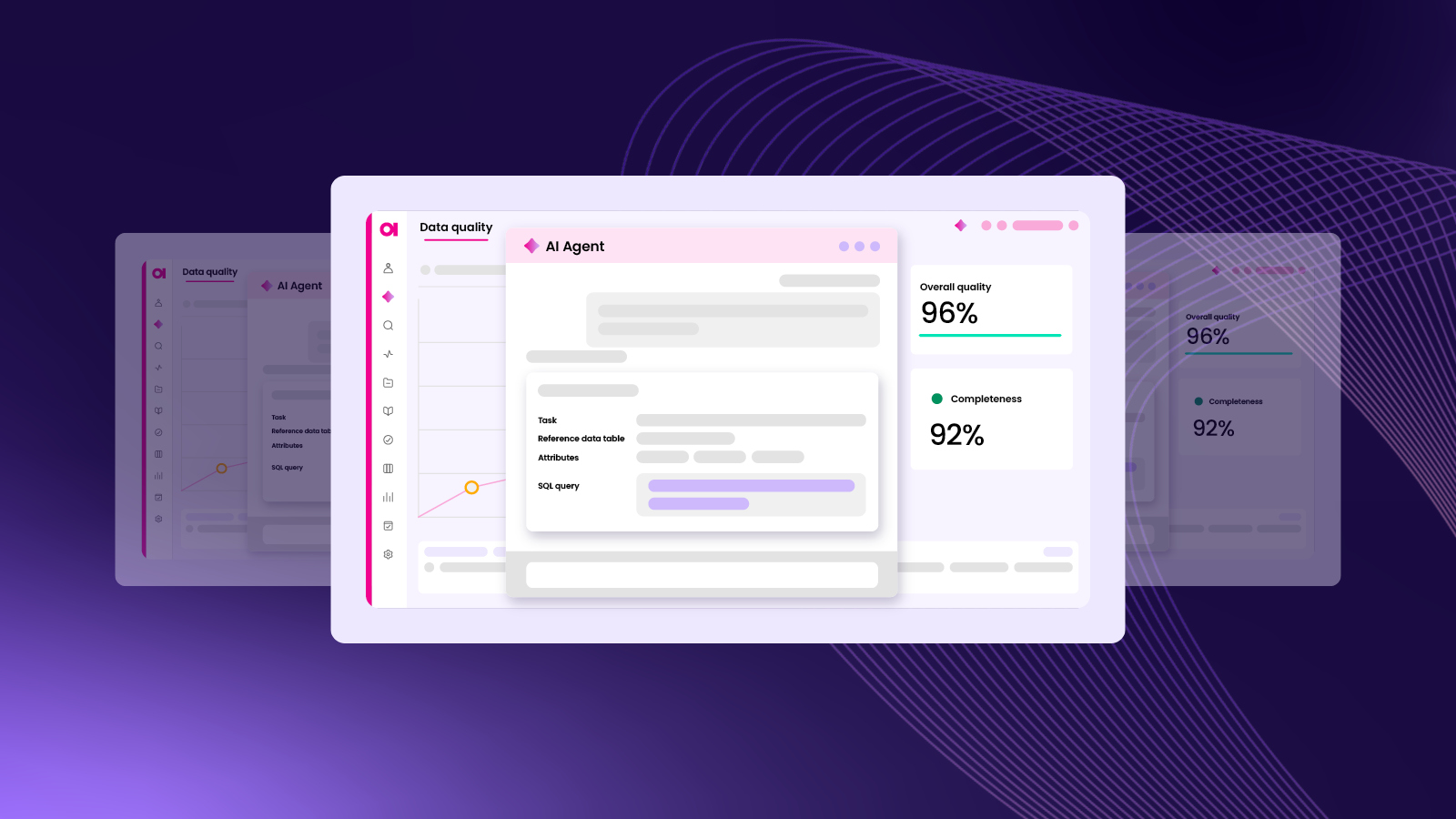

Learn more about our data quality software to see what Ataccama ONE is capable of!