The Evolution and Future of Data Quality

Data-driven innovation is real, and you don’t have to be Amazon or Google to benefit from your data. Even non-global companies in retail, insurance, and other industries reap huge benefits from implementing data quality solutions. By now, data quality has become an integral part of every data-driven company’s data strategy.

But what made this possible?

Huge leaps in the perception of data quality management and technology for its implementation. And there is more to come.

Read on to learn how data quality management evolved through the years and what to get excited about in the future.

Modern data quality is a holistic business discipline

Data and data quality are no longer a domain of IT, DBAs, or “data geeks.” Those days are over. People used to see data quality as a technical discipline because the tools weren’t user-friendly or were only usable through highly technical methods. That is no longer the case.

Now that the business has a much better understanding of the importance of data quality, it’s safe to say that data quality is viewed as a business function, i.e., something necessary for proper business operations. Advanced organizations now embed data quality specialists (or data stewards) within specific lines of business, product teams, or teams responsible for business innovation.

It is great to have new champions for data in the C-suite represented by Chief Data Officers, but the interest doesn’t end with them anymore. Starting a data quality campaign is now on the agenda of CFOs, CEOs, CMOs, Chief Digital Officers, and VPs of Product.

This greater awareness of the importance of data quality and its benefits has slowly brought a change in scale for data quality programs. They have gradually grown from single-department initiatives to enterprise programs (usually part of a larger data governance program), and the share of enterprise-wide programs is quickly increasing.

The evolution of data quality

As the business took over and started to own data, two things happened in parallel:

- Data quality technology evolved from manual to highly automated

- The scope of a data quality campaign grew from a set of standard functions to what can only be called unlimited

Data quality technology has become extraordinarily automated

Data quality technology has made huge leaps from its SQL-based origins. As more business users started to use data quality tools, the demands on usability skyrocketed. Here is how the tech evolved:

- Manual and repetitive. At this stage, data quality tools (or SQL) let you cleanse or validate data in a one-off manner. You had to either write specific code for a specific data source or configure rules in a point-and-click interface and then apply them to a specific data source.

- Metadata-driven. This was the first attempt at automating data quality management by collecting data source metadata and creating reusable metadata-based rules. Configuration and deployment time savings could reach 90% thanks to this.

- AI-driven. As machine learning technology matured and business users embraced data quality, it only made sense to use it to further automate data quality management and augment data stewards’ experience. Machine learning is now used to simplify the configuration of data quality projects by suggesting the rules to use and for autonomous detection of data irregularities, also known as anomalies.

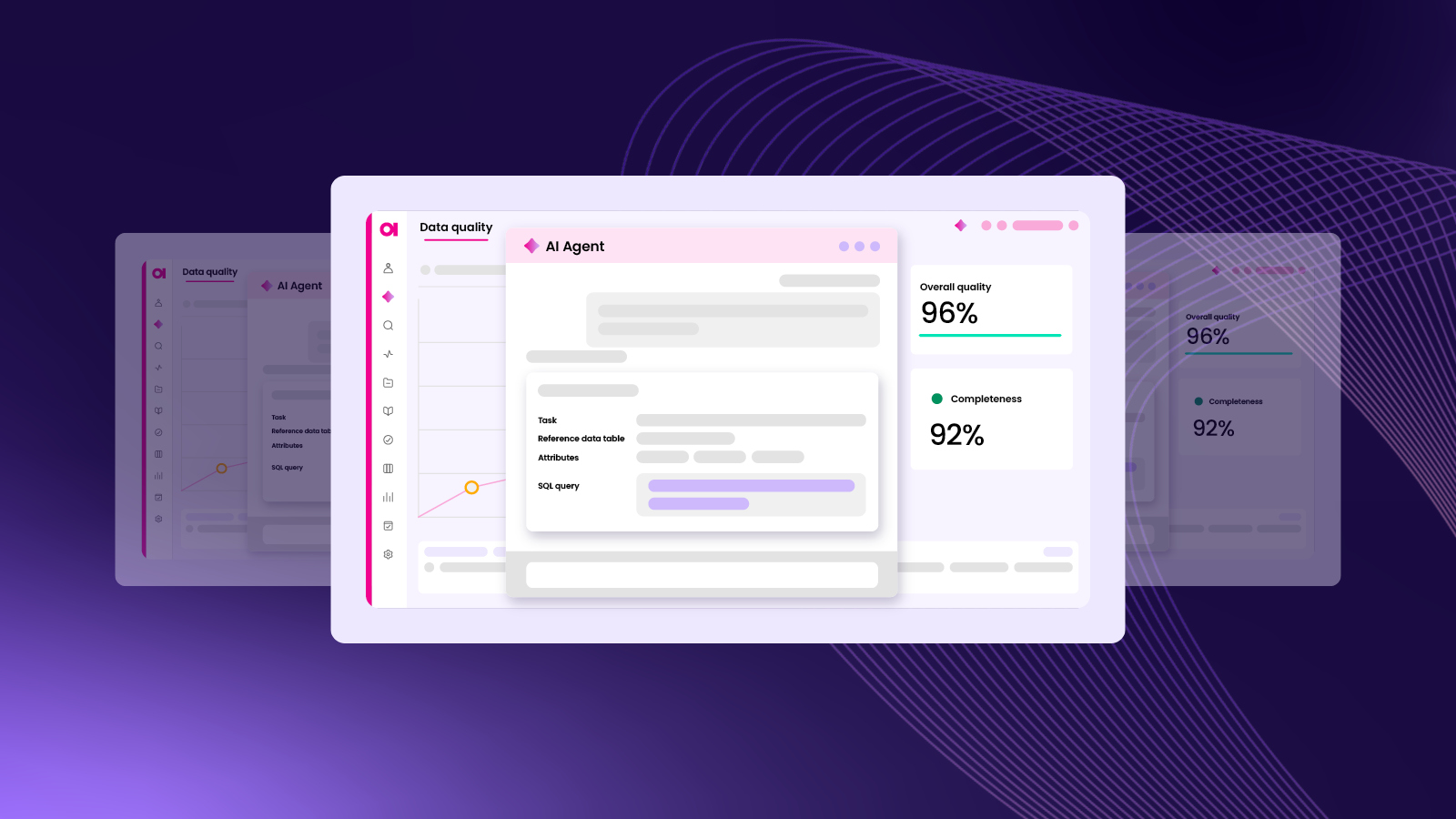

- Data Quality Fabric. Data Quality Fabric is, at the moment, the most advanced iteration of an automated data quality campaign. It sits on the backbone of a data catalog, which maintains an up-to-date version of enterprise metadata, and combines AI and the rule-based approach to automate all aspects of data quality: configuration, measurement, and providing data.
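To make the metadata-driven stage above more concrete, here is a minimal Python sketch. It assumes a hypothetical rule library keyed by semantic type and hypothetical catalog metadata that maps columns to those types; the names `RULES`, `CRM_METADATA`, and `validity_by_column` are illustrative and do not come from any specific product.

```python
import re
from typing import Callable

# Hypothetical rule library: one reusable rule per semantic type, not per source.
RULES: dict[str, Callable[[str], bool]] = {
    "email": lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    "us_zip": lambda v: re.fullmatch(r"\d{5}(-\d{4})?", v) is not None,
}

# Hypothetical metadata, as a catalog might hold it: column name -> semantic type.
CRM_METADATA = {"contact_email": "email", "postal_code": "us_zip"}
BILLING_METADATA = {"invoice_email": "email"}


def validity_by_column(rows: list[dict[str, str]], metadata: dict[str, str]) -> dict[str, float]:
    """Return the share of valid values per column (assumes rows is non-empty)."""
    scores = {}
    for column, semantic_type in metadata.items():
        rule = RULES[semantic_type]  # the rule is chosen by metadata, not hand-wired
        values = [row[column] for row in rows]
        scores[column] = sum(rule(v) for v in values) / len(values)
    return scores


crm_rows = [
    {"contact_email": "ann@example.com", "postal_code": "02139"},
    {"contact_email": "not-an-email", "postal_code": "2139"},
]
billing_rows = [{"invoice_email": "bob@example.com"}]

# The same "email" rule is reused for two different sources without new code.
crm_scores = validity_by_column(crm_rows, CRM_METADATA)
billing_scores = validity_by_column(billing_rows, BILLING_METADATA)
print(crm_scores, billing_scores)
```

The point of the design is that adding a new data source only requires tagging its columns; the rules themselves are written once.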

The scope of data quality is unlimited

Data quality is no longer perceived as a set of standard functions like cleansing and validation. Instead, decision-makers and data stakeholders now ask themselves this question:

“What do we need to ensure high quality data for our business?”

In many cases, this requires seemingly separate disciplines, such as master data management and reference data management. The importance of data quality has expanded to all of these areas along with others like metadata management. When integrated with data quality, these disciplines produce powerful synergies.

Why master data management is part of data quality

Data quality and master data management have an interesting relationship. On the one hand, functions like standardization and cleansing are vital for efficient master data management. On the other hand, MDM can be viewed as “extended data quality” for certain domains. After all, if you need high quality customer data, you cannot get it without mastering and consolidating data. It’s also true for other domains and use cases where data originates in many different applications and either needs to be grouped or merged into a golden record.
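As a rough illustration of merging records into a golden record, the Python sketch below groups customer records by a normalized e-mail address and keeps the newest non-empty value of each field. Both the matching rule and the survivorship rule are assumptions chosen for brevity; real mastering logic is usually far richer.

```python
from collections import defaultdict


def match_key(record: dict) -> str:
    """Hypothetical matching rule: same e-mail after trimming and lowercasing."""
    return record["email"].strip().lower()


def golden_records(records: list[dict]) -> list[dict]:
    """Group records by match key and merge each group into one golden record."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)

    merged = []
    for key, group in groups.items():
        golden = {"email": key}
        # Survivorship rule (an assumption): the newest non-empty value wins.
        for record in sorted(group, key=lambda r: r["updated"]):
            for field, value in record.items():
                if field not in ("email", "updated") and value:
                    golden[field] = value
        merged.append(golden)
    return merged


records = [
    {"email": "Ann@Example.com ", "name": "Ann Lee", "phone": "", "updated": "2023-01-10"},
    {"email": "ann@example.com", "name": "", "phone": "555-0100", "updated": "2024-03-02"},
    {"email": "bob@example.com", "name": "Bob Roy", "phone": "555-0199", "updated": "2022-07-21"},
]

result = golden_records(records)
print(result)
```

Note that the cleansing step (trimming and lowercasing the e-mail) is what makes the match possible, which is the dependency between data quality and MDM described above.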

Why reference data management is part of data quality

Reference data management is important for reporting consistency, analytics, and cross-departmental understanding of various business categories. In industries that depend heavily on reference data, such as insurance or transportation, data quality management can be greatly simplified if it is connected to a single source of reference data. It becomes easier to validate and standardize data, decrease error rates on data entry, or prepare data for reporting.
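A small Python sketch shows why a single reference source simplifies both validation and standardization. The `COUNTRY_REFERENCE` table is a hypothetical stand-in for a managed reference data set; in practice it would be served from the reference data management system rather than hard-coded.

```python
from typing import Optional

# Hypothetical single source of reference data: accepted variant -> canonical code.
COUNTRY_REFERENCE = {
    "us": "US", "usa": "US", "united states": "US",
    "de": "DE", "germany": "DE", "deutschland": "DE",
}


def standardize_country(raw: str) -> Optional[str]:
    """Return the canonical code, or None if the value is not in the reference data."""
    return COUNTRY_REFERENCE.get(raw.strip().lower())


entries = ["USA", " germany", "Atlantis"]
standardized = [standardize_country(e) for e in entries]

# One lookup serves both purposes: standardization and validation.
invalid = [e for e, s in zip(entries, standardized) if s is None]
print(standardized, invalid)
```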

How data catalogs automate/simplify data quality

Data catalogs are popular tools in enterprise settings. They give you an overview of available data and simplify data discovery. Data catalogs are often packaged with a business glossary, data lineage, and other tools for comprehensive metadata management. But here’s why they are more powerful with integrated data quality:

- It’s much better to not only find the data but also see its data quality.

- Browsing lineage with data quality information helps you find the root cause of a problem faster.

- How cool is it to open a business glossary and see data quality for all of your address, customer, or product data? It’s much easier to monitor data quality when you can.

To summarize, any technology, data management or not, that contributes to improved accuracy and reliability of data is on a shared mission to improve data quality.

The future: more magic

So far, we have looked at how data quality management has evolved over the years in perception and scope. Now let’s dream about what it might look like in the future.

The intelligence of the Data Quality Fabric will rise

As we have established above, Data Quality Fabric is the most advanced step in the evolution of data quality. In its current state, it can automate data quality monitoring and simplify data providing. Now, let’s discuss how the mature Data Quality Fabric will work.

In our final vision for Data Quality Fabric, most data-related requests will be self-service for users and automated for machines. An example of a workflow within the Data Quality Fabric could look like this:

- Ask for data. A person or machine makes a data request.

- Find the data. The Data Quality Fabric finds relevant data sets of the best quality.

- Master the data. The Fabric decides whether data mastering (integration and consolidation) is necessary.

- Transform the data. The Fabric decides what transformations to data are required.

- Provide the data. The Fabric provides the data based on the use case, consumer, and permissions.
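The five steps above can be sketched in miniature. The Python example below is purely illustrative: the catalog entries, quality scores, roles, and the `serve_request` function are all hypothetical, and each step is reduced to a few lines so the overall flow stays visible.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    topic: str
    quality: float  # hypothetical 0..1 score produced by quality monitoring
    rows: list


# Hypothetical catalog content.
CATALOG = [
    Dataset("crm_customers", "customers", 0.92, [
        {"id": 1, "email": "ANN@EXAMPLE.COM", "tax_id": "000-00-0001"},
        {"id": 1, "email": "Ann@Example.com", "tax_id": "000-00-0001"},
        {"id": 2, "email": "bob@example.com", "tax_id": "000-00-0002"},
    ]),
    Dataset("legacy_customers", "customers", 0.61, [
        {"id": 9, "email": "old@example.com", "tax_id": "000-00-0009"},
    ]),
]

# Hypothetical permissions: role -> fields that role may see.
ALLOWED_FIELDS = {"analyst": {"id", "email"}, "steward": {"id", "email", "tax_id"}}


def serve_request(topic: str, role: str) -> list[dict]:
    """Ask -> find -> master -> transform -> provide, in miniature."""
    # Find: pick the best-quality data set for the requested topic.
    best = max((d for d in CATALOG if d.topic == topic), key=lambda d: d.quality)

    # Master: consolidate only if the same id appears more than once.
    rows = best.rows
    ids = [row["id"] for row in rows]
    if len(set(ids)) < len(ids):
        merged = {}
        for row in rows:
            merged.setdefault(row["id"], {}).update(row)
        rows = list(merged.values())

    # Transform: standardize e-mail casing.
    rows = [{**row, "email": row["email"].lower()} for row in rows]

    # Provide: return only the fields this consumer is permitted to see.
    allowed = ALLOWED_FIELDS[role]
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]


analyst_view = serve_request("customers", "analyst")
print(analyst_view)
```

The same function serves a steward with a different field set, so the request logic does not need to be duplicated per consumer.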

You can learn more about the Data Quality Fabric in this primer.

In the future, there will be no conflicts

In the current state of data quality, several dualities exist that produce a fragmented experience for users or make organizations choose one feature over another. In the future, these compromises will not be necessary. The future is AND, not OR.

Non-technical data people vs data engineers

Up until now, data quality tools have been designed either for non-technical data people or data engineers and data quality engineers. The two groups use separate tools or UIs, and their outputs are non-reusable.

The future of data quality management means that one group will reuse or improve the other group’s work. For example, if a data engineer creates a complex data cleansing pipeline, a data scientist will be able to use it to cleanse data in ad hoc mode for their own project.

The magic word here is continuity, and this is what metadata and unified data platforms enable.

Rule-based vs AI-based data quality

Nowadays, the demand for AI-based data quality is huge. Data teams want to automate the detection of issues and even have AI suggest data quality rules. Some go as far as calling rule-based data quality a thing of the past. We don’t think so.

The domain expertise that business users and data stewards have accumulated is a great source of “metadata” to create data quality rules by hand, and it’s also efficient. Once you create a rule, you can store it in a centralized rule library, and everyone can reuse it.

In our opinion, the future is in combining AI-based and rule-based data quality management. The end data consumer will not differentiate between the two: understanding the data quality of a data set presented to them plus transparency into data quality measurement will be enough. One important note here is that AI algorithms will need to be explainable.
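The following Python sketch hints at what such a combination could look like. It pairs one hand-written rule with a simple statistical outlier check based on the median absolute deviation (MAD). The outlier check is only a stand-in for an AI-based detector, not a machine learning model, and every finding carries a plain-language reason to reflect the explainability requirement. All names and thresholds are assumptions.

```python
from statistics import median


def rule_issues(values: list[float]) -> list[tuple[int, str]]:
    """Hand-written rule from a steward: amounts must be non-negative."""
    return [(i, "rule: amount must be non-negative") for i, v in enumerate(values) if v < 0]


def anomaly_issues(values: list[float], k: float = 5.0) -> list[tuple[int, str]]:
    """Stand-in for an AI detector: flag values more than k MADs from the median."""
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # no spread to compare against
    return [
        (i, f"anomaly: {v} is more than {k} x MAD ({mad}) away from median {center}")
        for i, v in enumerate(values)
        if abs(v - center) > k * mad
    ]


order_amounts = [100, 102, 98, 101, 99, 100, 500, -5]

# The consumer sees one combined report, regardless of which method found the issue.
report: dict[int, list[str]] = {}
for index, reason in rule_issues(order_amounts) + anomaly_issues(order_amounts):
    report.setdefault(index, []).append(reason)

for index in sorted(report):
    print(index, order_amounts[index], report[index])
```

Here the value 500 is caught only by the statistical check, while -5 is caught by both, which illustrates how the two approaches complement each other.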

Users vs systems as data consumers

As automation takes over more areas of data management, it’s likely that in the future, most data consumers will be machines and algorithms. They will get data via APIs and automate some of the value-added tasks such as report creation. Still, data people will be able to consume the same output. So, data quality pipelines should be reusable for both people and machines.

We can dream a bit further on and assume that the AI on the consumer machine side will be mature enough to request an API from the Data Quality Fabric, which will generate it on the fly.

Again, the future is in the reusability of the transformation logic.

Agility vs governance

Modern business value creation and innovation happen within agile, product-focused, data-driven, cross-functional teams. These teams develop new hypotheses all the time and need high quality data fast, so bottlenecks in obtaining data are enormously costly for the organizations that rely on such teams.

On the other hand, data governance teams care about proper workflows and data security. They want to prevent access to sensitive data and ensure only the data necessary for a given purpose is accessed and used by business teams.

Resolving this conflict requires a high degree of data governance automation, especially in the areas of data access, policy enforcement, and data quality management. With this achieved, business teams don’t have to wait for approvals or worry about compliance; they can simply use the data they find in the data catalog.

To achieve this kind of automation, both teams need to operate within a single data platform that enables governance and data democratization.

Summary

Data quality has come a long way from its technical roots and limited scope. Modern tools are more user-friendly and AI-augmented. The concept itself is much clearer and more widely accepted by business users and the C-suite alike. The scope has grown as well. Data quality programs are more often enterprise-wide and involve the implementation of related technologies: master and reference data management and metadata management.

The tight integration of these technologies gave rise to the Data Quality Fabric, a new architecture design for automated data governance and frictionless data providing. Looking into the future, the Data Quality Fabric will become smarter and more powerful, providing high quality data to users and machines faster.

The maturity of data platforms will also satisfy the needs of various types of users and use cases under a single roof. You can expect a continuity of work between technical and business users. AI- and rule-based data quality management will work seamlessly together. Likewise, users and machines will be able to consume the same data outputs without duplicating data pipelines. Lastly, agile and governance teams will get what they need without compromises.

Join the future?

Are you excited about the future of data quality management? Let’s build it together! Reach out to us with questions, suggestions, and demo requests.

The future of data quality management is what you make it.