How Data Observability Helps Data Stewards, Data Engineers, and Data Analysts

Data observability is valuable to data people at all levels of the data spectrum. From the people who create data pipelines (data engineers), to the people who maintain them (data stewards), to the people who actually consume data and deliver analysis (data analysts), all data people can enjoy the benefits of a self-service, fully automated data management tool like data observability. Let’s learn how it can make data people’s lives easier in these three unique roles.

Data Stewards

Data stewards bear an essential responsibility for their data domain. Their colleagues rely on them to communicate about the meaning, source, and use of data. They are the first people other users turn to when experiencing issues with a data set.

That’s why data observability tools are their best friends. They help monitor the health of your data systems and the quality of data in them, allowing data stewards to deliver vital information at a moment’s notice. Let’s learn how.

Data steward responsibilities

Data stewards must guarantee high-quality, compliant, and discoverable data. Some of their most common responsibilities include:

- Serving as subject matter experts. Data stewards must have a comprehensive understanding of all the data in their domain so they can inform users of its characteristics: where it’s stored, where it came from, who has access to it, what it describes, whether it is high quality, and so on.

- Monitoring data quality. Before anyone can use data, they need to know if it has issues and if it’s suitable for its intended purpose. Stewards must keep a constant pulse on datasets in their domain to deliver and ensure high-quality data on demand.

- Implementing and upholding governance policies. Data governance is a data steward’s rulebook: stewards enforce the principles and processes outlined in your organization’s governance framework. They translate policies for users, control access permissions, define data quality (DQ) and data governance (DG) processes, and ensure that all data is compliant and meets the standards specified by the organization (and any relevant legal entities). Data stewards are also responsible for data definitions, can participate in data initiatives, and can propose or vote on data policies.

- Performing root cause analysis. Data stewards must identify and fix existing issues to prevent faulty data from reaching downstream systems. They can flag problematic datasets, contact data owners, and use data lineage to track issues back to their source.

How data observability can help

Data observability provides data stewards with automatically updated insights and monitoring capabilities, enabling the proactive management of data quality, making it easier to address issues, ensure compliance, and bolster effective communication with stakeholders. Here is how:

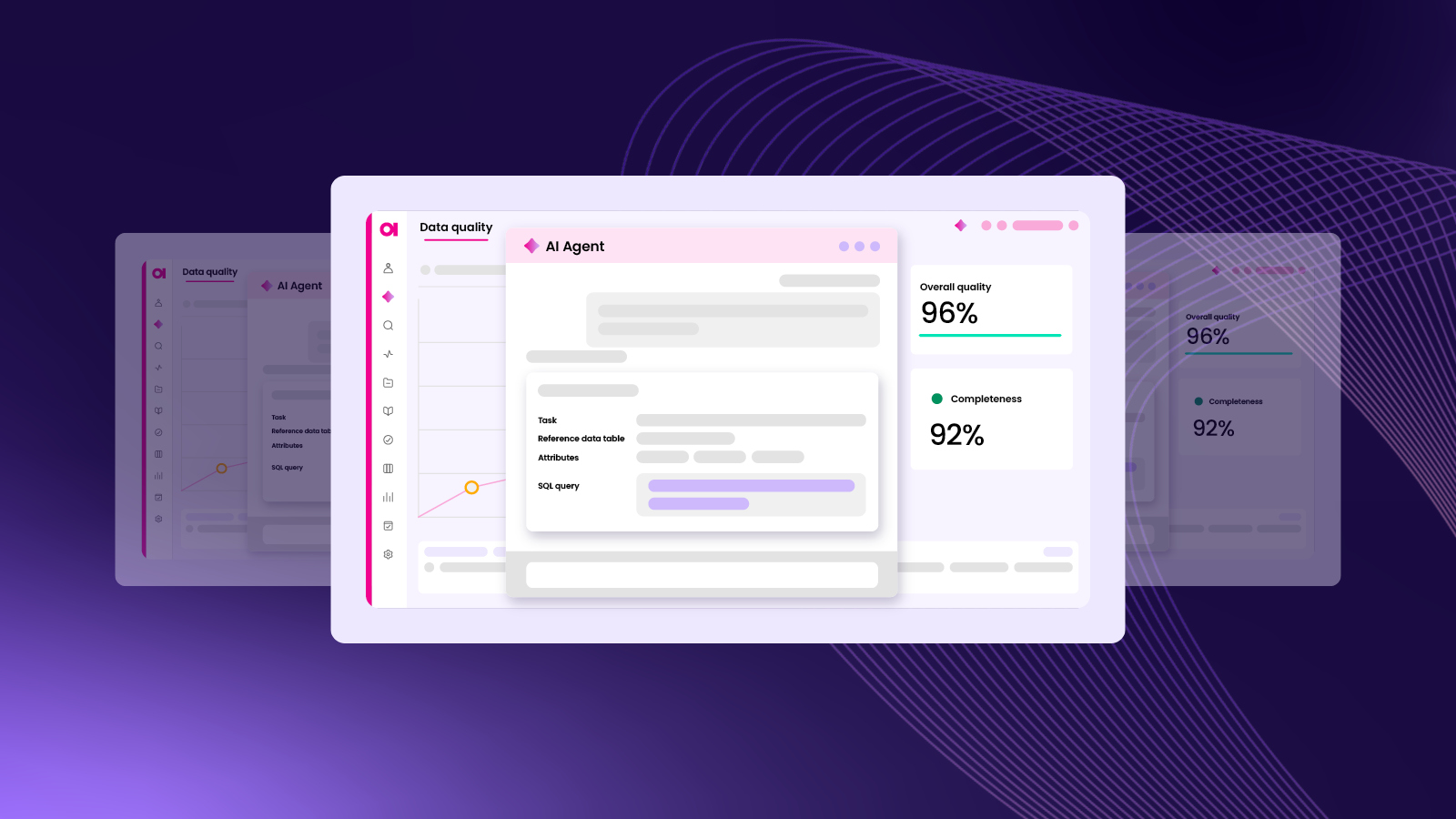

Data quality monitoring

One of the primary jobs of a data steward is keeping data quality at or above the company’s desired threshold. Data observability can make it much easier to keep a pulse on their domain, DQ definitions, and DQ-related KPIs such as accuracy, freshness, and completeness. Comprehensible dashboards with DQ information and alerting capabilities are readily available and regularly updated, allowing for near real-time data assessments. Stewards can then act immediately when issues arise and prevent severe problems from reaching downstream systems, increasing efficiency, predictability, and overall trust in data.
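To make KPIs like completeness and freshness more concrete, here is a minimal Python sketch of how such checks could be computed and turned into alerts. The function names, thresholds, and sample rows are hypothetical and not taken from any particular observability product; a real tool would compute these metrics automatically and at scale.

```python
from datetime import datetime, timedelta, timezone


def completeness(rows, column):
    """Share of rows where `column` is present and not None (0.0 to 1.0)."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


def is_fresh(last_updated, max_age, now=None):
    """True if the dataset was updated within `max_age` of `now`."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= max_age


def check_dataset(rows, column, last_updated, min_completeness, max_age, now=None):
    """Return a list of human-readable alerts; an empty list means healthy."""
    alerts = []
    score = completeness(rows, column)
    if score < min_completeness:
        alerts.append(
            f"completeness of '{column}' is {score:.0%}, below {min_completeness:.0%}"
        )
    if not is_fresh(last_updated, max_age, now):
        alerts.append("dataset is stale")
    return alerts


# Made-up sample data: one of four emails is missing, and the last
# update happened 30 hours before the (fixed) check time.
rows = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 3, "email": "c@example.com"},
    {"customer_id": 4, "email": "d@example.com"},
]
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_updated = datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc)

alerts = check_dataset(
    rows,
    "email",
    last_updated,
    min_completeness=0.9,
    max_age=timedelta(hours=24),
    now=now,
)
```

With this sample data, `alerts` contains two entries: one for the `email` column falling below the assumed 90% completeness threshold, and one for the dataset being older than the assumed 24-hour freshness window.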

Data lineage and issue resolution workflows

Investigating the source of a data issue, such as an outlier or an unexpected change in data volume or schema, can also be streamlined with data observability. An observability tool will provide a complete lineage of any dataset, keeping track of the source, destination, and transformations. This allows stewards to understand the origin of data and how and by whom it’s used across multiple applications. When a problem occurs, they can use this lineage information to find the cause, assess the impact, and notify responsible stakeholders. If the observability tool has collaborative capabilities, they can also alert the data owner so that work on a fix can begin.
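Conceptually, lineage is a directed graph of datasets, and both root cause analysis and impact assessment are graph traversals. The following Python sketch illustrates the idea under that assumption; the dataset names and the `LINEAGE` mapping are invented for the example, and real tools typically derive lineage from metadata rather than a hand-written dictionary.

```python
from collections import deque

# Hypothetical lineage: each key feeds the datasets listed in its value.
LINEAGE = {
    "crm_export": ["customers_clean"],
    "web_events": ["sessions"],
    "customers_clean": ["customer_360"],
    "sessions": ["customer_360"],
    "customer_360": ["churn_dashboard", "marketing_report"],
}


def downstream(lineage, start):
    """All datasets reachable from `start`: the potential impact of an issue."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def upstream(lineage, target):
    """All datasets that feed `target`, directly or indirectly: root-cause candidates."""
    reversed_edges = {}
    for parent, children in lineage.items():
        for child in children:
            reversed_edges.setdefault(child, []).append(parent)
    return downstream(reversed_edges, target)
```

For instance, `upstream(LINEAGE, "churn_dashboard")` lists every dataset a steward might need to inspect when the dashboard looks wrong, while `downstream(LINEAGE, "crm_export")` lists everything that could be affected by a faulty CRM export.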

Compliance

Data stewards are responsible for upholding (and contributing to) company governance policies, which describe the necessary levels of data quality, how various users and personas should handle data, and how to respond to a data incident if one happens. Data observability can provide an aggregate view of data quality from a single dashboard, automated anomaly detection in near real time, and streamlined data discovery for greater visibility into the data landscape. This will help data stewards assure stakeholders and decision-makers that their data is accurate, safe, and compliant. You can also use lineage information to investigate data breaches and get alerts about unauthorized access and privacy risks.

Fostering collaboration and transparency

An observability tool provides a centralized place where all users can access the same data quality information for every dataset available to them, confident that what they are looking at is current and reliable. Data stewards won’t need to verify the quality of datasets before use because users will already have that information available to them. They can also use collaborative features (like leaving comments and tasks on datasets) to share insights, raise concerns, and collaborate on issue resolution.

Data Engineers

If there’s a problem with your data pipeline, a data engineer will be the one to fix it. They build and are responsible for the systems which collect, manage, and store raw data, mastering the flow from source point to eventual consumption. If there is a bottleneck in the transmission of data or low-quality data reaching downstream systems, they can track the issue and put a stop to it.

Observability tools are valuable to data engineers because they provide the lineage and automate various tasks (e.g., DQ monitoring) which would otherwise have to be done manually. Data observability helps with infrastructure and pipeline management tasks, allowing your data engineers to build robust and scalable pipelines. Let’s find out how.

Data engineer responsibilities

Data engineers build and maintain your company’s data pipelines which control the data flow throughout the organization. Some of their most common responsibilities include:

- Design and maintain data pipelines. Data engineers have to design channels so data can flow frictionlessly while also being complete and up to date. They must know all stakeholders’ data requirements and design systems to deliver that data in the ideal state.

- Troubleshoot issues in data flows and pipelines. Suppose data is moving slowly or losing quality from one location to another. In that case, a data engineer can use data lineage to track issues back to their source and fix any inconsistencies or stoppages.

- Automate manual processes related to data movement. Data engineers must automate processes to improve efficiency, ensure timeliness, and reduce errors. They use programming languages like Python and SQL to write scripts or use specialized data management tools and frameworks. They can automate manual processes like data extraction, transformation, loading, and metadata management.

- Develop and maintain datasets and analytical tools. Data engineers develop data sets through processes like source identification, cleansing and transformation, data integration, and many others. They can also be responsible for the organization’s data management and analysis tools.

- Onboard new data sources. When your company adopts a new data source, data engineers are responsible for integrating it into the system and finding its place in the pipeline.

How data observability can help

Data observability is an excellent tool for supporting data infrastructure, the primary responsibility of data engineers. Using data observability makes their pipelines more efficient and self-service. Pipelines built with observability are more accessible to a broader range of users. They can grow and expand frictionlessly along with your data sources and business. Let’s find out more about how data observability helps data engineers.

Optimizing pipeline performance

Data observability doesn’t just provide automated updates on the health of your stored data. It can also deliver overall metrics of your pipeline and systems. It can give information about:

- Data throughput. The rate at which data can be transferred or processed within a data pipeline.

- Resource utilization. How various resources (employee time, processing power, storage space, etc.) are used within the pipeline.

- Latency. Time delays between the data being sent and its arrival at the destination.

By analyzing these metrics, data engineers can make educated assessments of their pipelines. Data observability tools will tell them if the pipeline contains a particularly slow process or step. They can correct it by adjusting configurations, reallocating resources, and making other changes that enhance overall performance.
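As a rough illustration of how throughput and latency could be derived from pipeline run records, consider the Python sketch below. The `StepRun` structure, step names, and timings are assumptions made for the example, not the data model of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class StepRun:
    """One execution of a pipeline step (hypothetical record layout)."""
    name: str
    rows_processed: int
    started_at: float   # seconds since the pipeline run began
    finished_at: float  # seconds since the pipeline run began

    @property
    def duration(self) -> float:
        return self.finished_at - self.started_at

    @property
    def throughput(self) -> float:
        """Rows per second; 0.0 if the step took no measurable time."""
        return self.rows_processed / self.duration if self.duration > 0 else 0.0


def slowest_step(runs):
    """The step with the longest duration, a natural first suspect for a bottleneck."""
    return max(runs, key=lambda run: run.duration)


def end_to_end_latency(runs):
    """Time from the earliest step start to the latest step finish."""
    return max(r.finished_at for r in runs) - min(r.started_at for r in runs)


# Made-up timings for a three-step pipeline.
runs = [
    StepRun("extract", 100_000, 0.0, 20.0),
    StepRun("transform", 100_000, 20.0, 120.0),
    StepRun("load", 100_000, 120.0, 140.0),
]
```

In this made-up run, `slowest_step(runs)` points to the `transform` step, which processes the same number of rows as the other steps at a fifth of their throughput, so it is the first place an engineer would look.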

Issue resolution, lineage, and root cause analysis

With the advanced data lineage provided by observability, data engineers can track data flow and find the root cause of errors. They will also have insights into how the lineage of data impacts downstream systems (e.g., if a source has a DQ issue and is delivering invalid data), an understanding of how data changes in sources, and how those transformations affect other system components.

The level of in-depth information at every stage of the data journey allows data engineers to make more informed decisions about vital processes like data integration, data model changes, system upgrades, disruption minimization, and data integrity assurance.

Data validation and quality assurance

Data observability allows you to set up quality assurance checks at various stages of the pipeline. It will monitor your specified DQ dimensions and alert you about anomalies in the data in real time or near real time. Instant alerts will notify the data owner and any other relevant stakeholders when changes occur, enabling them to troubleshoot the issue and keep the data infrastructure running smoothly. With thorough checks like these, data engineers should have no problem vouching for data quality as it moves downstream.
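One simple way to picture anomaly detection is a z-score check on daily row counts: a new value is flagged when it sits too many standard deviations away from recent history. The sketch below assumes this technique purely for illustration; observability tools may use different or more sophisticated models, and the function name, threshold, and sample counts are hypothetical.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, latest, z_threshold=3.0):
    """Flag `latest` if it deviates from `history` by more than `z_threshold` standard deviations."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # History is perfectly constant, so any change is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Made-up row counts for the last seven daily loads.
daily_row_counts = [10_120, 9_980, 10_050, 10_200, 9_900, 10_010, 10_075]
```

Here a load of 4,200 rows would be flagged, while a load of 10,100 rows would pass as normal variation. A check like this could run after each pipeline stage and trigger the alerts described above.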

Scalability

One of the most important jobs of a data engineer is onboarding new data sources and integrating them into the data pipeline. Observability can make that as simple as a click of a button. You only need to configure connections to your data sources once and let data observability take care of the rest. Thanks to AI, all your data sources will be automatically profiled and monitored, ideally in bulk. Additionally, a powerful data observability solution allows data engineers to onboard any new data source at any time while reusing existing configurations instead of setting them up from scratch.

But the scalability benefits don’t stop at onboarding! Observability allows engineers to monitor the scalability and capacity requirements of their pipelines. With this information, they can assess resource utilization, predict growth patterns and plan accordingly. This will help them identify bottlenecks in scaling and help proactively allocate time and resources to increasing data volumes and processing needs.
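As a simplified picture of predicting growth, the Python sketch below fits a straight line to past storage usage and estimates how long until a capacity limit is reached. The linear trend, function names, and numbers are assumptions for illustration only; real growth is rarely perfectly linear, and observability tools may forecast differently.

```python
def fit_line(values):
    """Least-squares slope and intercept for values observed at x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    denominator = sum((x - x_mean) ** 2 for x in range(n))
    slope = numerator / denominator
    return slope, y_mean - slope * x_mean


def periods_until(values, capacity):
    """Future periods before the trend line reaches `capacity`, or None if usage is not growing."""
    slope, intercept = fit_line(values)
    if slope <= 0:
        return None
    last_x = len(values) - 1
    x_at_capacity = (capacity - intercept) / slope
    return max(0.0, x_at_capacity - last_x)


# Made-up monthly storage usage in GB, growing by 20 GB per month.
monthly_storage_gb = [100, 120, 140, 160, 180]
```

With this sample history, `periods_until(monthly_storage_gb, 300)` returns `6.0`, meaning the assumed 300 GB limit would be reached in about six months if the trend continues.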

Data Analysts

Data analysts are responsible for turning your raw data into actionable decisions for your company. They look at massive tables of seemingly incoherent data and find links and patterns, developing models to predict future trends and behaviors.

Data observability helps data analysts by making their everyday tasks more straightforward, making data easier to access and understand, and streamlining processes such as checking data integrity, data discovery, and collaboration between data users. Let’s learn more about how data observability benefits data analysts below.

Data analyst responsibilities

Data analysts must work with technical and business users to generate actionable insights from an organization’s data. They are consumers of data and need sets that are relevant and of the highest quality. Let’s look at some of their responsibilities.

- Translate business questions. Whenever business-minded individuals have a question that data can answer, it’s up to the data analyst to formulate a data analysis project which can answer that question. They must define the scope and approach and deliver actionable insights for the business department. The business department might develop data-related KPIs with data analysts, holding the analyst responsible for using these KPIs to assess and monitor performance.

- Prepare datasets. If a data analyst needs to use a dataset that isn’t prepared or complete, it falls on them to repair/prepare it. They can perform basic data preparation tasks like joining, transforming, standardizing, or cleansing data sets. All data sets need to be fully prepared before they can be analyzed.

- Understand their data domain. Data analysts must clearly understand the data in their domain (or all of the business’s data, depending on the size of the company, how many analysts work there, etc.). They must be aware of the data’s profile, any anomalies, and the history and lineage of the data asset.

- Analyze data. Data analysts must collect and analyze large amounts of data to identify useful trends and patterns. They do so by using statistical and machine learning techniques and developing models which can be used to predict future trends and behaviors.

- Communicate findings. Business users might not have enough domain knowledge to fully understand the key takeaways from a dataset. It’s up to the data analyst to communicate that information in a digestible format via visualizations, reports, or presentations. They also need to work with technical users whenever they need access to a dataset or if a data problem needs to be solved/discovered.

How data observability helps

Data observability enhances the effectiveness and efficiency of data analysts in their tasks and responsibilities. It enables them to deliver trustworthy insights and expedites data-driven decision-making processes.

Less data preparation and easier discovery

Data observability gives data analysts a clear view of a dataset’s quality and other key metrics before they even open it. This will help them choose the right sets for their projects and avoid problematic sets which require lots of preparation. With automated metadata tagging, similar sets will be easier to discover and group. Data observability also helps them interact with data in a self-service manner, helping them query the data, visualize it, and uncover patterns that might not be immediately apparent. They can conduct ad-hoc analysis, track high-level KPIs, gain new insights, and discover hidden opportunities or correlations in the data.

Issue resolution and automated data quality monitoring

If problematic data reaches an analyst’s projects, it can hurt the results. Data analysts can use data observability to get alerted when an issue is discovered within one of their datasets. They can use it to monitor pipelines and detect anomalies, inconsistencies, missing data, etc. It will help them understand how data is collected, transformed, and processed through different systems. Detailed lineage also uncovers relevant datasets in different systems that could be useful for analysis.

Collaboration between technical, semi-technical, and non-technical users

An observability tool offers a shared dashboard that any user can easily interpret, regardless of technical expertise. It’s also easier to set access permissions so analysts won’t have to wait to get access to a set before they can get a project started. Collaborative features like annotations and lineage (to find other stakeholders) allow analysts to communicate with each other and other users directly inside the data sets. Task management capabilities enable them to follow issues back to their source and alert the relevant stakeholders.

Increased efficiency

Automated monitoring of data quality-related processes enables analysts to quickly take action and troubleshoot issues as they occur, before they negatively impact business reporting. With visibility into logs, error messages, notifications, and lineage, analysts can efficiently address performance and data inconsistency issues. All this automation and the ability to react promptly reduce analysts’ need for manual intervention, freeing up their time to focus on their core responsibilities.

Conclusion

Data observability is an important tool for data people because it closely mirrors their responsibilities, providing them with the information they need to do their jobs successfully. It helps on a continuous basis, which allows them to see patterns in data, isolate issues, make relevant improvements, and grow their efficiency and effectiveness.

With a tool like Ataccama ONE, the observability feature is also scalable, which is vital for any growing organization. It is AI-driven and enables the setup of bundled domain detection rules and continuous, bulk monitoring of your systems, avoiding time-consuming manual rule setup every time your company introduces a new data source. Schedule a call with us today to learn more!