There’s no shortage of data in our data-driven world. You can find it stored in data warehouses, data marts, data lakes, mainframes, cloud CRMs, databases, and, yes, Excel spreadsheets.

The problem is the data you need may not be conveniently stored in just one application. It may be scattered across multiple data sources and exist in a frustrating mix of different formats. Or worse, you can’t be sure if it even exists.

Even if you can trace your information to a given data lake, there’s no guarantee it will be easy to locate or understand.

Where does one go to easily find trusted data? The data you require to successfully manage large projects, or simply execute routine tasks.

The data catalog.

What is a data catalog?

A data catalog is a centralized solution providing authorized users quick access to your company's most current and reliable business information. It serves as a record of all data and data sources in an organization.

It allows business and technical users to search, request, and receive datasets required to complete daily business tasks, manage projects, and generate analytical reporting.

“...data catalogs offer a fast and inexpensive way to inventory and classify the organization’s increasingly distributed and disorganized data assets.”

Gartner’s Augmented Data Catalogs: Now an Enterprise Must-Have for Data and Analytics Leaders

How does a data catalog work?

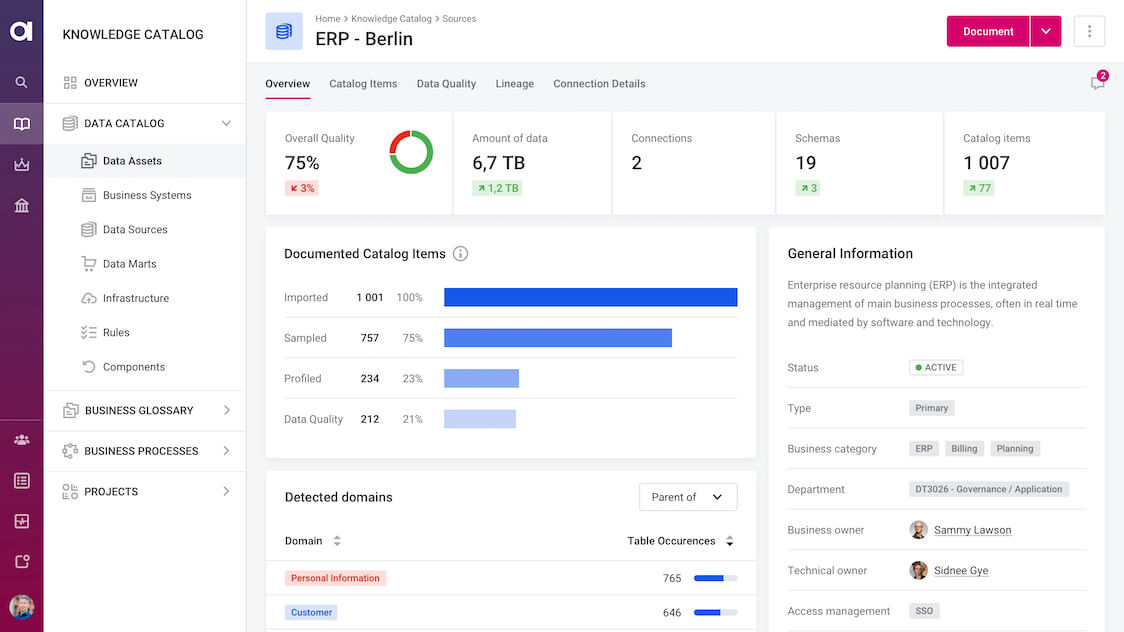

A data catalog connects to your data sources, extracts information about the data inside, and stores it in an orderly manner, making it easy to filter and locate.

We call this extracted information metadata, often referred to as “data about data.” The more advanced the data catalog solution, the more metadata the catalog is capable of capturing, extracting, and storing. If “smart” enough (or AI-enabled), a data catalog can even generate its own metadata.

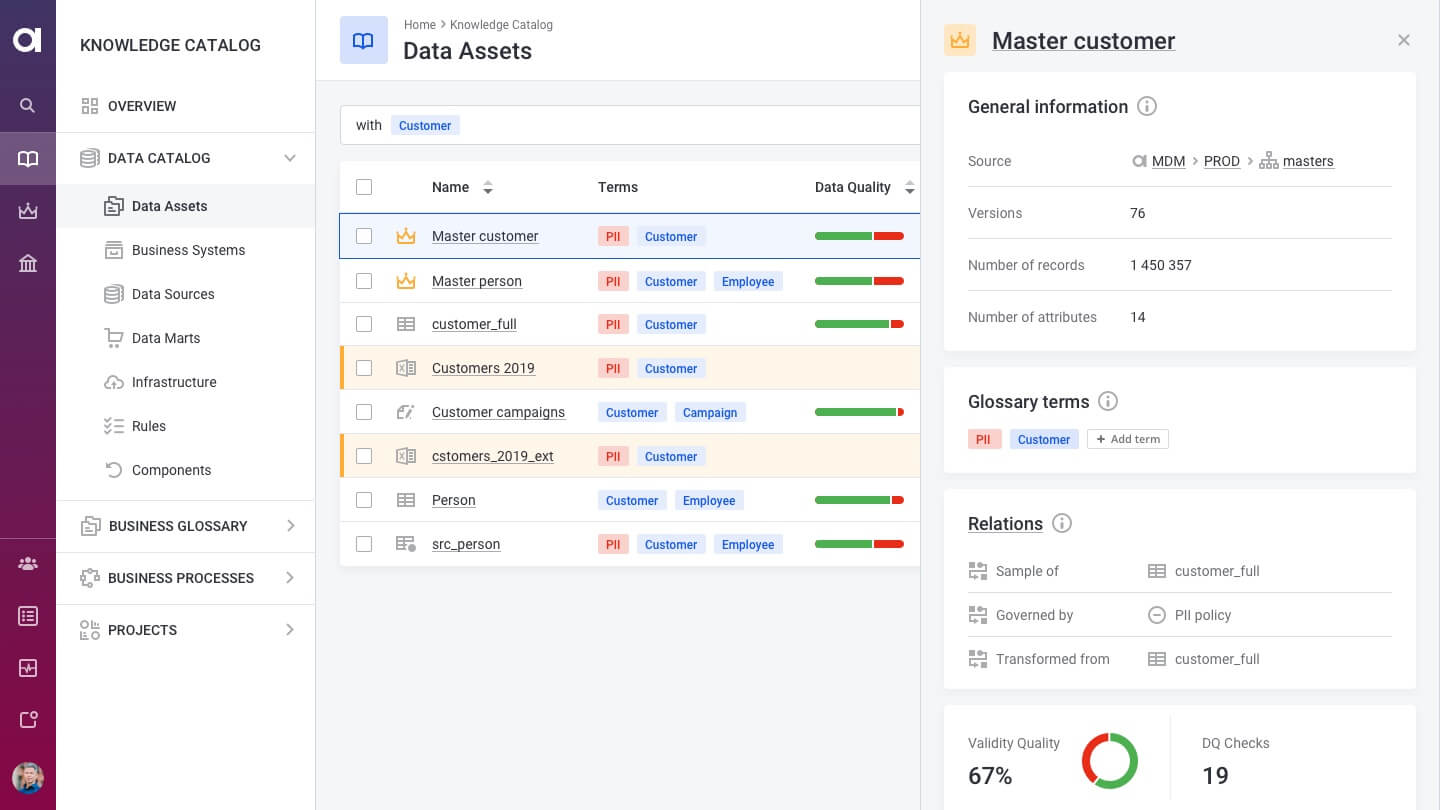

You can call this process documenting a data source. Here is what a documented data source might look like:

What kind of metadata can a data catalog store?

We can classify metadata into two categories: technical and business.

Technical metadata examples:

- The number of records and columns in a dataset

- Data types as defined in the data source, such as string, integer, varchar(25), etc.

- Names of schemas, partitions, tables, and attributes as seen in the data source

- Primary key and foreign key indicators

- Constraints

- Table and attribute descriptions imported from the data source
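To make the technical metadata above concrete, here is a minimal sketch of how a catalog might harvest it from a single source. It assumes a SQLite database purely for illustration; the `extract_technical_metadata` function and the `customers` table are hypothetical, and a real catalog would use its own connectors for each source type.

```python
import sqlite3

def extract_technical_metadata(conn):
    """Collect row counts, column counts, data types, and key flags per table."""
    catalog = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        row_count = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
        catalog[table] = {
            "row_count": row_count,
            "column_count": len(columns),
            "columns": [
                {
                    "name": c[1],
                    "type": c[2],              # type as declared in the source
                    "not_null": bool(c[3]),    # a simple constraint indicator
                    "primary_key": c[5] > 0,
                }
                for c in columns
            ],
        }
    return catalog

# Demo against a throwaway in-memory database (hypothetical table).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, email VARCHAR(25) NOT NULL)"
)
conn.executemany(
    "INSERT INTO customers (email) VALUES (?)",
    [("a@example.com",), ("b@example.com",)],
)
metadata = extract_technical_metadata(conn)
print(metadata["customers"])
```

The result is a plain dictionary keyed by table name, which could then be stored and indexed by whatever search layer the catalog uses.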

Business metadata is much more diverse and includes any information that end users would find useful when looking for the right data.

Business metadata examples:

- Business terms and definitions

- Titles and descriptions

- User-defined tags

- Business rules

- Data owners
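As a rough illustration of how business metadata helps users find the right data, the sketch below models a catalog entry and a simple search over it. The `CatalogEntry` class, the `search` function, and the sample entries are all hypothetical and do not reflect any particular product's data model.

```python
from dataclasses import dataclass, field

@dataclass
class CatalogEntry:
    name: str
    title: str
    description: str
    owner: str
    tags: set = field(default_factory=set)
    business_terms: set = field(default_factory=set)

def search(entries, text="", tag=None, owner=None):
    """Return names of entries matching free text and optional tag/owner filters."""
    text = text.lower()
    results = []
    for entry in entries:
        # Free text is matched against title, description, and business terms.
        haystack = " ".join(
            [entry.title, entry.description, *entry.business_terms]
        ).lower()
        if text and text not in haystack:
            continue
        if tag and tag not in entry.tags:
            continue
        if owner and entry.owner != owner:
            continue
        results.append(entry.name)
    return results

entries = [
    CatalogEntry("crm.contacts", "Customer contacts",
                 "Active customer contact details", owner="sales-ops",
                 tags={"pii", "customer"}, business_terms={"Customer"}),
    CatalogEntry("erp.invoices", "Invoices",
                 "Issued invoices by customer and quarter", owner="finance",
                 tags={"finance"}, business_terms={"Invoice", "Revenue"}),
]

print(search(entries, "customer"))             # both entries mention customers
print(search(entries, "customer", tag="pii"))  # narrowed by a user-defined tag
print(search(entries, owner="finance"))        # filtered by data owner
```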

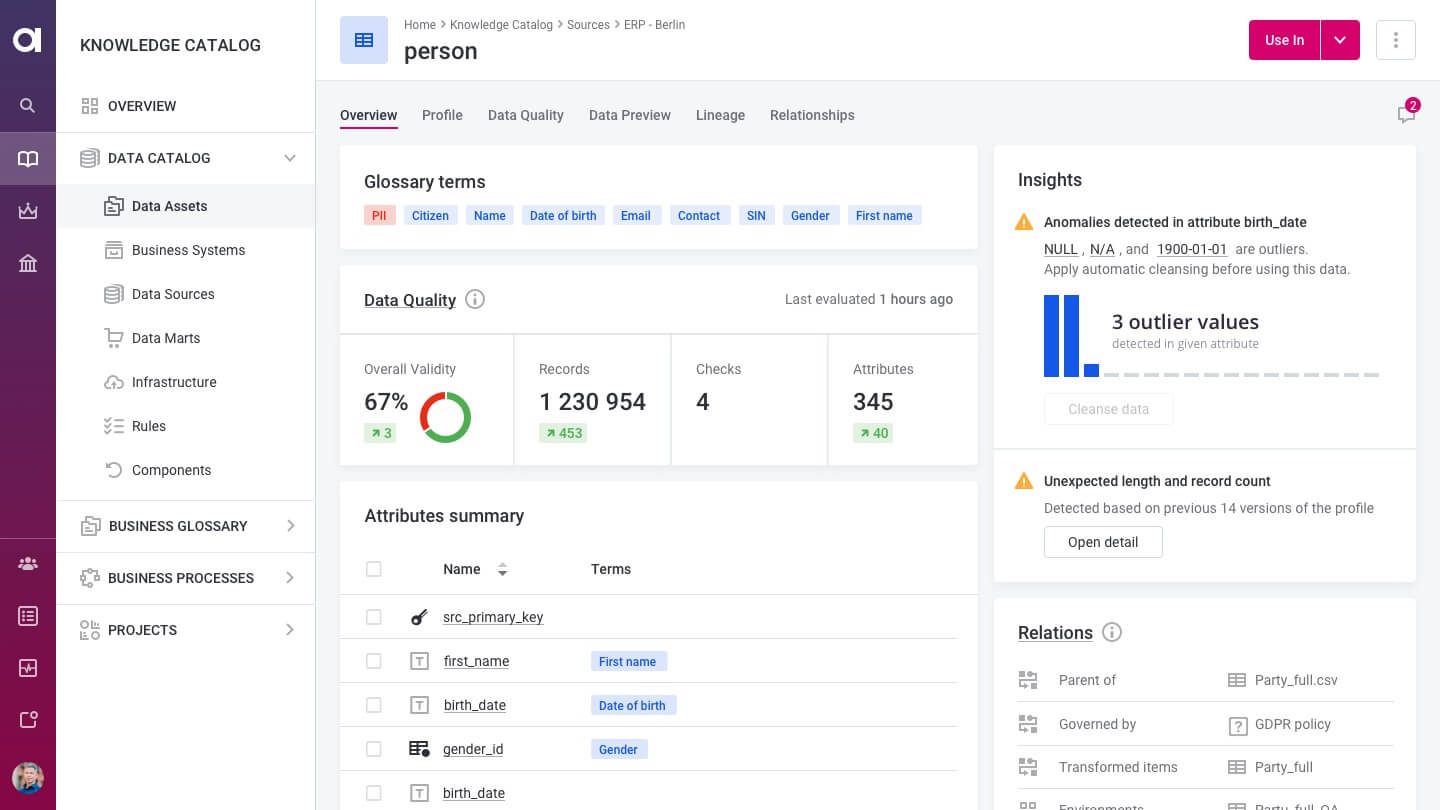

Here is how this metadata can be displayed for a specific data set in a data catalog:

Additional metadata contains more granular details about data stakeholders, including original source system locations, comments from users on use cases and suitability, and versioning histories that track ongoing edits, modifications, and changes in ownership.

Modern data catalogs can also track data quality, generate data lineage, or enable users to wrangle and prepare data. Using AI for automating various use cases is not uncommon either. These include:

- Detecting irregularities in a data set that has been recently updated

- Adding business terms and tags to new data sets

- Detecting potentially related data sets

- Improving search experience

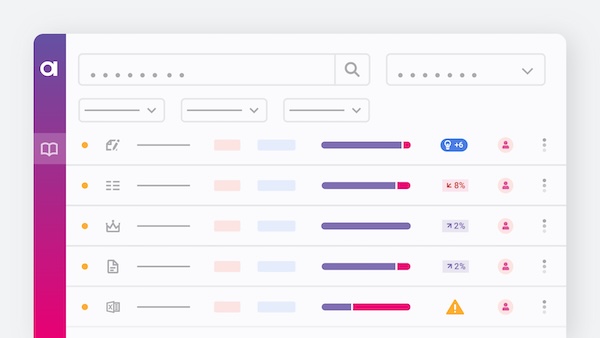

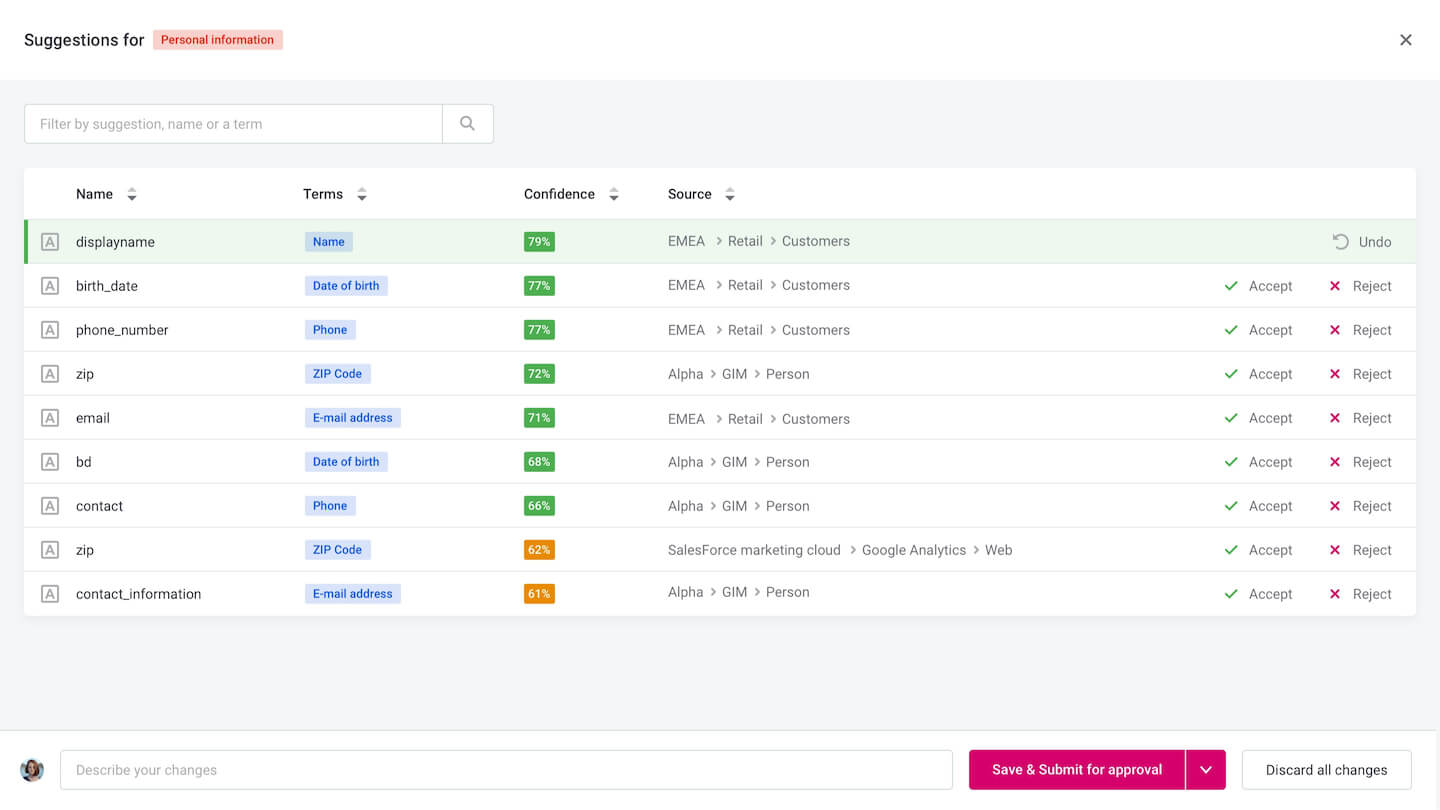

Here is an example of suggestions for “tagging” data generated by an AI-powered data catalog based on how users tagged other data sets.
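One simple way to approximate this kind of suggestion is to compare a new data set's column names with those of data sets users have already tagged. The sketch below uses Jaccard similarity as a stand-in heuristic; it is an assumption for illustration, not how any specific AI-powered catalog works, and the `suggest_tags` function and sample data are hypothetical.

```python
from collections import defaultdict

def jaccard(a, b):
    """Share of column names two data sets have in common."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0

def suggest_tags(new_columns, tagged_datasets, min_score=0.3):
    """Score each tag by the best column overlap of any data set carrying it."""
    scores = defaultdict(float)
    for dataset in tagged_datasets:
        similarity = jaccard(new_columns, dataset["columns"])
        for tag in dataset["tags"]:
            scores[tag] = max(scores[tag], similarity)
    # Highest score first; ties are broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(tag, round(score, 2)) for tag, score in ranked if score >= min_score]

existing = [
    {"columns": ["customer_id", "email", "phone"], "tags": ["customer", "pii"]},
    {"columns": ["order_id", "customer_id", "amount"], "tags": ["sales"]},
    {"columns": ["sensor_id", "reading"], "tags": ["iot"]},
]
suggestions = suggest_tags(["customer_id", "email", "address"], existing)
print(suggestions)  # [('customer', 0.5), ('pii', 0.5)]
```

The new data set shares two of four distinct columns with the first tagged data set, so its tags clear the threshold, while "sales" (one of five columns) and "iot" (none) do not.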

Data catalog benefits

Data catalogs enable various users within an organization to do their work faster and better.

Here are some examples.

Reducing the time it takes to find the right data

By now, it’s a well-known fact that data scientists spend 50 to 80% of their time locating, accessing, and preparing data before they can use it. Cataloging critical business data enables data scientists and other data-dependent users to find the right data faster thanks to all the available metadata. They can instantly see the source, data sample, and data quality characteristics to understand whether the data set they found fits their purpose.

Additionally, they can consult data lineage for more context or use AI-powered relationship detection to find similar or related data assets. Having a centralized place for data discovery helps these users to eliminate the bottlenecks associated with a lack of trust in data or lack of visibility into the organization’s data landscape.

One important example is sales lead data, which can originate on multiple platforms and marketing channels. Cataloging leads exported from LinkedIn Sales Navigator or other lead generation tools saves users time by eliminating the need to manually search for and extract that data. This can increase productivity and enable users to focus on more important tasks.

Accelerating data governance

The catalog’s stored metadata is key to beginning a data governance initiative. It helps create a baseline for stakeholders and data governance activities by providing insight into the current state and nature of an organization's data, how it is collected, created, managed, and where it overlaps.

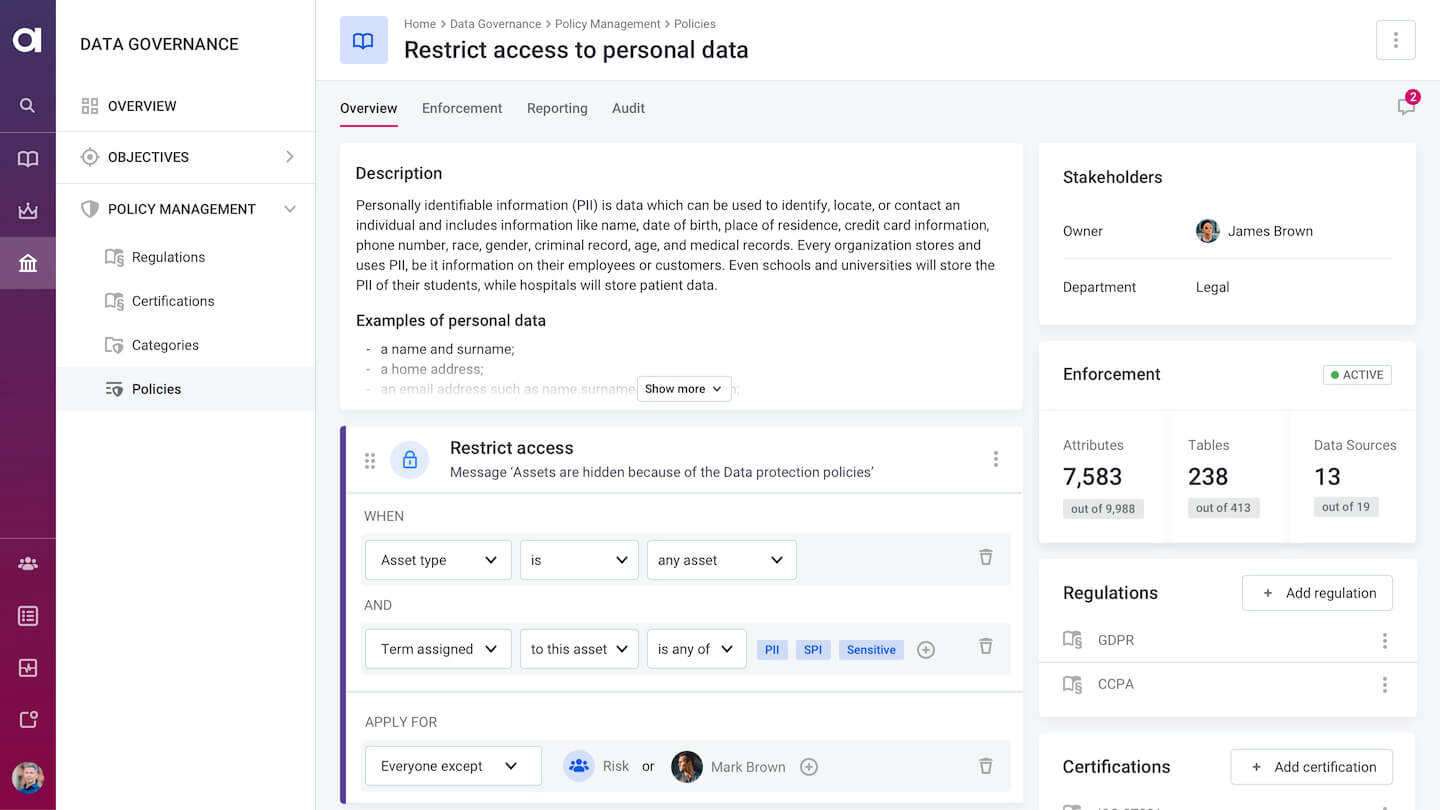

Additionally, data governance frameworks and policies can be documented (and even enforced) in a data catalog. This brings us to the next benefit.

Facilitating regulatory compliance

A data catalog is a great tool for managing data privacy and protection requirements. One way it helps is by letting data protection officers catalog and manage regulatory requirements like GDPR and CCPA. It also enables them to generate regular reports of PII data locations. They can track irregularities, such as sensitive data appearing where it shouldn’t, and immediately address these issues with data or system owners.
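A report of PII locations could be approximated by scanning sample values from each cataloged column. The sketch below is a simplified illustration under stated assumptions: the two regular expressions are deliberately loose, and the column names, the sample values, and the `approved` list are hypothetical. Production-grade PII detection typically needs far more robust rules.

```python
import re

# Deliberately simple patterns; real detection rules would be stricter.
PII_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def find_pii(samples):
    """Map each column to the PII types found in it and how many values matched."""
    report = {}
    for column, values in samples.items():
        for kind, pattern in PII_PATTERNS.items():
            hits = sum(1 for value in values if pattern.search(value))
            if hits:
                report.setdefault(column, {})[kind] = hits
    return report

samples = {
    "crm.contacts.email": ["ann@example.com", "bob@example.org"],
    "hr.staff.tax_id": ["123-45-6789", "987-65-4321"],
    "web.logs.notes": ["called back", "sent to carl@example.com", "ok"],
}
pii_report = find_pii(samples)

# Columns that are expected to hold PII (hypothetical policy list).
approved = {"crm.contacts.email", "hr.staff.tax_id"}
unexpected = sorted(set(pii_report) - approved)
print(unexpected)  # ['web.logs.notes']
```

Here the free-text notes column contains an email address even though it is not on the approved list, which is the kind of irregularity a data protection officer would raise with the system owner.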

Impact and root cause analysis

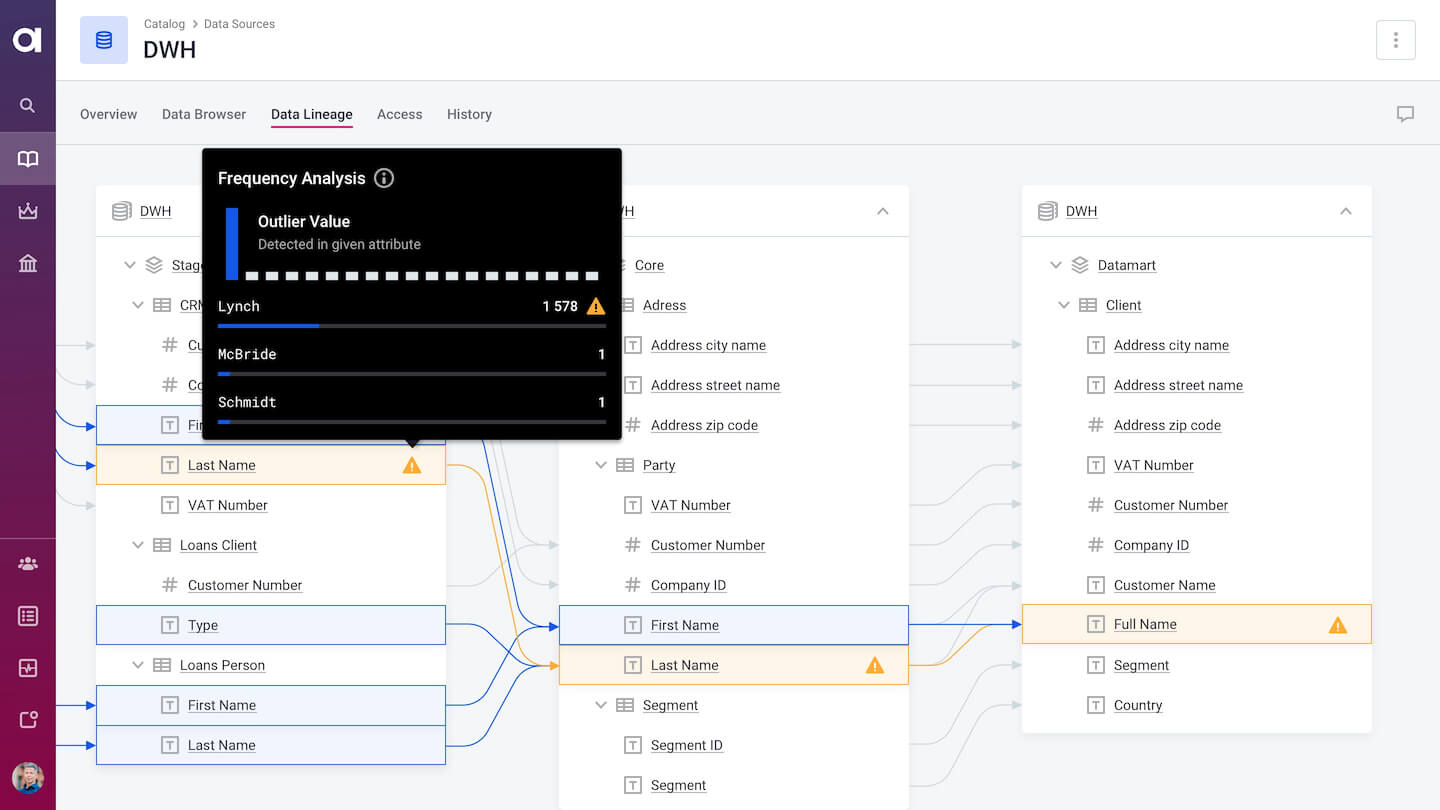

The bigger the data catalog becomes, the greater its reach in assessing the impact of changes to a given dataset. By closely examining the metadata relationships around that dataset, data engineers and IT can determine how a change will affect downstream reporting tools and other systems.

Likewise, if an adverse event does happen, a data catalog can help track down its root cause. Suppose the numbers in a new quarterly financial report don’t make sense. In this case, a business analyst can look at the data lineage for this report and spot the anomaly or data quality (DQ) issue that “broke the report.”
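Both analyses can be viewed as traversals of a lineage graph: impact analysis walks downstream from a changed dataset, while root cause analysis walks upstream from a broken report. The sketch below assumes lineage is available as a simple adjacency map; the asset names and helper functions are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: each source maps to assets derived directly from it.
LINEAGE = {
    "crm.accounts": ["staging.accounts"],
    "erp.invoices": ["staging.invoices"],
    "staging.accounts": ["warehouse.revenue"],
    "staging.invoices": ["warehouse.revenue"],
    "warehouse.revenue": ["report.quarterly_financials", "dashboard.sales"],
}

def reachable(graph, start):
    """Breadth-first traversal returning every asset reachable from `start`."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen

def invert(graph):
    """Flip edge direction so traversal runs upstream instead of downstream."""
    inverted = {}
    for source, targets in graph.items():
        for target in targets:
            inverted.setdefault(target, []).append(source)
    return inverted

# Impact analysis: what is affected if erp.invoices changes?
impacted = reachable(LINEAGE, "erp.invoices")

# Root cause analysis: which upstream assets feed the broken report?
candidates = reachable(invert(LINEAGE), "report.quarterly_financials")

print(sorted(impacted))
print(sorted(candidates))
```

An analyst would then inspect the upstream candidates, for example by checking their recent data quality results, to find the one that introduced the problem.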

The must-have features of modern data catalogs

Traditionally, data catalogs have been all about collecting as much metadata as possible about data and making it easy to find with search and filtering. These features are still critical today, but data catalogs have come a long way since then in three respects: the amount and types of metadata they can capture and store, the automation they support, and their convergence with other tools and activities, such as data quality and data preparation. Thanks to these innovations, data catalogs have become not only more user-friendly but also much more useful.

Let’s take a look at the features you should be looking for in a modern data catalog:

- Data discovery and metadata capture: Comprehensive data discovery is dependent on flexible connectivity to all necessary source systems, including applications and databases. Given the variety of data sources, modern data catalogs should provide a number of pre-built adapters to enable easy integration.

- Search and filtering: Search is still arguably the most important feature of data catalogs. If implemented well, it allows users to productively explore and quickly find the datasets that are relevant for them. While both simple and complex search requests should be supported, it is even better if AI is used to give users relevant suggestions.

- Business glossary: Business glossaries let organizations document their most important business terms and agree on their meaning, and it’s common for modern data catalogs to come with business glossaries out of the box. This integration enables both business and technical terms to be assigned to any cataloged data assets manually or automatically. Next-generation data catalogs also allow associating data quality rules with business terms to enable automated data quality monitoring.

- Data quality monitoring: Inventoried datasets benefit from ongoing data quality checks. Who wants to use data riddled with duplicates, missing values, and formatting inconsistencies? This is an advanced feature that very few data catalog solutions can boast.

- Data lineage: Data lineage tracks the origin, destination, and transformation of any data asset in the data catalog. As mentioned earlier, users can use data lineage to help track and understand data changes as part of data impact analysis or root cause analysis. It is also useful for preparing reports mandated by regulations like BCBS-239.

- Social collaboration: Given the size difference between the typically smaller group of dataset creators and the larger consumer community, collaboration between the two is essential. Features such as commenting, upvoting, and sharing help speed up data adoption and give users an organic way to provide feedback and curate datasets.

- Data marketplace: Once opened for business, the data catalog is not only a central place for users to find data but also a resource for internal customers to download data for productive use in other applications and reporting. However, it is critical that data access be governed by prescribed policies that have been applied to data domains and role authorizations.

- Customization: Every organization is different and deals with unique metadata varieties. That’s why data catalogs need to be flexible enough to enable the management of any kind of metadata, not just source systems and data lakes. These could be BI reports, APIs, or data processing servers. Support for adding custom metadata attributes is critical, too.

See Essential Features of Data Catalogs for an in-depth look at these features.
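As an illustration of the data quality monitoring feature in the list above, the sketch below counts the three problems it mentions: duplicates, missing values, and formatting inconsistencies. The `profile_quality` function, the sample rows, and the date format rule are hypothetical; a real catalog would typically run such checks on a schedule and store the results as metadata.

```python
import re
from collections import Counter

def profile_quality(rows, key, formats):
    """Count duplicate keys, missing values, and format violations in dict rows."""
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = sum(count - 1 for count in key_counts.values() if count > 1)
    missing = Counter()
    invalid = Counter()
    for row in rows:
        for column, value in row.items():
            if value in (None, ""):
                missing[column] += 1
            elif column in formats and not re.fullmatch(formats[column], str(value)):
                invalid[column] += 1
    return {
        "duplicate_keys": duplicates,
        "missing": dict(missing),
        "invalid_format": dict(invalid),
    }

rows = [
    {"id": 1, "email": "ann@example.com", "signup": "2024-01-05"},
    {"id": 2, "email": "", "signup": "05/01/2024"},        # missing email, wrong date format
    {"id": 2, "email": "bob@example.com", "signup": "2024-02-11"},  # duplicate id
]
quality = profile_quality(rows, key="id", formats={"signup": r"\d{4}-\d{2}-\d{2}"})
print(quality)
# {'duplicate_keys': 1, 'missing': {'email': 1}, 'invalid_format': {'signup': 1}}
```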

Recap and concluding thoughts

Data catalogs provide a convenient means of locating useful datasets for data people of all kinds. Given the variety of features that modern solutions provide, data catalogs save precious time for data scientists, speed up and automate data governance, facilitate regulatory compliance, enable root cause and impact analysis, and much more.

Next-gen data catalogs meet the growing demand for data democratization and data enablement by including features such as data preparation, data quality monitoring, and data marketplaces, and by integrating AI- and metadata-based automation.