Two fundamental parts of most data management solutions are the data catalog and data quality tools. The data catalog allows you to locate and keep track of all your data, while data quality tools will ensure it looks and works the way you want it to.

While both of these functionalities can work independently, working cooperatively has many advantages. Namely, the data catalog can lead to better automation of data quality tasks, making them less time-consuming. Read on to learn more about how the data catalog and data quality tools benefit each other.

How a data catalog and data quality work together

The data catalog serves as a central access point connected to all your data sources. The catalog doesn’t store the data itself, but it makes that data easier for all authorized users to locate, scan, and understand.

One of the most useful functions of the data catalog is that both automated processes and users can assign business terms to data, sorting it into categories and giving it meaning. After that, the AI functionality of the catalog can locate similar data and automatically apply these terms to it. It can also assign them to incoming data as it arrives.

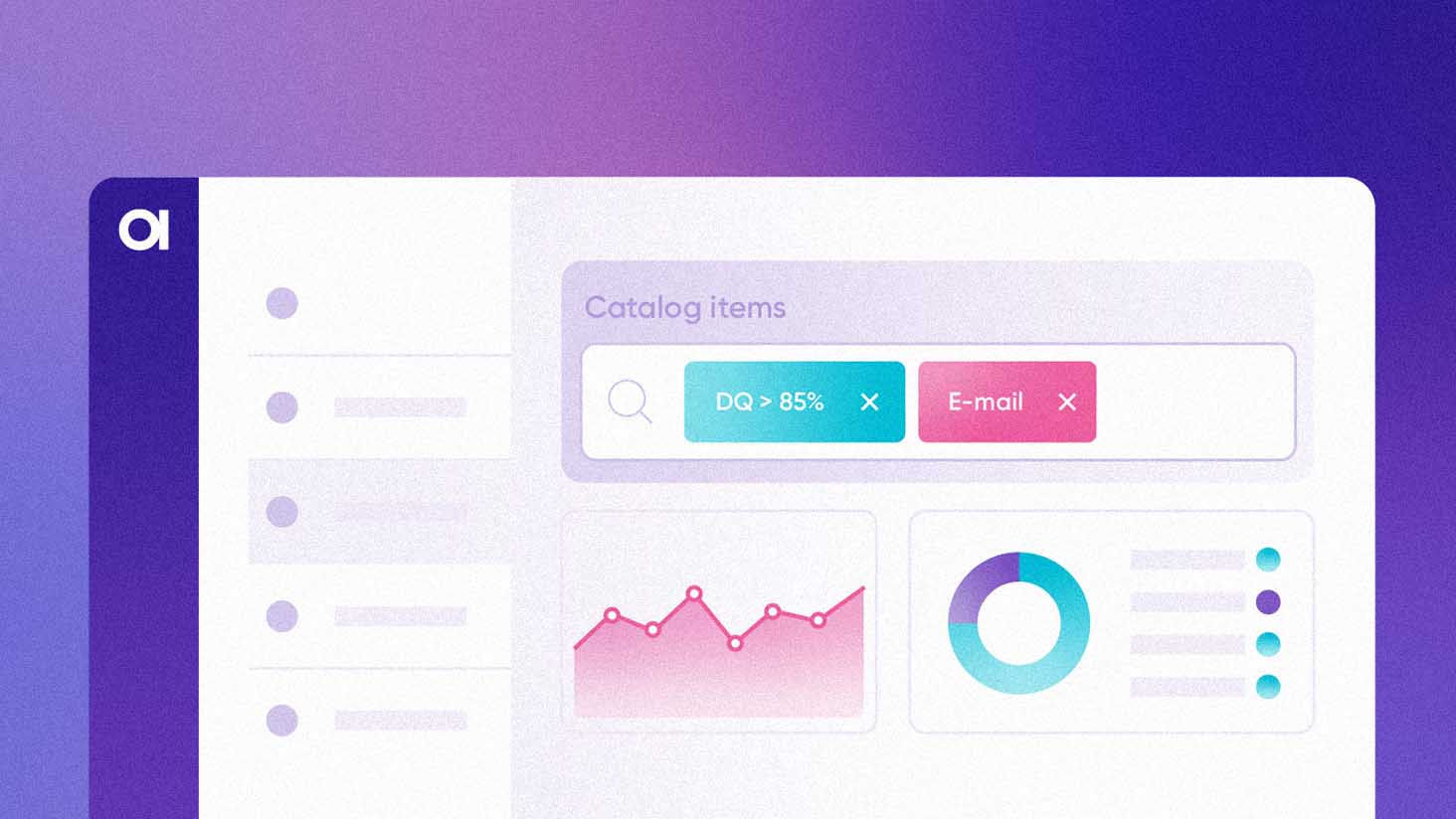

This is helpful for data quality automation because you can run data quality (DQ) processes on any data with a specific tag instead of manually applying data quality rules to particular data sets. The catalog can automatically apply the necessary rules based on whatever business term you’ve tagged to the data.

For example, let’s say you want to validate email addresses used by different departments. You assign several rules to the tag “email” in the catalog, and the catalog then automatically applies those rules to any data with that tag.
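The email example can be sketched in a few lines of Python. This is only an illustration of the idea of tag-driven rules, not how any particular catalog implements it: the rule registry `RULES_BY_TERM`, the tag mapping `CATALOG_TAGS`, the data set names, and the function `evaluate_attribute` are all hypothetical.

```python
import re

# Deliberately simple email check for illustration; real validation is stricter.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical rule registry: business term -> list of (rule name, check function).
RULES_BY_TERM = {
    "email": [
        ("not_empty", lambda v: bool(v and v.strip())),
        ("valid_format", lambda v: bool(v) and EMAIL_PATTERN.match(v.strip()) is not None),
    ],
}

# Hypothetical catalog metadata: (data set, attribute) -> assigned business terms.
CATALOG_TAGS = {
    ("crm.contacts", "email_address"): ["email"],
    ("billing.invoices", "contact_mail"): ["email"],
    ("billing.invoices", "amount"): ["amount"],
}


def evaluate_attribute(terms, values):
    """Return the pass rate of every rule attached to the attribute's terms."""
    results = {}
    for term in terms:
        for rule_name, check in RULES_BY_TERM.get(term, []):
            passed = sum(1 for v in values if check(v))
            # An attribute with no values is reported as fully passing.
            results[f"{term}.{rule_name}"] = passed / len(values) if values else 1.0
    return results


if __name__ == "__main__":
    sample = ["ann@example.com", "bob@example", "", None]
    for (data_set, attribute), terms in CATALOG_TAGS.items():
        print(data_set, attribute, evaluate_attribute(terms, sample))
```

The point of the design is that rules are attached to the term, not to a table: any attribute that later receives the “email” tag is covered without extra configuration.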

The tags created within the catalog have many advantages for your DQ initiative. They make the entire process much more scalable and efficient.

#1 Automating data quality monitoring

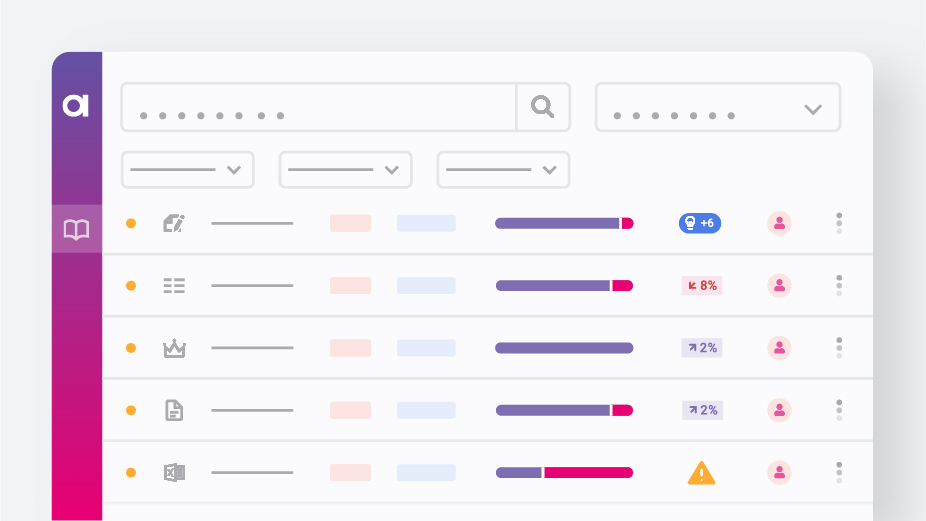

Suppose you are a data steward responsible for email data, and your use case is to monitor email data quality issues in every possible business system. This is much easier to do with the catalog because the attributes and metadata of interest are already readily available. Also, without any configuration needed, the data catalog will recognize anomalies, such as unexpected changes in minimums, averages, or sums for transactional data.
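To make the idea of an “unexpected change” concrete, here is a minimal sketch that compares the latest value of a profiling metric (for example, a daily sum of transactions) with its recent history using a z-score. The article does not say which detection method the catalog uses, so the z-score approach, the function name `is_anomalous`, and the threshold of 3 are assumptions made for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations
    away from the mean of the previously observed values."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a constant history is unexpected
    return abs(latest - mu) / sigma > threshold


if __name__ == "__main__":
    daily_sums = [100.0, 102.0, 98.0, 101.0, 99.0]
    print(is_anomalous(daily_sums, 100.5))
    print(is_anomalous(daily_sums, 140.0))
```

A production system would usually also account for trend and seasonality; this sketch only shows the basic comparison against history.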

The catalog goes beyond traditional data quality monitoring: it performs all these tasks and also presents the resulting data quality metadata. This synergy allows anyone exploring or discovering data in the catalog to see its quality. Data quality information then stays up to date together with the rest of the metadata. One of the most common examples of automated DQ is data observability (see how it works here).

#2 Improving data discovery

Data quality and the catalog can also work together to help you find the best data sets for your project. You’ll know which sets you need from the business terms applied in the catalog and which are the highest quality from your data quality tool.

Perhaps the best part is that you can assess the quality of data you didn’t even know you had. Since the catalog can automatically apply business terms to data, you can run a DQ evaluation of all data tagged with a specific term and then evaluate data you didn’t tag yourself or maybe didn’t know about.
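As a rough sketch of how term-based discovery and quality scores combine, the snippet below filters catalog entries by business term and ranks them by quality. The `CatalogEntry` structure, the 0 to 1 `quality_score`, and the function `find_best` are hypothetical and stand in for whatever your catalog and DQ tool expose.

```python
from dataclasses import dataclass


@dataclass
class CatalogEntry:
    name: str
    terms: frozenset      # business terms assigned in the catalog
    quality_score: float  # share of passed DQ checks, from 0.0 to 1.0


def find_best(entries, term, min_quality=0.0):
    """Return entries tagged with `term`, highest quality first."""
    matches = [
        e for e in entries
        if term in e.terms and e.quality_score >= min_quality
    ]
    return sorted(matches, key=lambda e: e.quality_score, reverse=True)


if __name__ == "__main__":
    entries = [
        CatalogEntry("crm.contacts", frozenset({"email", "name"}), 0.97),
        CatalogEntry("legacy.newsletter", frozenset({"email"}), 0.61),
        CatalogEntry("billing.invoices", frozenset({"amount"}), 0.99),
    ]
    for entry in find_best(entries, "email", min_quality=0.5):
        print(entry.name, entry.quality_score)
```

Because the terms were applied automatically, a data set such as the hypothetical `legacy.newsletter` shows up in the results even if nobody on the team tagged it by hand.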

#3 Streamlining on-demand DQ evaluation

Suppose you need to know the data quality issues of a specific data set that hasn’t been checked before. Once you connect it to the catalog, most of this information will already be available. You can click a button and evaluate data quality for any data set.

It also allows you to do these evaluations in one tool instead of bouncing from a profiler to different data storage systems and working with isolated data quality management tools. You can quickly adjust terms and rules and apply them to attributes in the catalog.

#4 Simplifying data preparation

When preparing data without the catalog, you would have to locate all the data and understand it on an attribute-by-attribute basis. The catalog allows you to find data of interest immediately and presents you with the metadata needed to decide what should be prepared and what kind of preparation is necessary.

You will also be able to work with the data sets that you find and transform them according to your needs. The catalog lets you find the data, evaluate its quality, and prepare it. For example, data profiling from the catalog might reveal that certain emails in your registry have the prefix ":mail:to:" attached. You can then run transformations to remove the prefix.
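The prefix cleanup could look like the following minimal sketch. Only the prefix string `":mail:to:"` comes from the example above; the function names and the sample values are hypothetical.

```python
PREFIX = ":mail:to:"


def strip_mail_prefix(value):
    """Remove the stray prefix from one email value, if it is present."""
    value = value.strip()
    if value.startswith(PREFIX):
        return value[len(PREFIX):]
    return value


def count_prefixed(values):
    """Profile step: how many values carry the unwanted prefix?"""
    return sum(1 for v in values if v.strip().startswith(PREFIX))


if __name__ == "__main__":
    emails = [":mail:to:ann@example.com", "bob@example.com", " :mail:to:eve@example.com"]
    print(count_prefixed(emails))
    print([strip_mail_prefix(e) for e in emails])
```

Profiling first and transforming second mirrors the workflow described above: the profile tells you whether the cleanup is needed at all before you change any data.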

#5 Helping discover root causes

One of the most useful data catalog capabilities is root cause analysis through data lineage. Once you integrate data quality into your data lineage, it shows you your data’s quality throughout its lifecycle, from its creation to its storage in your system and its eventual use in your business.

You can use data lineage to trace problematic or low-quality data back to its source. Once you find what’s causing the drop in quality, you can take proactive steps to correct it.
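Tracing a quality problem upstream can be sketched as a walk over the lineage graph. In this illustration the lineage is a plain dictionary that maps each data set to its direct sources, and each data set has a quality score; the data set names, the scores, the 0.9 threshold, and the function `find_root_causes` are all hypothetical.

```python
from collections import deque

# Hypothetical lineage: data set -> the data sets it is built from.
UPSTREAM = {
    "marketing_report": ["customer_mart"],
    "customer_mart": ["crm_contacts", "web_signups"],
    "crm_contacts": [],
    "web_signups": [],
}

# Hypothetical DQ scores attached to each node of the lineage.
QUALITY = {
    "marketing_report": 0.71,
    "customer_mart": 0.74,
    "crm_contacts": 0.99,
    "web_signups": 0.62,
}


def find_root_causes(start, upstream, quality, threshold=0.9):
    """Follow low-quality nodes upstream from `start` and return those
    whose own sources are all healthy (the likely origins of the issue)."""
    roots = []
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if quality[node] >= threshold:
            continue  # healthy node, nothing to trace from here
        failing_sources = [s for s in upstream.get(node, []) if quality[s] < threshold]
        if not failing_sources:
            roots.append(node)  # low quality starts at this node
        for source in failing_sources:
            if source not in seen:
                seen.add(source)
                queue.append(source)
    return roots


if __name__ == "__main__":
    print(find_root_causes("marketing_report", UPSTREAM, QUALITY))
```

In this made-up lineage, the walk from the report stops at `web_signups`, the only low-quality data set with no low-quality sources of its own.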

Learn more about augmented data lineage in this article.

Conclusion

Syncing data catalogs with data quality tools is essential to any successful data management project. Keeping them connected has numerous benefits, including time savings, reduced risk of errors and data quality issues, and increased data accuracy. Whether it’s DQ monitoring all in one place, making on-demand DQ evaluation easier, finding what data needs preparation, or discovering problematic data sources, it is all much more efficient and smooth when the catalog and DQ work together.