We live in an age of data proliferation and innovation. While big organizations are sticking to their on-premises data storage solutions and growing their data centers, they’re also experimenting with cloud solutions. Some, in an attempt to streamline their operations and focus on their core business, have moved their entire IT infrastructure off premises.

As these companies embrace digital transformation and become data-driven, they increasingly generate, collect, and use vast amounts of data spread across teams and departments. That data lives in locally stored Excel spreadsheets and CSV files, data warehouses, Google Drive, Amazon S3, Azure, Hadoop, Snowflake, ERP systems, cloud applications: you name it. While data scientists try to make sense of all this data and give businesses actionable insights, they spend at least 50% of their time finding, cleaning, and preparing data.

One lake for all the data

Data lakes partially solve this problem: data from all kinds of sources is available to those who need it, and admins manage access rights for just one system. However, while data analysts and scientists now have all the data at their fingertips, they face a different question: “Which of these tables actually contain the data that I need for my analysis?” Data lake or no data lake, data scientists still spend too much time on non-essential activities.

A data catalog to rule your data lake

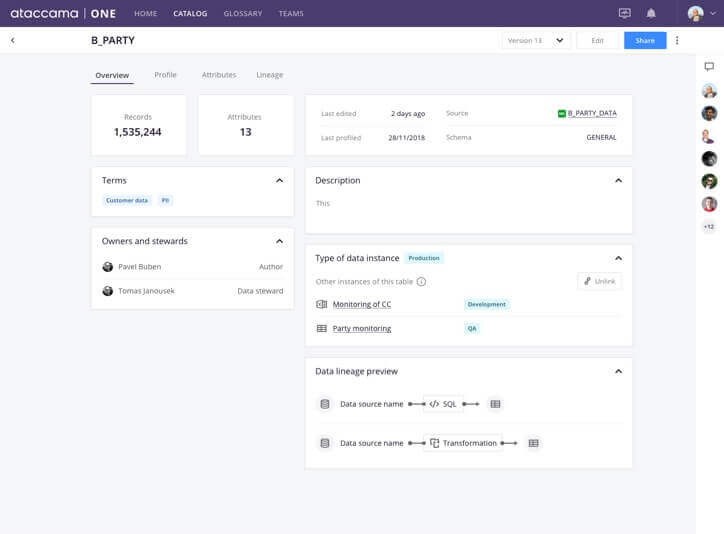

This is where the data catalog comes in. A data catalog is the Google (or Amazon) of data within an organization. It is a place where people come to find the data they need to solve a business or technical problem at hand. The catalog does not contain the data itself but rather information about the data: its source, owner, and other metadata if available.

What happens when you populate your data lake with data

Let’s take a look at a typical scenario of populating a data lake, the inevitable problems that ensue, and how implementing a data catalog can help eliminate them.

During data lake implementation, the Data Management team wants a remote Accounting Department to join the initiative and export their data. The Accounting Department exports its data to partitioned Hive tables.

Problem 1: Metadata is lost or unavailable

After the data has been loaded from the Accounting Department to the data lake, all the internal knowledge about that data is either missing (because it was never there in the first place) or lost. Questions like these go unanswered:

- What is the quality of this data?

- Where is this data used?

- Who is the owner of the data?

- What is inside this data?

- What is the source of this data?

- How often is this data updated?

Solution: Metadata management

Once you add a data source (like the Accounting Department’s database), it is necessary to add all of the important metadata about that source: the owner, business terms and categories associated with the source, the origin of the source, and any other metadata important for your organization. As a result, data scientists can search and filter by this metadata and find exactly the data they are looking for.
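
To make the idea of searching and filtering by metadata concrete, here is a minimal Python sketch. The `CatalogEntry` fields, the `search` function, and the sample sources are all hypothetical and chosen only for illustration; a real catalog would store far richer metadata and use a proper search index.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Metadata about one data source; the data itself stays in the lake."""
    name: str
    owner: str
    origin: str
    business_terms: set = field(default_factory=set)


def search(entries, term=None, owner=None):
    """Return the entries that match every filter that was supplied."""
    results = []
    for entry in entries:
        if term is not None and term not in entry.business_terms:
            continue
        if owner is not None and entry.owner != owner:
            continue
        results.append(entry)
    return results


# Hypothetical catalog content, for illustration only.
catalog = [
    CatalogEntry("accounting.invoices", "Accounting Department", "ERP export", {"invoice", "revenue"}),
    CatalogEntry("accounting.payroll", "Accounting Department", "ERP export", {"salary"}),
    CatalogEntry("marketing.campaigns", "Marketing", "CSV upload", {"campaign", "revenue"}),
]

revenue_sources = [entry.name for entry in search(catalog, term="revenue")]
marketing_revenue = [entry.name for entry in search(catalog, term="revenue", owner="Marketing")]
```

Filtering on the business term alone returns both revenue-related sources, and adding the owner filter narrows the result to the Marketing source.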

Crowdsourcing metadata and getting all teams and departments on board is key to catalog adoption. When everyone knows that all data is cataloged, they are much more inclined to use it.

Problem 2: Sensitive data may get into the data lake

Because new columns can be added to the Accounting Department’s database tables, new data—including sensitive data—can be loaded into the data lake without anyone’s knowledge. This problem is especially relevant for streaming data, where no strict interface is defined for incoming data.
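
One simple way to notice this kind of drift is to compare each incoming record with the schema that was registered when the source was first loaded. The sketch below assumes a hypothetical registered schema and record; the column names are invented for illustration.

```python
def new_columns(registered_schema, incoming_record):
    """Columns present in the incoming record but absent from the registered schema."""
    return sorted(set(incoming_record) - set(registered_schema))


# Hypothetical schema captured when the source was first registered.
registered = {"invoice_id": "string", "amount": "decimal"}

# A later record that carries a column nobody announced.
incoming = {"invoice_id": "INV-0042", "amount": "120.00", "employee_salary": "4200"}

unexpected = new_columns(registered, incoming)
```

Here `unexpected` contains `employee_salary`, which is exactly the kind of column that should be reviewed before it becomes visible to everyone.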

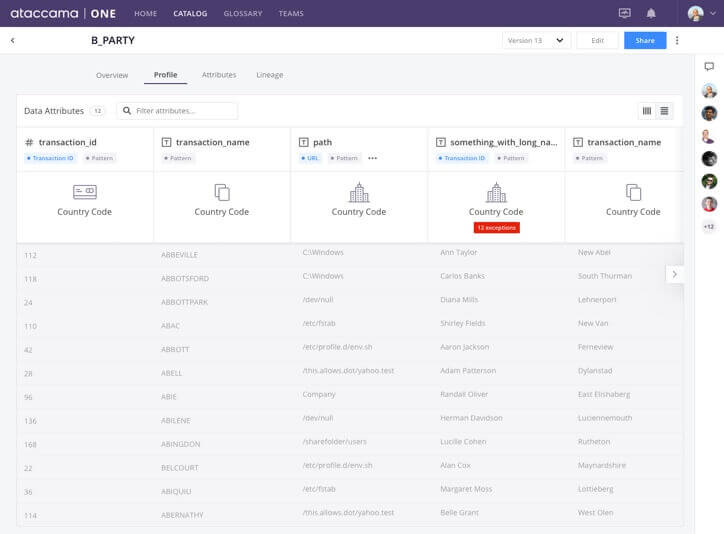

Solution: Automatic profiling, tagging, and data policies in the data catalog

When new data reaches the catalog, it is automatically profiled and tagged to capture its semantic meaning. With permissions and data policies in place, when a specific kind of data is detected (for example, a column with salary data), that data is masked, hashed, or hidden for the majority of users. Only users in authorized groups, such as management, see the original values.
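
The tag-then-mask flow can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any particular catalog implements it: the keyword rules in `TAG_KEYWORDS`, the `management` group name, and the sample row are hypothetical, and real profiling typically inspects the values themselves rather than only the column names.

```python
import hashlib

# Hypothetical tagging rules: a keyword in the column name maps to a semantic tag.
TAG_KEYWORDS = {"salary": "sensitive:salary", "ssn": "sensitive:national_id"}

# Hypothetical groups that are allowed to see the original values.
PRIVILEGED_GROUPS = {"management"}


def tag_columns(columns):
    """Assign a semantic tag to each column whose name contains a keyword."""
    tags = {}
    for column in columns:
        for keyword, tag in TAG_KEYWORDS.items():
            if keyword in column.lower():
                tags[column] = tag
    return tags


def mask(value):
    """Replace a value with a truncated SHA-256 digest."""
    return hashlib.sha256(str(value).encode("utf-8")).hexdigest()[:12]


def apply_policy(row, tags, user_groups):
    """Return the row as a user in the given groups is allowed to see it."""
    if PRIVILEGED_GROUPS & set(user_groups):
        return dict(row)
    return {col: mask(val) if col in tags else val for col, val in row.items()}


row = {"employee": "A. Novak", "monthly_salary": 4200}
tags = tag_columns(row.keys())
analyst_view = apply_policy(row, tags, ["analysts"])
manager_view = apply_policy(row, tags, ["management"])
```

The analyst sees a hashed value in place of the salary, while the manager sees the row unchanged. Hashing is only one option; a policy could just as well hide the column entirely.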

Problem 3: Data quality is unknown

When a user takes a data set directly from the lake, they don’t know its quality. Either they have to profile the data on demand in a standalone tool (which takes time), or they use unreliable data in their analysis.

Solution: Profiling, data quality, and data preparation features

When a user finds a data set in the data catalog, they know exactly what the quality of that asset is. Thanks to data profiling, they can see duplicate, empty, and non-standard cells in any column and judge whether to use that data set or not. If a data catalog has data preparation capabilities, the user can immediately cleanse and wrangle the data set and make it usable for their analysis.
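
As a rough illustration of what a column profile contains, the following Python sketch counts empty, duplicate, and non-standard cells. The `profile_column` function, the `INV-` pattern, and the sample values are assumptions made for this example; production profilers compute many more statistics.

```python
import re
from collections import Counter


def profile_column(values, pattern):
    """Count empty, duplicate, and non-standard cells in one column."""
    regex = re.compile(pattern)
    # Treat None and whitespace-only strings as empty cells.
    filled = [str(v).strip() for v in values if v is not None and str(v).strip() != ""]
    counts = Counter(filled)
    return {
        "total": len(values),
        "empty": len(values) - len(filled),
        # Every repeat beyond the first occurrence counts as a duplicate.
        "duplicates": sum(n - 1 for n in counts.values() if n > 1),
        # Non-standard means the cell does not fully match the expected format.
        "non_standard": sum(1 for v in filled if not regex.fullmatch(v)),
    }


# Hypothetical invoice identifiers expected to look like INV-0001.
invoice_ids = ["INV-0001", "INV-0002", "INV-0002", "", None, "inv3"]
report = profile_column(invoice_ids, r"INV-\d{4}")
```

For this sample, the profile reports six cells in total, two empty, one duplicate, and one that does not follow the expected format.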

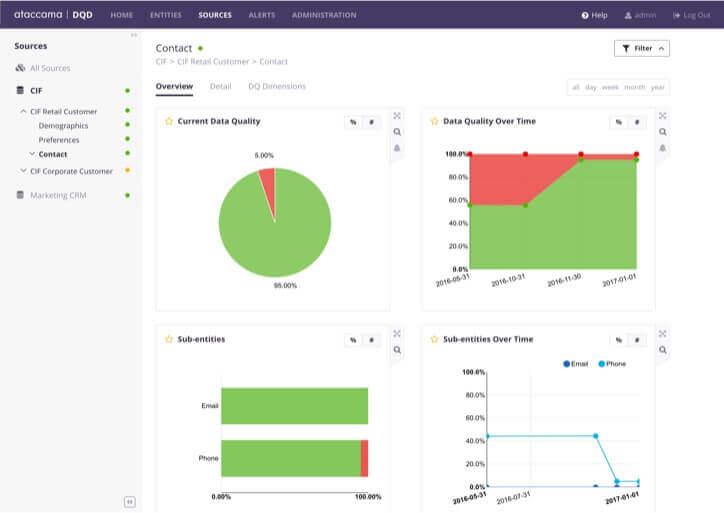

Another example that would ring a bell for most enterprises is a report generated on a regular basis, for example, every week in Tableau. The problem, in this case, is that the underlying data for that report may change. Without monitoring the quality of that underlying data, it is impossible to know whether that report is trustworthy.

Solution: Data quality monitoring as part of the data catalog

To prevent a report from being generated from dirty data, it is necessary to do three things: inventory reports in the data catalog, establish basic lineage between the underlying data and reports, and set up data quality monitoring. The win is likewise threefold: business users can easily find reports, data stewards can react to changes in data quality in the underlying source systems, and data engineers always know which report is affected if they make changes to a table in a database.
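
Once reports are inventoried and linked to their source tables, both questions (which reports does a table change affect, and which reports currently rest on poor data) become simple lookups. The sketch below assumes a hypothetical `LINEAGE` mapping, made-up quality scores between 0 and 1, and an arbitrary threshold of 0.95.

```python
# Hypothetical lineage: each report mapped to the tables it reads from.
LINEAGE = {
    "weekly_revenue_report": ["accounting.invoices", "sales.orders"],
    "headcount_report": ["hr.employees"],
}


def reports_affected_by(table):
    """Reports that read from the given table (useful before changing it)."""
    return sorted(report for report, tables in LINEAGE.items() if table in tables)


def untrusted_reports(quality_scores, threshold=0.95):
    """Reports with at least one source table below the quality threshold."""
    flagged = {}
    for report, tables in LINEAGE.items():
        # A table with no recorded score is treated as failing the check.
        bad = [t for t in tables if quality_scores.get(t, 0.0) < threshold]
        if bad:
            flagged[report] = bad
    return flagged


# Hypothetical scores produced by data quality monitoring.
scores = {"accounting.invoices": 0.99, "sales.orders": 0.80, "hr.employees": 0.97}

affected = reports_affected_by("sales.orders")
flagged = untrusted_reports(scores)
```

With these scores, only the weekly revenue report is flagged, and the lookup names `sales.orders` as the table a data steward should investigate.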

Problem 4: No understanding of data origin and relationships with other assets

A lot of transformations take place inside the data lake. Data scientists transform data and store new tables in the data lake. When data is exported to data warehouses, it is transformed there, too. When users find an asset in the data catalog, they want to understand where it sits in that transformation cycle. For business users, it is important to understand which data went into the creation of a report. For data engineers and IT, it is important to understand which reports will be affected if they make changes to a table. For data scientists, it is important to use the most relevant data set in their analysis, so they need as much information as possible about its origin and further use.

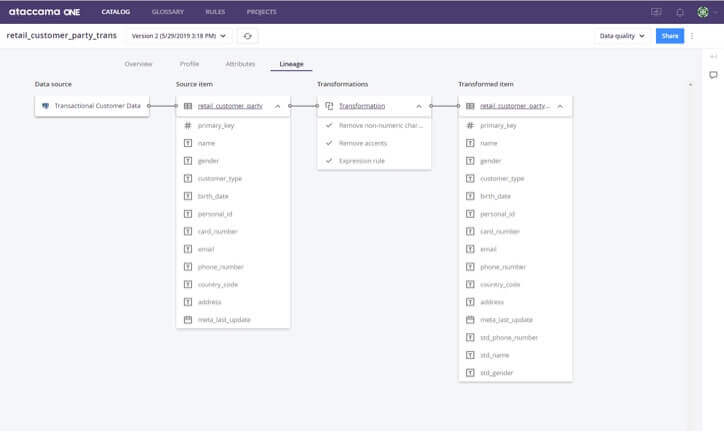

Solution: Data lineage and automatic relationship discovery

Data lineage is a hot topic in the data management industry, and every vendor has a different approach to providing this feature. Some methods are platform-specific: parsing SQL logs and Spark jobs, or integrating with ETL tools via REST APIs. Another option is to let users construct lineage manually.

These methods are complicated to implement and can never construct lineage for 100% of cases. SQL has many dialects, and some statements are simply impossible to parse reliably. A Spark job might get deleted even if the table it produced still exists. ETL tools store information about their transformations in different ways, and that information is often hard or impossible to access.

At Ataccama, our approach is platform-agnostic and generalist: our ML and AI algorithms detect similar data assets, foreign key–related assets, source assets, and all of the places where an asset is used. We also construct lineage for transformations happening inside of our platform.
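
To give a feel for how relationships can be inferred from the data alone, here is a sketch of one generic, well-known heuristic: a column is a foreign key candidate if almost all of its values appear in another column that holds unique values. This is not a description of Ataccama's algorithms; the function names, the 0.9 threshold, and the sample columns are assumptions made for illustration.

```python
def containment(child_values, parent_values):
    """Share of distinct child values that also occur in the parent column."""
    child = {v for v in child_values if v is not None}
    if not child:
        return 0.0
    return len(child & set(parent_values)) / len(child)


def foreign_key_candidates(columns, threshold=0.9):
    """Pairs (child, parent) where the parent is unique and covers the child."""
    candidates = []
    for parent_name, parent in columns.items():
        if len(set(parent)) != len(parent):
            continue  # a referenced key column must hold unique values
        for child_name, child in columns.items():
            if child_name != parent_name and containment(child, parent) >= threshold:
                candidates.append((child_name, parent_name))
    return candidates


# Hypothetical column samples taken from two tables.
columns = {
    "customers.id": [1, 2, 3, 4],
    "invoices.customer_id": [1, 1, 3, 4, 4],
    "invoices.amount": [120.0, 80.5, 80.5, 300.0, 15.0],
}

candidates = foreign_key_candidates(columns)
```

For these samples, the only candidate is `invoices.customer_id` referencing `customers.id`. A heuristic like this needs no access to SQL logs or ETL metadata, which is why value-based discovery works across platforms, although it can produce false positives that a user should confirm.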

Conclusion

Implementing a data lake is a massive undertaking, not only from a technological standpoint but also from a data governance one. Data lakes are a great solution for making data available to the people who need it, but they have inherent deficiencies that make them less usable and secure than they could be.

Data catalogs address these problems through automatic and manual processes that enrich data sets with a variety of metadata and form useful, protected, and audited data assets out of them. Ultimately, everyone is happy: business users can find the right information easily, data officers know that all sensitive data is at their fingertips and available only to the right users, and data engineers and architects understand the relationships between these data assets.